Use BCs And TCs To Improve LTE And LTE-A Infrastructure Timing

In its most recent Mobile Infrastructure and Subscribers Report, market research firm Infonetics forecast that the LTE market would almost double in 2013, easily passing the $10 billion mark for the first time. This explosive growth will cause growing pains as cellular carriers struggle to transform the largely circuit-switched network created for voice into a very fast packet-switched network, based on the Internet Protocol (IP), that is capable of carrying video to millions of subscribers simultaneously.

Not the least of the challenges that wireless carriers face will be posed by a decision they made years ago to use Global Positioning System (GPS) signals to provide time-of-day (ToD) synchronization for the basestations in their legacy time-division-multiplexed (TDM) backhaul networks. This choice always involved some complications. In the macro-cell era, areas such as urban canyons that did not have clear line-of-sight (LOS) access to GPS satellites, for example, had to be treated as special cases.

In the coming 4G/LTE era, however, precise timing is the sine qua non for media such as teleconferencing and video. As a result, using GPS for ToD synchronization is already creating a much bigger and more widespread challenge that will ultimately require moving to a new synchronization solution. Precise timing is also important because it directly affects radio spectral efficiency and data throughput.

The Next Step

Most mobile basestations use GPS/GNSS to acquire an all-important signal known as 1 pps, which is used to make the ToD calculation for next-second rollover and to synthesize the fundamental source radio frequencies. Since 4G/LTE will markedly expand the use of picocells and femtocells to ensure coverage and performance, the GPS/GNSS solution becomes even more problematic.

Urban environments—and small cells located indoors—typically don’t have an unobstructed LOS to the satellites. To make matters worse, the availability of inexpensive GPS jamming and spoofing technology is already a rising cause of concern among mobile infrastructure planners.

4G/LTE’s use of advanced technologies for beam forming such as multiple input multiple output (MIMO) and coordinated multipoint transmission (CoMP) also relies heavily on precise phase alignment between basestation radios. In brief, timing can be everything for 4G/LTE.

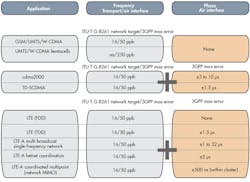

Figure 1 shows the relative criticality of timing for phase and frequency in various cellular network generations. The column comparing phase alignment offers the most striking data: from 0 for GSM to 500 ns for LTE-Advanced (LTE-A) coordinated multipoint (network MIMO). The ToD timing requirements are also critically important.

Comparisons with previous cellular generations are just as striking. GSM, for example, has no ToD timing accuracy requirement. UMTS TDD requires ≤2.5-µs accuracy. TD-SCDMA requires ≤3-µs accuracy. On the other hand, LTE-A/MIMO requires ≤0.5 µs, and LTE-A with interband aggregation requires ~0.1-µs accuracy.

The good news is that the transition from SONET/SDH or PDH E1/T1 to IP/Ethernet backhaul networks is already well underway and will continue for several years. The new backhaul network will be a more cost-effective broadband packet network that delivers vastly more bandwidth to basestations at significantly lower cost per bit.

Toward A Timely Timing Solution

Mobile carriers already deploy Precision Time Protocol (PTP) as described in IEEE 1588 in their backhaul networks. It uses time-stamped packets to implement frequency and time/phase distribution and a protocol exchange to derive the actual time from the time-stamped packets.
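The protocol exchange can be illustrated with a minimal sketch of the standard two-way (delay request-response) calculation. The function and variable names here are illustrative only, timestamps are plain nanosecond integers, and the path is assumed to be perfectly symmetric:

```python
def ptp_offset_and_delay(t1, t2, t3, t4):
    """Estimate slave clock offset and mean path delay from one PTP exchange.

    t1: master sends Sync            (read on the master clock)
    t2: slave receives Sync          (read on the slave clock)
    t3: slave sends Delay_Req        (read on the slave clock)
    t4: master receives Delay_Req    (read on the master clock)
    All values are in nanoseconds. Assumes equal delay in both directions.
    """
    master_to_slave = t2 - t1   # path delay + offset
    slave_to_master = t4 - t3   # path delay - offset
    mean_path_delay = (master_to_slave + slave_to_master) / 2
    offset_from_master = (master_to_slave - slave_to_master) / 2
    return offset_from_master, mean_path_delay


# Hypothetical exchange: slave runs 150 ns ahead, one-way delay is 1000 ns.
offset, delay = ptp_offset_and_delay(t1=0, t2=1150, t3=5000, t4=5850)
print(offset, delay)  # 150.0 1000.0
```

The slave subtracts the estimated offset from its own clock. Everything that follows in this article concerns what happens when the symmetric, constant-delay assumption does not hold.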

By necessity, carriers roll out infrastructure upgrades incrementally, which means that some basestation nodes are or will be implementing PTP while others are not. Sending PTP traffic through PTP-unaware network elements creates packet delay variation (PDV) that’s cumulative across the network. An intelligent low-pass filtering algorithm in the PTP ordinary clock slave can slowly filter out these delays.
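As a rough illustration of the filtering idea, the sketch below applies a first-order low-pass filter (an exponential moving average) to a stream of noisy offset estimates. This is a deliberately simple stand-in, not the algorithm any particular PTP slave uses. The function name and the `alpha` smoothing factor are assumptions made for the example.

```python
def low_pass(samples, alpha=0.05):
    """First-order IIR low-pass filter (exponential moving average).

    samples: raw offset estimates in nanoseconds, one per PTP exchange.
    alpha:   smoothing factor in (0, 1]; smaller values reject more PDV
             noise but take longer to settle.
    Returns the list of filtered estimates.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    filtered = []
    estimate = None
    for x in samples:
        # Seed the filter with the first sample, then move a fraction
        # alpha of the way toward each new sample.
        estimate = x if estimate is None else estimate + alpha * (x - estimate)
        filtered.append(estimate)
    return filtered


# Hypothetical input: true offset of 100 ns with +/-50 ns of alternating PDV noise.
noisy = [100 + (50 if i % 2 else -50) for i in range(200)]
smoothed = low_pass(noisy)
```

The trade-off is visible in the single parameter: a smaller `alpha` suppresses more of the delay variation, but the estimate takes correspondingly longer to converge.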

Unfortunately, filtering allows the slave to recover the frequency but not the equally important ToD and phase alignment parameters. Moreover, the long time constants of the filtering algorithm result in very long frequency acquisition/settling times and also the likelihood of long-term phase wander. Most of the “secret sauce” in intelligent PTP frequency algorithms involves keeping this long-term wander in check.

A good deal of technology backfilling is being applied in attempts to correct the problems of very long frequency acquisition/settling times and the network’s susceptibility to long-term phase wander. Standards-making bodies are also working toward a more comprehensive solution. But the upshot is that attempts to tweak basic PTP can provide a viable frequency-only solution but not a synchronization solution for phase and ToD.

As a result, the only way to attain accurate ToD and phase delivery over the network with GPS out of the picture will be to provide “PTP on-path support.” All network elements between the PTP master clock and the PTP ordinary clock slaves must contribute to eliminating or significantly reducing the impairments introduced by static asymmetries in network links as well as the PDV caused by variations in packet traffic through these elements.

Problem Analysis

The first difficulty arises because the timing information is encapsulated in standard IP data packets and passed between network nodes. The same PDVs that produce jitter in packet networks also introduce timing inaccuracies in the recovered clock.

Clock recovery is problematic as well. PTP’s timing recovery mechanism works on the assumption that there is fixed and symmetric latency between network nodes, a condition that’s rarely true in real-world networks. As previously mentioned, synchronization error caused by PDV and asymmetry is cumulative along the path between the network node generating the master clock and the basestation recovering the clock.
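The effect of asymmetry can be shown with a small sketch. It simulates one two-way exchange over a path with different forward and reverse delays and returns the offset the slave would compute under the symmetric-path assumption. The function name and the delay values are hypothetical.

```python
def apparent_offset(true_offset_ns, delay_ms_ns, delay_sm_ns):
    """Offset a slave computes when it assumes a symmetric path.

    true_offset_ns: how far the slave clock is really ahead of the master.
    delay_ms_ns:    actual master-to-slave delay.
    delay_sm_ns:    actual slave-to-master delay.
    """
    t1 = 0                                      # Sync sent (master clock)
    t2 = t1 + delay_ms_ns + true_offset_ns      # Sync received (slave clock)
    t3 = t2 + 1000                              # Delay_Req sent (slave clock); gap is arbitrary
    t4 = (t3 - true_offset_ns) + delay_sm_ns    # Delay_Req received (master clock)
    return ((t2 - t1) - (t4 - t3)) / 2


# A perfectly synchronized slave on a path that is 400 ns slower downstream
# than upstream appears to be 200 ns off.
print(apparent_offset(0, delay_ms_ns=1200, delay_sm_ns=800))  # 200.0
```

The computed offset is wrong by half the difference between the two one-way delays, and no amount of averaging at the slave removes a static error of this kind.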

The problem is even more complicated when microwave links are used for backhaul. Unlike wired connections, microwave links have latency that changes dynamically as a function of the modulation format being used or as atmospheric properties vary with changing weather.

A second set of issues stems from the incremental approach carriers must employ for infrastructure upgrades. Without careful planning, delivering the timing precision required to support advanced wireless technologies during these upgrades can quickly become difficult and expensive.

Phase and ToD synchronization are required to eliminate the timing errors created by large asymmetric queuing delays common in switches and routers as well as asymmetric modem delay variations for microwave and wired links. But as discussed earlier, the utilization of GPS for timing synchronization is problematic at best.

IEEE 1588v2 To The Rescue

To address the challenge, later versions of the IEEE 1588 standard defined two additional clock types: the boundary clock (BC) and the transparent clock (TC). Both provide on-path support to network elements in a packet-based network. A revised standard, IEEE 1588v2, incorporates the BC and TC concepts.

A node that implements a BC regenerates the timing based on the timestamps it receives. In addition to timestamps, a BC requires timestamp correction, a reliable PDV filtering algorithm, and an IEEE 1588v2-aware timing complex that can be synchronized to the network. This means that implementing a BC node requires a very precise oscillator, a digital phase-locked loop (PLL), and a microprocessor. BCs are expensive and complex to implement, but they can perform the port-level accurate timestamping required to meet LTE and LTE-Advanced synchronization requirements.

TC nodes are simpler and less expensive to implement because they simply forward incoming timestamps after correcting errors introduced in the node. A TC can also reuse the physical layer (PHY) or switch that it encounters in the datapath, provided the device is 1588v2-compliant (i.e., it has the added logic required to support accurate timestamping and timestamp correction mechanisms).
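A minimal sketch of the TC idea follows, assuming a simplified message model: each node measures how long the packet spent inside it (its residence time) and adds that to a running correction carried with the message. The class and function names are illustrative, and the correction is kept in whole nanoseconds rather than in the on-the-wire format.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PtpEvent:
    """Simplified PTP event message (hypothetical model, not the wire format)."""
    origin_timestamp_ns: int
    correction_ns: int = 0


def transparent_clock_forward(msg, ingress_ns, egress_ns):
    """Forward a message through a TC, accumulating its residence time.

    ingress_ns and egress_ns are read from the node's local clock as the
    packet enters and leaves, so only their difference matters.
    """
    residence = egress_ns - ingress_ns
    if residence < 0:
        raise ValueError("egress timestamp precedes ingress timestamp")
    return replace(msg, correction_ns=msg.correction_ns + residence)


# Two TCs on the path, holding the packet for 300 ns and 1200 ns.
msg = PtpEvent(origin_timestamp_ns=0)
msg = transparent_clock_forward(msg, ingress_ns=100, egress_ns=400)
msg = transparent_clock_forward(msg, ingress_ns=1000, egress_ns=2200)
print(msg.correction_ns)  # 1500
```

The slave subtracts the accumulated correction from its measured delay, so variable queuing time inside TC-equipped nodes no longer shows up as PDV.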

A port-based PHY solution is sufficient to implement a highly accurate TC node. Several PHY and switch silicon solutions available today incorporate timestamping correction that compensates for network PDV and asymmetry. At least one of these solutions, Vitesse’s VeriTime chipset, includes compensation mechanisms that can reliably maintain a 1588v2 timing domain across microwave links. Clearly, any cost-effective solution to the ToD dilemma maximizes the utilization of TCs and minimizes the number of BCs in the network.

Network Deployments

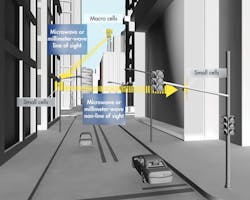

Care must be taken when creating a 4G/LTE backhaul architecture that can be deployed incrementally and still maintain timing integrity during an incremental upgrade/build-out. Since 4G/LTE will markedly expand the use of picocells and femtocells to ensure coverage and performance, TCs must play an important role. They are well suited for picocell synchronization in outdoor environments and synchronization down to the femtocell in large indoor multi-floor installations (Fig. 2).

For picocells, TCs can be carried over microwave and millimeter-wave links to satisfy TD-LTE and LTE-A requirements while eliminating GPS/GNSS signals or fiber links. The simpler hardware of TCs fits the model of the small-cell basestation requiring small footprints, low cost, and minimal power consumption.

Low power consumption is particularly important for wireless operators with LTE licenses in higher frequency bands (1900 MHz and the 2.x-GHz bands) with limited indoor coverage. Indoors, the access network itself can generate 1588v2 timing, or in some instances a GPS antenna on the roof of the building can generate time packets for synchronization services inside the building. Furthermore:

• BCs should be deployed throughout the network strategically to reduce the traffic load on the grandmaster clock.

• Timestamping should be performed at the PHY ingress/egress point to eliminate serialization and deserialization delay asymmetries.

• Microwave backhaul equipment should position TCs across each link to minimize time errors from the modem and adaptive modulation schemes that can easily exceed the overall time error budget of the network.

• Accurate timestamping is best done at the PHY I/O interface. With TCs implemented in the backhaul network, timestamping at the PHY I/O interface can lead to simpler servo algorithms that translate to cost-effective PTP clock slave implementations.
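The time error budget mentioned in these guidelines can be checked with simple arithmetic. The sketch below sums worst-case per-hop time errors and compares the total with a budget. The per-hop figures are hypothetical placeholders, not measurements. Adding worst-case errors linearly is a conservative assumption, and the 500-ns budget is the LTE-A/MIMO figure cited earlier.

```python
def check_time_error_budget(per_hop_errors_ns, budget_ns):
    """Sum worst-case per-hop time errors and compare with the budget.

    Returns (total_error_ns, fits_in_budget). Errors are added linearly,
    which is a conservative worst-case assumption.
    """
    total = sum(abs(e) for e in per_hop_errors_ns)
    return total, total <= budget_ns


BUDGET_NS = 500  # LTE-A/MIMO requirement of 0.5 us

# Hypothetical path: five wired hops plus two microwave links without TCs.
without_tcs = [20, 20, 20, 20, 20, 400, 400]
# Same path with TCs across the microwave links (hypothetical residual error).
with_tcs = [20, 20, 20, 20, 20, 20, 20]

print(check_time_error_budget(without_tcs, BUDGET_NS))  # (900, False)
print(check_time_error_budget(with_tcs, BUDGET_NS))     # (140, True)
```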

By following these guidelines, a CAPEX-constrained (capital expenditures) operator can move a few key network elements to BC support, paving the way for use of efficient, less costly network elements that use TCs during high-volume deployments. This strategic approach to backhaul timing architectures allows carriers to quickly roll out networks of smaller cells that improve their coverage and capacity in a cost-effective manner.

Conclusion

The most cost-effective upgrade strategy for LTE-A and future small-cell networks is to deploy distributed TCs liberally and BCs only where necessary to segment timing domains. The use of TCs can increase timing accuracy to the nanosecond range, as shown by Vitesse in a recent submission to the ITU-T standards committee. Silicon advances available today will ensure that such solutions carry only a nominal premium over non-IEEE 1588v2-aware systems designed for 3G networks.