Artificial Intelligence Arrives at the Edge

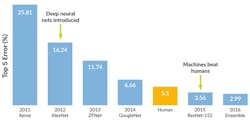

Research into artificial intelligence (AI) has made some mind-blowing strides, expanding the usefulness of computers. Machines can now do certain tasks faster and more accurately than humans. A great example is the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), an image-classification contest whose leading entries use a type of AI called machine learning (ML). Back in 2012, AlexNet won this contest as the first winning entry to use a deep neural network trained on GPUs. By 2015, ResNet-152 was classifying images more accurately than humans (Fig. 1).

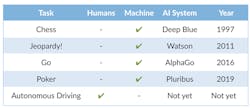

Games offer other examples where computers outperform humans. Figure 2 summarizes several cases in which machines have beaten human champions, along with one non-gaming task where humans still do better.

Machine learning is clearly providing amazing new capabilities. They are essential for applications such as the smart home, smart retail, the smart factory, and the smart city, and a wide range of businesses can already leverage them today. This is reflected in the dramatic growth of ML cloud services from providers such as Amazon (AWS SageMaker), Microsoft (Azure ML), and Google (Cloud ML Engine).

Push for the Edge

Until recently, ML has been concentrated in the cloud, where huge centralized data centers provide vast compute and storage resources. This is shifting rapidly toward the edge for several reasons, including:

- The cost of cloud processing, storage, and bandwidth makes it impractical to send data to the cloud for every AI-enabled decision

- A better user experience requires making fast AI-enabled decisions at the edge

- Privacy and security concerns limit what data is stored in the cloud

- Higher reliability, because decisions made locally do not depend on a network connection to the cloud

Together, these factors make the edge the obvious place to run ML processing for many applications. That's why NXP announced the i.MX 8M Plus applications processor, billed as the first i.MX applications processor with a dedicated machine-learning accelerator.

The i.MX 8M Plus is built on a 14-nm FinFET process for low power with high performance. Its new features include dual-camera image signal processors (ISPs) that support either two low-cost HD camera sensors or one 4K camera sensor for face-, object-, and gesture-recognition ML tasks. It also integrates an independent 800-MHz Cortex-M7 core for real-time tasks and low-power support, H.265 and H.264 video encode and decode, an 800-MHz HiFi4 DSP, and eight pulse-density-modulation (PDM) microphone inputs for voice recognition. Industrial IoT features include Gigabit Ethernet with time-sensitive networking (TSN), two CAN-FD interfaces, and error-correcting code (ECC).

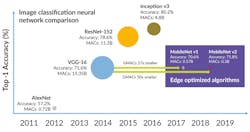

Helping to accelerate the machine-learning-at-the-edge trend, data scientists are optimizing specific algorithms for the resource-constrained devices being deployed at the edge. MobileNet, developed by Google, is a family of image-classification models designed to maintain high accuracy while significantly reducing the compute resources required.

Figure 3 shows the dramatic reduction in processing. Moving from the VGG-16 model to the MobileNet v2 model cuts the compute needed at the edge by roughly 50X. This makes sophisticated ML processing feasible on resource-constrained edge hardware.
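Much of that saving comes from one building block: the depthwise separable convolution introduced in MobileNet v1 and reused inside MobileNet v2's blocks. The sketch below counts multiply-accumulates (MACs) for one standard convolution layer versus its depthwise separable replacement. It assumes square feature maps, stride 1, and "same" padding; the parameter names (d_f, d_k, m, n) follow the MobileNet v1 paper's notation, and the 14x14x512 layer shape is purely illustrative, not taken from Figure 3. Per layer, the cost ratio works out to 1/n + 1/d_k², so a 3x3 kernel saves roughly 8x to 9x; the ~50X whole-network figure also reflects other architectural choices.

```python
def standard_conv_macs(d_f: int, d_k: int, m: int, n: int) -> int:
    """MACs for a standard d_k x d_k convolution.

    d_f: output feature-map height/width (square map, stride 1, 'same' padding)
    d_k: kernel height/width
    m:   number of input channels
    n:   number of output channels
    """
    return d_k * d_k * m * n * d_f * d_f


def depthwise_separable_macs(d_f: int, d_k: int, m: int, n: int) -> int:
    """MACs for a depthwise d_k x d_k conv followed by a 1x1 pointwise conv."""
    depthwise = d_k * d_k * m * d_f * d_f  # one d_k x d_k filter per input channel
    pointwise = m * n * d_f * d_f          # 1x1 conv mixes m channels into n outputs
    return depthwise + pointwise


if __name__ == "__main__":
    # Illustrative layer shape (an assumption): 14x14 map, 3x3 kernel, 512 -> 512 channels.
    std = standard_conv_macs(14, 3, 512, 512)
    sep = depthwise_separable_macs(14, 3, 512, 512)
    print(f"standard:  {std:,} MACs")   # standard:  462,422,016 MACs
    print(f"separable: {sep:,} MACs")   # separable: 52,283,392 MACs
    print(f"reduction: {std / sep:.1f}x")  # reduction: 8.8x
```

Most of the remaining cost sits in the 1x1 pointwise step, which is one reason NPUs and optimized libraries focus heavily on fast 1x1 convolutions.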

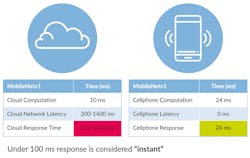

Running MobileNet v1 at the edge, on a mobile phone, is also significantly faster than running it in the cloud. The difference comes from eliminating cloud network latency, which can easily add anywhere from 200 ms to more than 1.4 seconds and significantly delay the response. The goal is a response under 100 ms, which appears instantaneous to the user (Fig. 4).
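The latency argument can be checked with simple arithmetic. In this minimal sketch, the 100-ms budget and the 200-ms and 1,400-ms network delays come from the text above, while the inference times (30 ms on the device, 10 ms on a faster cloud server) are hypothetical values chosen only for illustration; the function names are likewise hypothetical.

```python
RESPONSE_BUDGET_MS = 100.0  # target for a response that feels instantaneous


def total_latency_ms(inference_ms: float, network_ms: float = 0.0) -> float:
    """End-to-end response time: inference plus any network round trip."""
    return inference_ms + network_ms


def meets_budget(latency_ms: float, budget_ms: float = RESPONSE_BUDGET_MS) -> bool:
    """True if the response arrives under the budget."""
    return latency_ms < budget_ms


if __name__ == "__main__":
    # Hypothetical inference times; network delays are the range quoted in the text.
    cases = [
        ("edge", total_latency_ms(inference_ms=30.0)),
        ("cloud, best case", total_latency_ms(inference_ms=10.0, network_ms=200.0)),
        ("cloud, worst case", total_latency_ms(inference_ms=10.0, network_ms=1400.0)),
    ]
    for name, t in cases:
        verdict = "feels instantaneous" if meets_budget(t) else "noticeable delay"
        print(f"{name:18s} {t:7.1f} ms  {verdict}")
```

Because even the best-case network delay alone exceeds the 100-ms budget, no amount of extra server speed can bring the cloud path under it; only removing the round trip does.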

Figure 5 illustrates some of the many applications enabled by running machine learning at the edge.

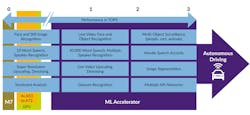

As shown in Figure 6, each of these use cases requires a certain level of performance, which in turn determines the class of hardware needed to run it.

It makes sense to run ML applications at the edge for the reasons already mentioned. However, a few more requirements must be met to have a successful deployment:

- Ecosystem for ML developers—make it easy to implement

- Hardware security—guarantee privacy and security

- New, innovative hybrid SoC architectures—provide cost-effective solutions

- Scalable and secure edge deployment—make it easy to deploy

Ecosystem for ML Development: eIQ™

Breakthrough ML applications require a design and development ecosystem that's up to the task. To that end, NXP created eIQ™ (Edge Intelligence), a tools environment that provides what customers need to deploy their ML technology. The eIQ ML software-development environment (Fig. 7) includes inference engines and libraries built on advances in open-source machine-learning technologies.

Already deployed across a broad range of advanced AI applications, NXP's eIQ software brings together inference engines, neural-network compilers, and optimized libraries to simplify system-level application development and enable machine-learning algorithms on NXP processors. eIQ supports a variety of ML processing elements, including Arm Cortex-A and Cortex-M processors, GPUs (graphics processors), DSPs, and ML accelerators.

NXP has deployed and optimized open-source technologies such as TensorFlow Lite, OpenCV, CMSIS-NN, Glow, and Arm NN for its popular i.MX RT and i.MX applications processors. These are accessed through the company's MCUXpresso and Yocto (Linux) development environments to provide seamless support for application development. eIQ software comes with sample applications for object detection and voice recognition that provide a starting point for machine learning at the edge.

The eIQ Auto toolkit, a specialized component of the eIQ machine-learning software-development environment, is an Automotive SPICE-compliant deep-learning toolkit for NXP's S32V2 processor family and for advanced driver-assistance system (ADAS) development. It supports functional safety, including ISO 26262 up to ASIL-D, IEC 61508, and DO-178.

Edge Security: EdgeLock™

Security at the edge is critical. Needed capabilities include a secure-boot trust anchor, on-chip cryptography, secure provisioning, mutual device authentication, secure device management, over-the-air (OTA) secure updates, and lifecycle management.
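To make the "secure OTA update" capability concrete, here is a minimal sketch of the verify-before-install step a device might run. It is not EdgeLock's API: every name is hypothetical. Production secure-boot and OTA schemes typically verify an asymmetric signature (such as ECDSA or RSA) against a key anchored in hardware, but Python's standard library has no asymmetric signatures, so this sketch swaps in HMAC-SHA256 with a shared, factory-provisioned key purely to show the flow. The version check illustrates anti-rollback, a common lifecycle-management practice that is an assumption here.

```python
import hashlib
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class UpdatePackage:
    """Hypothetical OTA package: version, firmware image, and authentication tag."""
    version: int
    firmware: bytes
    tag: bytes


def _message(version: int, firmware: bytes) -> bytes:
    # The tag covers both version and image, so neither can be swapped independently.
    return version.to_bytes(4, "big") + firmware


def sign_update(key: bytes, version: int, firmware: bytes) -> UpdatePackage:
    """Server side (sketch): compute an HMAC-SHA256 tag over version + image."""
    tag = hmac.new(key, _message(version, firmware), hashlib.sha256).digest()
    return UpdatePackage(version, firmware, tag)


def verify_update(key: bytes, installed_version: int, pkg: UpdatePackage) -> bool:
    """Device side (sketch): accept only authentic images newer than the installed one."""
    expected = hmac.new(key, _message(pkg.version, pkg.firmware), hashlib.sha256).digest()
    if not hmac.compare_digest(expected, pkg.tag):  # constant-time comparison
        return False  # tampered image or wrong key
    return pkg.version > installed_version  # reject rollback to older firmware


if __name__ == "__main__":
    key = b"hypothetical-device-key"
    pkg = sign_update(key, version=7, firmware=b"new firmware image")
    print(verify_update(key, installed_version=6, pkg=pkg))       # True
    tampered = UpdatePackage(pkg.version, pkg.firmware + b"\x00", pkg.tag)
    print(verify_update(key, installed_version=6, pkg=tampered))  # False
    print(verify_update(key, installed_version=8, pkg=pkg))       # False (rollback)
```

Keeping the key inside a secure element, rather than in application memory as this sketch does, is what hardware-backed security adds.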

To support this, NXP created the EdgeLock portfolio, delivering secure elements, secure authenticators, and embedded security for applications processors and microcontrollers. EdgeLock brings integrity, authenticity, and privacy to the edge node and provides security from the edge to the gateway and the cloud.

Affordable Edge AI

eIQ brings ML to NXP's existing line of SoCs, leveraging the CPU, GPU, and DSP. However, even the fastest CPUs are inefficient at executing highly complex neural networks. Going forward, the company is creating a new family of hybrid AI SoCs, for both applications processors and microcontrollers, that combine a state-of-the-art embedded SoC with the latest neural-processing-unit (NPU) hardware for AI/ML. These devices preserve existing SoC applications while adding the parallel compute power of an ML accelerator.

Future

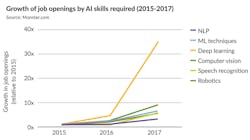

The pace of change in the AI landscape is accelerating. Figure 8, from the AI Index 2018 report, shows the growth in deep-learning job openings, and Figure 9 shows how often AI and machine learning are mentioned on company earnings calls.

AI and machine learning are creating a seismic shift in the computer industry that will empower and improve our lives. Taking them to the edge will accelerate our path to a better tomorrow.