Neural-Network Compiler Adds a Glow to Micros

These days, neural networks (NNs) are treated as synonymous with machine learning (ML) and artificial intelligence (AI), even though they’re only part of these arenas. Still, deep neural networks (DNNs) are what’s hot, and they’re driving adoption in everything from health monitoring to stock trading. They’re making self-driving cars practical and allowing motor controllers to provide predictive-maintenance information to the cloud.

Today, ML hardware acceleration garners the bulk of the mindshare, but lowly micros without it can also handle ML chores, provided those chores are less computationally demanding. One of the tools needed for this to occur is a compiler that takes an ML model and turns it into code. This is where the open-source Glow compiler comes into play.

The Glow compiler, originally developed by Facebook, is part of the PyTorch ML framework. It runs the gamut of hardware and software platforms, from the cloud, to Microsoft Windows on the desktop, to micros controlling motors.

NXP added support for Glow in its eIQ Machine Learning Software. The company’s implementation of the Glow compiler targets Arm Cortex-M cores and Cadence Tensilica HiFi 4 DSPs. This includes its i.MX RT series of crossover MCUs. The NXP support incorporates platform-specific optimizations that take advantage of ML hardware acceleration.

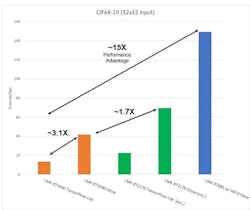

The Glow NN compiler outperforms TensorFlow Lite for comparable ML models (see figure). The i.MX RT685 includes a Tensilica HiFi 4 DSP that delivers a major performance advantage over hardware without ML acceleration. Part of the boost comes from use of the Neural Network Library (NNLib) from Cadence, the source of the Tensilica HiFi 4 DSP architecture.

The MCUXpresso SDK that supports the hardware and Glow compiler is available for free from NXP. PyTorch support includes ONNX models and Microsoft’s MMdnn toolset.

As with most embedded applications, developers need to determine which algorithms and models suit their solution. They also must pinpoint what is achievable given the constraints of the target platform, the time available to craft a solution, the requirements of the application, and the capabilities of the developer and tools. This job becomes more challenging when one considers the range of possible ML models, NN compilers, and other components involved in building such a system.

Using an NN is no different than using something like a fast Fourier transform (FFT): Both produce results that can be utilized by another portion of the application. Granted, NN tasks are often more computationally demanding, but this simply means that the appropriate tools and systems must be employed. The big difference with NN solutions is the range of possibilities, tradeoffs, and optimizations available to a developer. The NXP Glow compiler support is just one aspect of this, but it can provide a performance boost that may make hardware acceleration unnecessary or make something like voice or image recognition possible where it wouldn’t have been before.
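To make the FFT comparison concrete, here is a minimal Python sketch, not taken from Glow or the NXP tools. It treats a spectral transform and a tiny NN layer as interchangeable stages that hand a list of numbers to the rest of the application. Every name in it (`dft_magnitudes`, `make_dense_stage`, `strongest_output`) and the weight values are hypothetical, and the naive DFT merely stands in for a real FFT routine.

```python
import cmath
import math
from typing import Callable, List, Sequence

# A "stage" is any routine that maps input samples to output values.
Stage = Callable[[Sequence[float]], List[float]]


def dft_magnitudes(samples: Sequence[float]) -> List[float]:
    """Naive O(n^2) DFT magnitudes for bins 0..n/2 (stand-in for an FFT)."""
    n = len(samples)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n)
                for t, x in enumerate(samples)))
        for k in range(n // 2 + 1)
    ]


def make_dense_stage(weights: Sequence[Sequence[float]],
                     biases: Sequence[float]) -> Stage:
    """Build a one-layer NN stage computing ReLU(W x + b).

    The weights are hypothetical; a real model would supply trained values.
    """
    def stage(samples: Sequence[float]) -> List[float]:
        return [
            max(0.0, sum(w * x for w, x in zip(row, samples)) + b)
            for row, b in zip(weights, biases)
        ]
    return stage


def strongest_output(stage: Stage, samples: Sequence[float]) -> int:
    """Application code: run a stage and return the index of its largest output."""
    outputs = stage(samples)
    return max(range(len(outputs)), key=outputs.__getitem__)


# One cycle of a cosine over 8 samples puts its energy in frequency bin 1.
tone = [math.cos(2 * math.pi * t / 8) for t in range(8)]
peak_bin = strongest_output(dft_magnitudes, tone)

# A two-output layer that sums the first and the last pair of inputs.
classifier = make_dense_stage([[1, 1, 0, 0], [0, 0, 1, 1]], [0.0, 0.0])
top_class = strongest_output(classifier, [0.1, 0.2, 0.9, 0.8])
```

The application-side function never needs to know which kind of stage it was given. That is the sense in which compiled NN code can slot into an embedded design the same way a signal-processing routine does.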