The Internet of Things (or Internet of Everything, as some call it) is the next step in connected technology. It will encompass new devices designed specifically for the IoT, as well as existing technologies upgraded to include Internet connectivity. The IoT is expected to number in the billions of devices, and every one of those devices will have one common trait: the need for memory.

The IoT represents what Micron calls the connected world, and includes myriad devices designed to enrich our life experiences. It covers virtually every element of the Internet and networking market segments (which is why Cisco likes to refer to the IoT as “The Internet of Everything”). The full range of use cases for IoT devices can be categorized into three main areas:

• Traditional Internet: This is the Internet we all know. It will be the traffic backbone for almost all new devices and many already-connected devices. PCs, smartphones, and tablets are part of the IoT.

• Industrial Internet: This is where most of the machine-to-machine (M2M) exchanges will take place and where sensors and other data transmission and receiving devices will reside. Here, embedded systems will be able to operate without user interaction, in everything from traffic lights and power grids, to city infrastructure and industrial machinery.

• Consumer Internet: This covers things like wearables and smart devices, where much of the growth will take place as new products emerge—smart refrigerators, advanced watches, and wrist devices, as well as visual devices (e.g., Google Glass and Oculus Rift).


The IoT will bring connectivity, communication, and data gathering to existing devices, such as cars and home appliances, and allow for massive data collection without any human interaction. For example, the IoT-enabled car of the future will feature dozens of sensors, providing drivers with an active and useful health/performance assessment at all times. Instead of useless “check engine” lights that leave you guessing at what may be wrong, cars of the future will alert drivers when the tire pressure is low or the muffler is about to fail, before anything actually fails or stops working.

Or take the IoT-enabled refrigerator of the future, which will alert users to food spoilage, food recalls, or a staple such as milk or eggs running low. Flat-panel manufacturers are also working to replace the solid door with a display, so that users can see inside the refrigerator without opening it and receive sale advertisements from the local market, along with other Internet information, right on the refrigerator door.

No wonder the tech industry is so focused on the IoT. On the business side, it represents a massive new market ripe for growth, as opposed to the relatively mature, slower-growth markets like PCs and smartphones. On the technology side, IoT represents a new wave of innovation to enable devices to collect data and act upon it in real time—or at least at a much faster rate. It also involves finding new ways to get more information about consumers, with a need to balance targeted advertising with privacy concerns.

The need for human interaction is the essential difference between the IoT and the Internet as we know it now. The way we currently use the Internet generally requires human involvement, whether it’s web browsing, checking email on a smartphone, or streaming video to a TV. In virtually all use cases, a human is required at one endpoint to initiate an interaction.

For the most part, the IoT doesn’t require human interaction. Human interaction or input is needed in some instances, but many IoT applications rely entirely on M2M communications facilitated by sensors and data-reading technology that requires no human intervention. Data is constantly gathered and sent back to a central server of some kind for analysis, for a variety of uses.

In the home, this could mean smart appliances that detect an impending failure or excessive power consumption, or a home security system that’s controllable with a smartphone.

The IoT promises a new and better world, but it’s being built almost from scratch. It will require a new generation of smaller, more power-efficient devices that can handle all types of data. At every step, it will be memory that drives the connected world. Whether it’s embedded and mobile devices or data centers, memory technologies enable the capture, transmission, analysis, and storage of data up and down the network infrastructure to power the IoT. Just as the Internet and its endless use cases have become an essential part of our everyday lives, so too will the IoT.

The IoT’s Multiple Interconnected Layers

As a concept, the IoT isn’t new. People have discussed it at conferences and across multiple industries since the late 1990s. A Procter & Gamble executive claims he coined the term in 1999. It took so long to come to market because, as with many advanced concepts, the technology had to catch up to the idea. The technology industry began trying to make tablets in the 1990s, but it wasn’t until 2010 that Apple finally got it right.

Such is the case with the IoT. Many technologies had to come of age before IoT was possible, including:

• Extreme miniaturization: Chips have shrunk over the years, but to make the IoT work, we needed something even smaller than what’s found in a smartphone. With process technology down to 14 nm and heading to 10 nm, chips are getting to the point of not being easily seen. Early in 2014, Freescale introduced an IoT-ready chip that could fit inside the divot of a golf ball.

• IPv6: For several years, networking engineers have sounded the alarm that IPv4 addresses are rapidly running out. The American Registry for Internet Numbers (ARIN) first raised the issue in 2007, because while IPv4 provides 4.3 billion addresses, many are unavailable to the public. IPv6 provides about 340 undecillion addresses, so in practice they should never run out.

• Wi-Fi and LTE ubiquity: For the IoT to be wireless, high-speed LTE will need to cover much of the nation, and Wi-Fi in homes will need to reach critical mass. Today, Wi-Fi is a standard feature on cable modems and LTE covers most of the nation. Verizon Wireless claims to cover 97% of America, while AT&T claims its LTE network covers about 300 million of the nation’s 320 million POPs (people within coverage).

• Scaled-out data centers: With 28 to 30 billion devices expected to constitute the IoT, the amount of data will be massive. The CSO of machine-data specialist Splunk told Gigaom, a research and news organization, that the average smart home will generate about 1 GB of data per week. This will put tremendous strain on data centers and will require far bigger data pipes and edge servers to handle and act upon all of the data coming in. The data center of the future will be much more active than traditional data centers, which often just serve as big data stores.
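The address-space figures quoted in the IPv6 item above follow directly from the width of an address: 32 bits for IPv4 and 128 bits for IPv6. The short Python sketch below simply reproduces that arithmetic; the variable names are illustrative only.

```python
# Address-space sizes follow from the address width in bits.
IPV4_BITS = 32
IPV6_BITS = 128

ipv4_addresses = 2 ** IPV4_BITS
ipv6_addresses = 2 ** IPV6_BITS

UNDECILLION = 10 ** 36  # short-scale undecillion

print(f"IPv4: {ipv4_addresses:,} addresses (~{ipv4_addresses / 1e9:.1f} billion)")
print(f"IPv6: ~{ipv6_addresses // UNDECILLION} undecillion addresses")
print(f"IPv6 addresses per IPv4 address: 2**{IPV6_BITS - IPV4_BITS}")
```

Python integers have arbitrary precision, so the 128-bit value is computed exactly rather than as a floating-point approximation.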

The IoT consists of four interconnected technology layers:

• Embedded: Embedded IoT refers to things like home appliances, cars, machinery, airplanes, and home electronics. According to research analyst IDC, data from embedded systems alone currently accounts for just 2% of the digital universe, but that number is expected to rise to 10% by 2020.

• Mobile: Mobile connected devices will include phones, tablets, watches, GPS units, wearables, and cars (some overlap from the embedded side), and will constitute the bulk of IoT devices. IDC forecasts that data generated by mobile devices and people will reach 27% of the digital universe in 2020, and that more than 75% of mobile devices will support the IoT by 2020.

• Data center: Research analyst Gartner has said that the IoT will fundamentally transform how data centers operate, because they will have to accept considerably more data and act upon it with expedience. This will mean a number of changes to how data centers are designed, built, and operated. Security must be increased due to the sensitivity of data. Real-time business processes will be needed. Data centers will have to employ big-data styles of computing, which isn’t typical of today’s average data center. And storage management will have to adapt to handle increased volume.

• The network: Just as important as the devices and data centers is the network infrastructure, which will facilitate the connection between the devices and the cloud. The Internet, as it’s currently configured, serves us well, but changes are needed to handle the massive scale of data generated by the IoT.

IPv6 will be a big help, giving billions of newly connected devices unique IP addresses. However, other advances must be made, particularly related to the security of wireless devices. The network of the IoT isn’t just the Internet; it’s Wi-Fi, LTE, 5G cellular, Bluetooth, and more. Stories have already surfaced about Bluetooth device hacking, and many enterprises now advise their staff not to use their devices at coffee shops and other public Wi-Fi locations.

Storage is also paramount because the IoT is about gathering immense amounts of data. Whether that data is streamed immediately to a central server or gathered and analyzed before transmission, storage is key, and in many instances, that storage is likely memory, either volatile or nonvolatile. This represents a key IoT area for Micron.

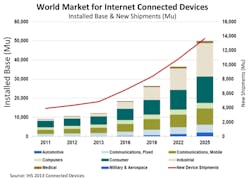

Gartner estimates there will be nearly 26 billion IoT devices by 2020, while ABI Research estimates more than 30 billion devices by that same timeframe. This represents an enormous opportunity for any company that becomes a leader in the IoT field—one that can understand and leverage advances in connectivity and network bandwidth to develop innovative devices and new applications.

Advancing The IoT With Memory Technology

A rapidly evolving IoT application environment also means rapidly evolving memory requirements. Shrinking IoT device form factors intensify processing, performance, and data storage demands. As a result, developers realize they must rethink how their design goals can be achieved by using memory in new and innovative ways. In many cases, this involves using new, or less familiar, memory technologies and examining memory much earlier in their design cycles.

High-Speed Serial NOR Flash

IoT sensors will need to be different than today’s everyday sensors and embedded processors, because they must be kept in live mode all of the time rather than spend time in standby mode, waiting to be awakened. This demands chips that can process data as well as handle its constant input and output. Thus, high-speed flash-memory technologies will be required to handle high-performance code execution and storage for instant-on applications.

MCPs and e·MMC

Many IoT devices will use multichip-package (MCP) designs that incorporate the CPU, GPU, memory, and flash storage in one package. They’re being optimized by CPU makers for this marketplace because the density and power requirements differ from those of smartphones or tablets, which also use MCPs.

Flash memory must be present in an MCP for any long-term storage. Heavy-duty data capture requires data analytics to decide what to discard and what to send to the cloud. To handle this, Micron is working on e·MMC and eMCP (an MCP with low-power memory). Specifically, e·MMC is managed NAND flash memory with a controller in a BGA package that operates over a –25 to 85°C temperature range. Currently, e·MMC densities range from 2 to 64 GB, but higher-density products that draw less power are in the works.
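Deciding what to discard and what to send to the cloud can be as simple as a deadband filter running on the device. The Python sketch below is one possible illustration, not a description of any Micron product: the function name `deadband_filter`, the threshold, and the sample readings are all hypothetical.

```python
from typing import Iterable, Iterator, Optional


def deadband_filter(readings: Iterable[float], threshold: float) -> Iterator[float]:
    """Yield only readings that differ from the last forwarded value
    by more than `threshold`; everything else is discarded locally."""
    last_sent: Optional[float] = None
    for value in readings:
        if last_sent is None or abs(value - last_sent) > threshold:
            last_sent = value
            yield value


# Hypothetical temperature samples captured on the device.
samples = [20.0, 20.1, 20.05, 21.5, 21.6, 19.0]
to_cloud = list(deadband_filter(samples, threshold=1.0))
print(to_cloud)  # [20.0, 21.5, 19.0]
```

Only three of the six samples leave the device in this example. The rest never need to be transmitted, although a real design might still buffer them in local flash.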

LPDDR4

Another important development is LPDDR4, or low-power DDR4 memory. LPDDR4 is just emerging onto the market, offering much higher performance at a reduced supply voltage. LPDDR3 ran at about 1.5 V and maxed out at about 2133-MHz performance. LPDDR4 offers up to 3200-MHz performance at 1.2 V.

LPDDR3 performance is more than adequate for most IoT scenarios. With 64-bit CPUs, however, LPDDR3 can’t properly feed data to the CPU without increasing the power draw. It is possible for LPDDR3 memory to provide the required bandwidth, but it comes at a cost. LPDDR4 offers the necessary bandwidth at lower power for servers as well as embedded devices.
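To put the speed figures above in terms of bandwidth, theoretical peak bandwidth is the transfer rate multiplied by the bus width. The Python sketch below rests on two assumptions that are not from the article: the quoted 2133 and 3200 figures are treated as mega-transfers per second, and a 32-bit data bus is chosen purely for illustration.

```python
def peak_bandwidth_gb_per_s(transfer_rate_mtps: float, bus_width_bits: int) -> float:
    """Theoretical peak bandwidth in GB/s (10**9 bytes per second)."""
    bytes_per_transfer = bus_width_bits / 8
    return transfer_rate_mtps * 1e6 * bytes_per_transfer / 1e9


BUS_WIDTH_BITS = 32  # assumption for illustration; not stated in the article

lpddr3 = peak_bandwidth_gb_per_s(2133, BUS_WIDTH_BITS)
lpddr4 = peak_bandwidth_gb_per_s(3200, BUS_WIDTH_BITS)

print(f"LPDDR3 peak: {lpddr3:.2f} GB/s")
print(f"LPDDR4 peak: {lpddr4:.2f} GB/s")
print(f"Ratio: {lpddr4 / lpddr3:.2f}x")
```

At equal bus width the ratio depends only on the transfer rates, so the roughly 1.5x gain holds whatever width a given design actually uses.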

Because LPDDR4 has a different interface from LPDDR3, chipset vendors are currently wrestling with the architectural change. This will drive new form factors for chipsets and devices.

Flash storage

The SSDs in your PC, tablet, or smartphone are nothing compared to what’s coming for the data center. Chip density is increasing, and the future promises storage capacity that will surpass hard drives. For example, with every NAND generation, Micron doubles the number of gigabytes per wafer and increases the gigabits per die.

NAND currently can hold up to 128 Gb per die, and is making the move to 256 Gb. When a 512-Gb die finally arrives, it could mean an SSD that holds as much as 25 TB—no single hard drive comes even remotely close to that amount. It’s achieved by stacking 8 to 16 die in one packaged part, which adds up to 1 TB of capacity. Put 16 parts on a board and you’re talking about a 16-TB flash board.
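The capacity arithmetic in the paragraph above can be checked in a few lines of Python. The sketch assumes binary units (8 Gb per GB, 1024 GB per TB) and uses the 512-Gb die and the 16-die, 16-part maximums mentioned in the text.

```python
GBIT_PER_DIE = 512       # future die density cited above
DIE_PER_PACKAGE = 16     # upper end of the 8-to-16 die stack
PACKAGES_PER_BOARD = 16  # parts on one board

GBIT_PER_GBYTE = 8
GBYTE_PER_TBYTE = 1024   # assumption: binary terabytes

package_gbyte = GBIT_PER_DIE * DIE_PER_PACKAGE // GBIT_PER_GBYTE
package_tbyte = package_gbyte / GBYTE_PER_TBYTE
board_tbyte = package_tbyte * PACKAGES_PER_BOARD

print(f"One package: {package_gbyte} GB = {package_tbyte:.0f} TB")
print(f"One board:   {board_tbyte:.0f} TB")
```

With an 8-die stack instead, the same calculation gives 512 GB per package and 8 TB per board.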

Emerging Technologies

Most current technologies simply can’t handle the smaller form factors or data deluge of the IoT. LPDDR4, in its current incarnation, is an exception because it provides the bandwidth and power savings needed, with iterative improvements expected in the future. However, memory, in general, needs to become smaller and more power efficient.


To help meet those demands, Micron developed a DRAM technology called Hybrid Memory Cube (HMC), first announced in 2011. HMC delivers 15 times the bandwidth of a DDR3 module. It uses 3D packaging of multiple memory die stacked on top of each other, usually four or eight die per package, connected with through-silicon vias (TSVs) and microbumps.

HMC uses standard DRAM cells, but it has a completely different interface compared to DDR memory. The DRAM controller is in the CMOS base and the internal memory architecture was changed to improve bandwidth. It integrates more data banks than classic DRAM memory of the same size, allowing for denser memory.

In addition to a 15-fold performance increase, HMC consumes 70% less power than DDR3. It also offers five times the bandwidth of traditional DDR4.

HMC is primarily aimed at high-performance computing and networking that deal with massive amounts of I/O. For example, Intel’s Xeon Phi co-processor is among the first processor technologies to use HMC.

Another new technology from Micron, the Automata Processor (AP), is not a traditional CPU—it doesn’t use memory as a read/write storage device. Rather, memory forms the basis of a processing engine that analyzes information as it streams through a memory chip.

The big difference between the Automata Processor and a traditional CPU is that the AP doesn’t have a fixed execution pipeline. Instead, its 2D fabric of tiny processor elements works on thousands or even millions of different questions about data at the same time for massive parallelism. The AP sits right on the DIMM, so there’s no need for CPU-to-memory transfers or a CPU bus to slow things down.

Also, the AP is reprogrammable and reconfigurable, more like an FPGA in that regard. The chip essentially becomes the processor defined by the user, making it a self-operating machine that’s driven only by the data it receives, not by instructions.
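A rough software analogy for this data-driven style of processing is a nondeterministic finite automaton in which every active state examines each incoming symbol. The Python sketch below is only an analogy under stated assumptions: it is not Micron's programming interface, the function name `stream_matches` is hypothetical, patterns are assumed to be non-empty literal strings, and the inner loop visits states one by one where hardware would evaluate them simultaneously.

```python
from typing import Iterable, List, Set, Tuple


def stream_matches(patterns: List[str], stream: Iterable[str]) -> List[Tuple[int, str]]:
    """Return (end_offset, pattern) for every pattern occurrence in the stream.

    Each state is a pair (pattern_index, position). The set of active
    states is driven purely by the incoming symbols.
    """
    active: Set[Tuple[int, int]] = set()
    matches: List[Tuple[int, str]] = []
    for offset, symbol in enumerate(stream):
        # Re-arm every pattern's first state on each symbol (unanchored search).
        candidates = active | {(p, 0) for p in range(len(patterns))}
        next_active: Set[Tuple[int, int]] = set()
        for p, i in sorted(candidates):  # sorted only for deterministic output
            if patterns[p][i] == symbol:
                if i + 1 == len(patterns[p]):
                    matches.append((offset, patterns[p]))
                else:
                    next_active.add((p, i + 1))
        active = next_active
    return matches


result = stream_matches(["ab", "bc", "abc"], "xabcab")
print(result)  # [(2, 'ab'), (3, 'bc'), (3, 'abc'), (5, 'ab')]
```

All three patterns are tracked in a single pass over the data, and adding more patterns adds states rather than passes. That property is what the parallel fabric described above exploits.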

Summary

As companies develop new devices that take advantage of the IoT, they must ask what memory technology is best suited for their design, from the endpoint to the data center and everywhere in between. IoT innovations are happening fast and memory technologies are playing a vital role in building the ever-more connected world.

References:

RFID Journal, “That ‘Internet of Things’ Thing,” by Kevin Ashton, June 22, 2009

Gartner, “Forecast: The Internet of Things, Worldwide, 2013,” by Staff, December 12, 2013

ABI Research, Internet of Everything Research Service, by Staff, May 9, 2013