As the internet of medical devices grows exponentially, much of the attention has been given to the safety aspect, with less time devoted to protecting the private information transmitted and stored on these devices. However, that imbalance is shifting, as protecting patient data has become a critical concern. In fact, a KPMG 2015 healthcare and cybersecurity survey found that more than 80% of health plans and healthcare providers acknowledged that patient data had been compromised—even after making significant cyber-related investments.

This article discusses key security-related procedures developers need to adopt during the design process to harden the device, secure communications, and prevent malicious exploits. By implementing just a few of these precautions, software developers can design state-of-the-art connected medical devices that protect patient data and are safe for all to use (Fig. 1).

In these days of Internet of Things (IoT) proliferation, medical-device manufacturers are working hard to simultaneously address three trends:

1. The integration of multicore SoCs into their designs.

2. Adoption of Linux as an operating system.

3. Meeting safety and security requirements brought on by the IoT.

Integration of Multicore SoCs

Many devices on the market today include both single-core and multicore processor architectures. Single-core designs ruled the day when embedded devices were purpose-built. With the advent of graphical user interfaces (GUIs), graphics processing units (GPUs), faster and more powerful silicon, and the need for a system to process multiple tasks simultaneously, hardware designers have made the transition to multicore designs.

When designing a medical IoT device, care must be taken to select the right silicon. Because tight development cycles squeeze the limited time available for security features, processors with built-in security IP blocks establish a solid foundation. Today’s system-on-chip (SoC) processors include hardware features to authenticate software prior to execution, encrypt data at rest, sign data to maintain integrity, and partition the device to prevent malware from entering the system. Processor security features to look for include secure boot, boot fuses, crypto engines, and device partitioning.

More Linux-Based Devices

With more platforms based on multicore SoCs, software developers are now able to follow the trend of the general embedded market by adopting Linux as the primary operating system. Linux is already used in a wide range of medical devices, from vital sign monitors and hospital bedside monitoring systems to complex imaging equipment.

A few reasons why Linux is gaining in popularity:

• The innovation and maturity of Linux have made it a practical choice in medical-device development.

• Free distributions are available, and they can be modified and redistributed under the GNU General Public License (GPL) and other licenses.

• Linux has been widely adopted by thousands of developers, making it easier to find developers who use the operating system regularly and know it intimately.

• Linux has a large ecosystem of semiconductor, board, and software providers who use proven toolchains and application programming interfaces (APIs).

• Linux is feature-rich, with tools, connectivity, security, and graphics support; the last is important for device UIs that require clarity and readability.

Medical devices are certified as a system by the U.S. FDA. The challenge is to design a system that can leverage Linux while meeting safety requirements.

With Linux being adopted more widely, the approach starting to take root is to treat it as Software of Unknown Provenance (SOUP), or off-the-shelf (OTS) software. As such, in most of these devices, Linux runs alongside an operating system that has been certified to the IEC 62304 standard and is tasked with the safety-related operations.

Safety-certified operating systems isolated from Linux help prevent software faults from cascading across the system and creating security vulnerabilities in unrelated sections of code. For example, Nucleus SafetyCert RTOS from Mentor Graphics allows developers to shorten the path to regulatory certification with a certified solution that includes all necessary documentation to develop mission-critical applications.

IoT Connectivity

Connectivity and IoT requirements are making these multicore, multi-OS systems, in which one of the OSs is Linux, more accessible to outside actors. Securing that connectivity should be everyone’s concern. All three trends are bringing safety and security concerns to the forefront and, in some instances, slowing adoption of the internet of medical devices.

But there are ways to turn these challenges around.

What’s Your Surface Area?

When addressing how to secure a medical IoT connected device, it’s important to look at the “surface area” vulnerable to attacks. The area of attack varies from device to device, but generally, the more sophisticated the device, the greater the area of attack. It’s also important to understand that most of the threats today target data not for the sake of data, but for the ability to manipulate the data.

One example of manipulating data would be an attack on an algorithm that changes the behavior of the very system that depends on it, such as a banking application at an ATM or a point-of-sale device at a retail checkout. Within the medical field, an example might be corruption of the sensor information that’s used to determine the patient’s vital signs. When it comes to protecting data, developers need to be aware of three critical stages: data at rest, data in use, and data in transit.

Let’s look at how developers can secure data in these three stages.

Data-at-Rest Protection Requirements

These requirements cover the device from the powered-down state, through boot, until it is fully operational in the “on” mode. Issues to consider in this stage might include:

Secure storage

Where is the bootable image stored? Is it on a medium that can be encrypted? Are there hardware-supported anti-tampering methods that alert the device, and if the device is being tampered with, is there a way to prevent it from booting into a vulnerable state? Have the executables been encrypted, or could anyone who gains access remove the EEPROM, dump the memory, or attempt to reverse-engineer the application?

Root of trust

Is the SoC used in the hardware platform capable of providing a root of trust? If not, does the hardware platform include a secure element that would offer capabilities similar to those of such an SoC? Even if the answer to both questions is no, some security vendors offer purely software-based root-of-trust solutions.

Chain of trust

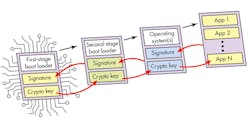

With the root of trust present (Fig. 2), the boot process establishes a chain of trust:

• Has the hardware validated and authenticated the bootloader?

• Has the bootloader validated and authenticated the operating system or other systems?

• Have the operating systems validated and authenticated application code?

• Has the validation and authentication been done by the hardware or software, and is this different at each stage?

By utilizing the technologies listed above, you can be assured that your device will be running authentic and unaltered application code—with an extremely low risk of the device being hijacked.
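The stage-by-stage checks above can be pictured with a small model. The following is only a sketch under stated assumptions: it uses an HMAC with a single shared key (the hypothetical `ROOT_KEY`) as a stand-in for the asymmetric signatures and fused keys that real secure-boot hardware typically relies on, and the function names and image contents are invented for illustration.

```python
import hashlib
import hmac

# Hypothetical stand-in for a key anchored in boot fuses or a secure element.
ROOT_KEY = b"demo-root-key-not-for-production"


def sign(image: bytes, key: bytes) -> bytes:
    """Return an HMAC-SHA256 tag over a boot image (simplified 'signature')."""
    return hmac.new(key, image, hashlib.sha256).digest()


def verify_chain(stages, key):
    """Check each (name, image, tag) stage in boot order.

    Verification stops at the first stage whose tag does not match, so
    nothing after a tampered stage is ever trusted. Returns the names of
    the stages that were authenticated.
    """
    trusted = []
    for name, image, tag in stages:
        if not hmac.compare_digest(sign(image, key), tag):
            break
        trusted.append(name)
    return trusted


# Illustrative images; real ones would be read from flash.
images = [
    ("bootloader", b"bootloader v1"),
    ("os", b"kernel v1"),
    ("application", b"application v1"),
]
good = [(name, img, sign(img, ROOT_KEY)) for name, img in images]

# Same tags, but the OS image has been modified after signing.
tampered = list(good)
tampered[1] = ("os", b"kernel v1 + malware", good[1][2])

print(verify_chain(good, ROOT_KEY))      # ['bootloader', 'os', 'application']
print(verify_chain(tampered, ROOT_KEY))  # ['bootloader']
```

The point of the model is the ordering: each stage is authenticated before control would pass to it, so a modified operating-system image prevents both the OS and the application from being trusted.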

Data-in-Use Protection Requirements

These requirements are applicable to a device that’s operating normally, with the data being generated and processed. A few technologies to utilize at this stage might include:

Hardware-enforced isolation

Some software developers physically isolate applications by placing multiple SoCs side by side. Not everyone can afford to do this, so for the rest of us, the next best thing is to use ARM TrustZone. ARM TrustZone technology implemented in a SoC can be leveraged to address the network, application, and data aspects of the layered security model.

ARM TrustZone architecture provides a solution that’s able to carve out or partition a hardware subset of the full SoC. It does this by defining processors, peripherals, memory addresses, and even areas of L2 cache to run as “secure” or “non-secure” hardware. A SoC that utilizes TrustZone technology has the ability to dynamically expose the full SoC to secure software, or else expose a subset of that SoC to normal software.

The normal world (non-secure world) created and enforced by TrustZone is typically a defined hardware subset of the SoC. TrustZone ensures that a non-secure processor can access only non-secure resources and receive only non-secure interrupts.

For example, a normal world hardware subset might include the UART, Ethernet, and USB interface, but exclude controller-area-network (CAN) access. The CAN might instead be dedicated to the secure world, where a separate RTOS or application runs for the sole purpose of managing CAN traffic, independent of the normal world software stack. In this example, one can run Linux with an internet connection and be certain that even if someone hacks and brings it down, the communication via CAN with the off-the-chip/board unit that might be attached to the patient will not be jeopardized.

Unlike normal world software, which is restricted to its hardware subset, software running within the secure world has complete access to all of the SoC hardware. Thus, from the perspective of the secure software’s execution, the system looks and acts nearly identical to what would be seen on a processor that doesn’t have TrustZone. This means that secure software has access to all resources associated with both the secure and normal worlds. As such, updating software in the normal world via code executing in the secure world is very easy.
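The access rules described in this section can be summarized in a few lines. This is a conceptual model only, not TrustZone code or any vendor API: the `PARTITION` table and the `can_access` function are hypothetical names, and the peripheral assignment simply mirrors the UART/Ethernet/USB/CAN example given earlier.

```python
SECURE = "secure"
NON_SECURE = "non-secure"

# Hypothetical partitioning that mirrors the example in the text:
# CAN is reserved for the secure world, everything else is shared.
PARTITION = {
    "uart": NON_SECURE,
    "ethernet": NON_SECURE,
    "usb": NON_SECURE,
    "can": SECURE,
}


def can_access(world: str, peripheral: str) -> bool:
    """Model the rule: the secure world sees every resource, while the
    normal world sees only resources marked non-secure."""
    if world not in (SECURE, NON_SECURE):
        raise ValueError(f"unknown world: {world}")
    if peripheral not in PARTITION:
        raise KeyError(f"unknown peripheral: {peripheral}")
    return world == SECURE or PARTITION[peripheral] == NON_SECURE


# Linux in the normal world can use Ethernet but cannot touch CAN.
print(can_access(NON_SECURE, "ethernet"))
print(can_access(NON_SECURE, "can"))
# The RTOS in the secure world can reach both.
print(can_access(SECURE, "can"))
```

On real hardware this decision is made by the bus fabric and the processor state rather than by software, which is why a compromised normal-world OS cannot simply override it.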

Software-enforced separation

If the hardware separation isn’t an option, the next best thing is to use the software to isolate and protect applications. In the past, particularly on single-core SoCs, some designs utilized the concept of a separation kernel. These days, with multicore SoCs supporting virtualization extensions in the silicon, more designs are utilizing embedded hypervisors.

Hypervisors allow multiple instances of the same or different operating systems to execute on the same SoC, each as a virtual machine. Each virtual machine can be isolated, and through use of a system memory management unit (MMU), it’s possible to virtualize other bus masters. This separation can be used to protect and secure resources and assets in one virtual machine from other virtual machines.

To fit the real-time and performance demands of medical devices, embedded virtualization places certain requirements on a hypervisor design. First, not just any hypervisor will do: it has to be a Type-1 (bare-metal) hypervisor that supports hardware virtualization extensions, has a minimal footprint, and is available in source code. The reasoning behind some of these requirements is straightforward: The smaller the code, the less performance degradation one would expect when moving from native to virtualized execution.

In addition, the better the SoC’s support for virtualization (assuming the hypervisor supports it), the less code will be required to implement the same features in software, and the faster things will run (aka hardware offload). Finally, most embedded designs are custom. No two designs are alike, even if they use the same underlying SoC, board, and guest OSs. The ability to tinker with source files to optimize the hypervisor performance and behavior to better fit a particular SoC/board/design is crucial to your success.

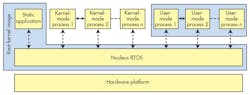

User-space isolation

Many operating systems today offer some type of MMU-enforced isolation of application code running in RAM; Linux has user space, and Nucleus RTOS has a process model (Fig. 3). The idea here is that while the operating system kernel runs at a more privileged level, such as EL1 or EL2, applications run at EL0 and use various memory-isolation techniques to protect code and data on a per-application basis.

Information or data obfuscation

While most developers are careful about hiding and encrypting passwords, care must also be taken to obfuscate variables and text strings stored in memory or storage. This will make it more difficult for bad actors to modify variables and text strings, or even reverse-engineer the device operation.
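As a rough illustration of string obfuscation, the sketch below XORs a text string with a repeating key so that it no longer appears in readable form in a memory or storage dump. The function names, the key, and the sample string are all assumptions made for this example. Note that this is obfuscation, not encryption: it raises the effort needed to find and patch a string but offers no cryptographic protection.

```python
def obfuscate(text: str, key: bytes) -> bytes:
    """XOR the UTF-8 bytes of `text` with a repeating key."""
    if not key:
        raise ValueError("key must not be empty")
    data = text.encode("utf-8")
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


def deobfuscate(blob: bytes, key: bytes) -> str:
    """Reverse obfuscate(); XOR with the same key restores the bytes."""
    if not key:
        raise ValueError("key must not be empty")
    data = bytes(b ^ key[i % len(key)] for i, b in enumerate(blob))
    return data.decode("utf-8")


# Hypothetical key and string, for illustration only.
KEY = b"\x5a\xa7\x3c"
label = "ALARM_THRESHOLD_BPM"

stored = obfuscate(label, KEY)       # what would sit in flash or RAM
restored = deobfuscate(stored, KEY)  # recovered only at the point of use
```

In a shipping device the same idea is usually applied at build time, so that the readable string never appears in the binary image at all.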

In addition to the aforementioned isolation techniques, keep in mind that these devices are in many instances connected to the outside world, and every connection must be secured. At a minimum, a developer should enable and configure a firewall and use publicly available tools to perform network stress testing and network penetration analysis.

Data-in-Transit Protection Requirements

Data in transit relates to data entering or leaving a device while the device is “on.” A good design should always address the following two areas.

• Prior to sending the data, will the device utilize any mutual attestation? Various tricks and techniques could be deployed to authenticate the receiver of the information prior to sending it.

• How is the data protected in the event the device is hijacked? Encrypting the data while at rest and during transit provides some level of protection. For efficient cryptography operations, SoCs with crypto engines should be considered. The crypto engine is a self-contained module designed to offload the encryption/decryption work from the core processor. Because the crypto engine is a self-contained IP block, hackers can find it difficult to derive techniques to gain access to the encryption process. The use of crypto engines can have a huge impact on an application’s ability to secure data quickly and efficiently.
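One simple way for two parties to authenticate each other before any data is sent is a challenge-response exchange. The sketch below is a simplified stand-in for mutual attestation, assuming a pre-shared key on both sides; production systems would more likely rely on an established protocol such as TLS with client certificates. All function names and role labels are hypothetical.

```python
import hashlib
import hmac
import secrets


def make_challenge() -> bytes:
    """Generate a fresh random nonce so that responses cannot be replayed."""
    return secrets.token_bytes(16)


def respond(key: bytes, challenge: bytes, identity: bytes) -> bytes:
    """Prove knowledge of the key by tagging the role label and challenge."""
    return hmac.new(key, identity + challenge, hashlib.sha256).digest()


def check(key: bytes, challenge: bytes, identity: bytes, response: bytes) -> bool:
    """Recompute the expected response and compare in constant time."""
    return hmac.compare_digest(respond(key, challenge, identity), response)


def mutual_attest(device_key: bytes, server_key: bytes) -> bool:
    """Run both directions of the exchange; succeed only if both pass."""
    # The device challenges the server before sending any data.
    c1 = make_challenge()
    server_ok = check(device_key, c1, b"server", respond(server_key, c1, b"server"))
    # The server challenges the device before accepting any data.
    c2 = make_challenge()
    device_ok = check(server_key, c2, b"device", respond(device_key, c2, b"device"))
    return server_ok and device_ok


shared = secrets.token_bytes(32)
print(mutual_attest(shared, shared))                   # both sides hold the key
print(mutual_attest(shared, secrets.token_bytes(32)))  # impostor server
```

Including the role label in the tag prevents one side's response from being reflected back as the other side's answer. The per-message HMAC work is the kind of operation a crypto engine can offload from the core processor.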

The Rest of the Story

Now that we’ve outlined some of the technologies that medical-device manufacturers should consider, we can’t skip mentioning the process itself. Recently, while working on a highly secure piece of software, the author of this article followed the widely accepted Security Development Lifecycle (SDL).

Some might find the SDL process a bit heavy and involved. However, developers should take the proper care in selecting the correct tools to ensure the traceability of requirements, code, and test cases.

It’s also important to use appropriate tools for the verification stage of the development process. Verification and validation could be as simple as using static-code-analysis tools to check compliance with MISRA (safety) or CERT C (security). With enhanced connectivity options, it’s also important to perform network stress testing and employ third-party testing and certification, such as Achilles certification offered by Wurldtech, a Mentor Graphics partner.

Once development is completed and devices are shipped, one has to determine the remediation strategy. This might be the deciding factor in using a commercial Linux offering like Mentor Embedded Linux. Mentor Embedded Linux customers will have a Mentor security team proactively monitoring the US-CERT website to identify Common Vulnerabilities and Exposures (CVE) that affect their products. If a vulnerability is discovered, Mentor will notify customers and provide patches for the affected products according to the product support policy. It’s then up to the device manufacturer to apply the patch to the code base used in the product, and if needed, roll out the software update.
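The matching step in such a monitoring process, deciding which published advisories apply to a shipped product, can be sketched as a comparison between the device's software inventory and a list of advisories. Everything below is an illustrative assumption: the package names, version numbers, and advisory identifiers are placeholders rather than real CVE records, and the "fixed in" rule is a simplification of how real advisories describe affected versions.

```python
def parse_version(version: str) -> tuple:
    """Turn '1.2.10' into (1, 2, 10) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def affected(inventory: dict, advisories: list) -> list:
    """Return advisories that apply to the installed package versions.

    An advisory applies when the package is present in the inventory and
    the installed version is older than the version carrying the fix.
    """
    hits = []
    for adv in advisories:
        installed = inventory.get(adv["package"])
        if installed is None:
            continue  # package not shipped in this product
        if parse_version(installed) < parse_version(adv["fixed_in"]):
            hits.append((adv["id"], adv["package"], installed, adv["fixed_in"]))
    return hits


# Placeholder inventory and advisories, for illustration only.
inventory = {"libexample": "1.2.3", "netstack": "2.0.0"}
advisories = [
    {"id": "EXAMPLE-0001", "package": "libexample", "fixed_in": "1.2.10"},
    {"id": "EXAMPLE-0002", "package": "netstack", "fixed_in": "1.9.0"},
    {"id": "EXAMPLE-0003", "package": "not-shipped", "fixed_in": "1.0"},
]

for hit in affected(inventory, advisories):
    print(hit)
```

Comparing versions as tuples of integers rather than as strings matters here: a plain string comparison would wrongly treat 1.2.3 as newer than 1.2.10.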

Summary

Security for medical IoT devices is a complex subject. Designing an embedded system from the ground up requires many of the proven security capabilities used for years by other electronic system designs—secure boot, code authentication, and chain of trust, to name a few. These are fundamental security capabilities that every connected device needs to include in some shape or form.

Further, as these devices blend seamlessly into our daily lives, it’s incumbent on software developers to design each new device with security as a paramount concern. Through the use of ARM’s TrustZone technology, together with a Type-1 hypervisor, developers can provide a strong, robust, and secure base for SoC designs that meet the demands of our ever-expanding IoT world.