With more than 200 billion semiconductor devices expected to ship in 2015, making sure they’re all acceptable for their target market creates an interesting challenge for test and quality-control engineers. Smart design and test teams have employed steadily improving real-time data analysis to help balance quality and quantity. Now, “big-data” analysis is turning its focus to systems by tracking components to ensure optimum performance matching, while also accelerating the system debug process.

This article will look at how big data is solving problems all along the supply chain, and how system developers can incorporate the newly evolved system application of big-data analysis to improve performance and lower overall cost.

In the mid-2000s, semiconductor companies began to examine their manufacturing test processes to look for new ways to effectively capture timely, actionable data from their test operations. Many of these forward-looking companies realized that the ability to collect, detect, and analyze manufacturing test data in real time could positively impact product management in the supply chain, thereby significantly limiting test escapes while providing valuable test DNA for every device processed on the manufacturing floor.

Data is Good, So What’s the Hold Up?

Numerous success stories demonstrate how “big-data analytics” can drive improvements directly to the bottom line. Still, three main hurdles must be dealt with when it comes to implementing real-time big-data solutions for semiconductor manufacturing operations:

1. Why fix it if it isn’t broken?

For 50+ years, semiconductor companies lived by the mantra that tester data was always, or almost always, correct. They didn’t believe that there was any significant return for the effort to challenge their tester data.

After all, the semiconductor industry has been wildly successful in both design and manufacturing. And with many products yielding at more than 90% in high-volume production, the prevailing opinion is that this is “good enough.” But if your competitors are manufacturing their products with up to 2% more yield for the same (or even less) effort and with lower defective parts per million (DPPM) rates, the status quo becomes untenable.

2. Creates more work for the operations team

There’s a preconceived notion that more data always means more work for the operations team. In reality, big-data solutions actually speed up analysis and deliver multiple benefits to semiconductor manufacturing organizations by removing painstaking and error-prone data collection. This, in turn, frees up product and yield engineers to focus on what is really important: Finding the opportunities that will proactively improve product yield and quality.

3. Big-data solutions will eliminate my job

Many engineers feel that new technologies or efficiencies may reduce or eliminate their jobs, so they’re hesitant to recommend any new tools or processes. However, what operations engineers find out is that big-data analytics tools make them more productive and empower them to leverage their knowledge and expertise to solve new and more challenging problems. Thus, they become even more valuable to their organizations.

How Data Solves Problems

Semiconductor manufacturing organizations collect terabytes of data each month from across their global supply chain. For those that made the investment in real-time big-data analytics, actionable intelligence is now available to make informed decisions that directly impact the bottom line. One example is the preservation of yield entitlement through the analysis of site-to-site tester issues.

When a device is in high-volume production, many factors can negatively affect yield. The challenge is to correct those issues as quickly as possible. One such issue that contributes to yield loss is a bad or failing probe card for a particular test site. In many instances, it can be hours (or longer) before the problem is detected and corrected. If the yield for that test site dropped from 95% to 85%, then roughly 10% of the devices tested at that site over that period were labeled “bad” when they were actually “good,” resulting in fewer devices to sell and lower product revenue.

However, by using big-data analytics with data pulled in real time from the factory floor, that drop in yield can be automatically detected within minutes. In turn, the appropriate engineers, technicians, or systems along the chain can be alerted to quickly take action to maintain the expected product yield. The problem needs to be addressed either way, but with big-data analytics, it can be identified and resolved more quickly.
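As a rough illustration of this kind of site-to-site monitoring, the sketch below keeps a rolling window of pass/fail results per test site and flags any site whose recent yield falls well below an expected baseline. The function name, the window size, the 95% baseline, and the 5-point alert threshold are all assumptions chosen for the example; they do not describe any particular vendor's product.

```python
from collections import defaultdict, deque

def find_low_yield_sites(results, window=200, baseline=0.95, max_drop=0.05):
    """Return {site: rolling_yield} for sites whose recent yield fell too far.

    results  -- iterable of (site_id, passed) tuples in test order
    window   -- number of most recent devices per site to consider (assumed)
    baseline -- expected yield for a healthy site (assumed)
    max_drop -- allowed shortfall below baseline before flagging (assumed)
    """
    recent = defaultdict(lambda: deque(maxlen=window))
    for site, passed in results:
        recent[site].append(bool(passed))

    flagged = {}
    for site, outcomes in recent.items():
        if len(outcomes) < window:
            continue  # not enough data to judge this site yet
        rolling_yield = sum(outcomes) / len(outcomes)
        if baseline - rolling_yield > max_drop:
            flagged[site] = rolling_yield
    return flagged

# Synthetic data: sites 0 and 1 yield 95%, site 2 (bad probe card) yields 85%.
results = []
for i in range(200):
    results.append((0, i % 20 != 0))  # 1 failure in every 20 devices
    results.append((1, i % 20 != 0))
    results.append((2, i % 20 >= 3))  # 3 failures in every 20 devices

print(find_low_yield_sites(results))  # {2: 0.85}
```

In a production setting, the flagged sites would feed an alert to the responsible engineer or technician rather than a print statement, and the baseline would more likely come from the product's own history than from a fixed constant.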

Collecting data uniformly through the distributed supply chain enables manufacturing operations to optimize all of the data points in wafer manufacturing. Oftentimes, wafer manufacturing, wafer sort, and final test are processed in various countries with different subcontractors (subcons), who may have different systems and processes. Centralizing all manufacturing data and running it through appropriate analytics provides insight and history into every single chip that’s manufactured by the semiconductor company, regardless of its origins or development path.

A case in point involved the diagnosis of a very rare failure that was not identified until the packaged device was in the hands of an electronics-manufacturing-service (EMS) provider. The problem was initially identified by a high DPPM rate. This would usually result in the defective parts being shipped back to the customer and a failure-analysis team being tasked with determining the root cause.

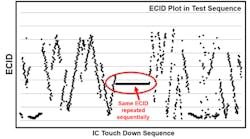

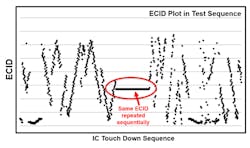

The process would typically take seven days. But in this instance, a single product engineer, using appropriate tools to analyze the manufacturing data, was able to identify the problem in 15 minutes (it was duplicate electronic chip IDs, or ECIDs), diagnose the root cause in 1 hour (piggybacked die during final test), and perform a complete risk assessment for the entire product run (over four million devices) in less than a day (Fig. 1). All this despite being located more than 6000 miles from the EMS provider. This was all possible because the engineer had access to the entire test history for the product in question, from wafer sort through final test, and was able to query that historical data to search for, find, and confirm the cause of the problem.
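To make the idea of querying test history for duplicate ECIDs concrete, here is a minimal sketch. It assumes each final-test record carries the ECID read from the die plus some identifier for the physical unit being handled; the record layout and the key names "ecid" and "unit" are hypothetical and are not taken from the case described above.

```python
from collections import defaultdict

def find_duplicate_ecids(final_test_records):
    """Map each ECID reported for more than one physical unit to those units.

    final_test_records -- iterable of dicts with hypothetical keys
                          "ecid" (ID read from the die) and
                          "unit" (identifier of the unit being tested)
    """
    units_by_ecid = defaultdict(set)
    for rec in final_test_records:
        units_by_ecid[rec["ecid"]].add(rec["unit"])
    # An ECID should belong to exactly one unit; anything else is suspect.
    return {
        ecid: sorted(units)
        for ecid, units in units_by_ecid.items()
        if len(units) > 1
    }

records = [
    {"ecid": "A1", "unit": "lot7-001"},
    {"ecid": "A2", "unit": "lot7-002"},
    {"ecid": "A2", "unit": "lot7-003"},  # same ECID, different unit
    {"ecid": "A1", "unit": "lot7-001"},  # retest of the same unit is fine
]
print(find_duplicate_ecids(records))  # {'A2': ['lot7-002', 'lot7-003']}
```

Grouping by unit rather than simply counting records keeps ordinary retests of the same device from being reported as duplicates.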

Another benefit of big data is that other business units can find value by accessing the same real-time data. Operations managers, finance teams, and product planners now can take full advantage of a consistent, complete, and accurate dataset and use it in their daily tasks to improve overall business operations (Fig. 2).

In short, quality, centralized data improves the overall view for every department in the organization and enables better decisions to be made across the entire company.

Data Analysis for Systems Designers

The big-data challenges faced by semiconductor companies have many parallels in the world of electronics. First of all, electronic systems are based heavily on semiconductor content. Secondly, compared to the semiconductor supply chain, the electronics supply chain is even more diverse and complex. It’s not uncommon to have multiple vendors supply scores of different parts for a single electronic system. Every single part must be tested, and all of the parts must work together. While building and testing an electronic system, numerous errors can crop up that may be difficult for engineers to identify and correct.

Big-data solutions can help engineers get closer to the root cause of the problem by collecting data on every part used in that system. While a part may work perfectly in one system, the same part paired with a part that has different performance characteristics may not work properly in a different system configuration.
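One simple way to look for such pairing effects, assuming system-test records note which component lots went into each unit, is to compute the failure rate for every combination of two parts and rank the results. The field names ("cpu_lot", "memory_lot", "passed") and the minimum sample size below are hypothetical choices for this sketch.

```python
from collections import Counter

def failure_rate_by_pairing(system_tests, part_a="cpu_lot",
                            part_b="memory_lot", min_units=20):
    """Rank (part_a, part_b) combinations by system-test failure rate.

    system_tests -- iterable of dicts holding the two part fields and a
                    boolean "passed" (all field names are hypothetical)
    min_units    -- ignore pairings with too few units to be meaningful
    """
    built = Counter()
    failed = Counter()
    for rec in system_tests:
        pair = (rec[part_a], rec[part_b])
        built[pair] += 1
        if not rec["passed"]:
            failed[pair] += 1
    rates = {pair: failed[pair] / n for pair, n in built.items()
             if n >= min_units}
    # Worst-performing pairings first.
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)

# Synthetic data: each lot is fine on its own, but C2 with M2 fails often.
tests = []
for i in range(40):
    tests.append({"cpu_lot": "C1", "memory_lot": "M1", "passed": True})
    tests.append({"cpu_lot": "C1", "memory_lot": "M2", "passed": True})
    tests.append({"cpu_lot": "C2", "memory_lot": "M1", "passed": True})
    tests.append({"cpu_lot": "C2", "memory_lot": "M2", "passed": i % 4 != 0})

worst_pair, worst_rate = failure_rate_by_pairing(tests)[0]
print(worst_pair, worst_rate)  # ('C2', 'M2') 0.25
```

A per-part view of the same data would show both C2 and M2 as only mildly worse than their peers; grouping by the combination is what exposes the interaction.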

As in the semiconductor world, big-data solutions can provide engineers with comprehensive device data that enables design teams to isolate problems more easily and find their root causes more quickly. Without a comprehensive big-data solution in place, electronics-manufacturing operations teams would need to spend significant time and effort just to collect enough information to consistently replicate the problem before they can begin debugging. Electronics companies deploying big-data solutions are solving their product integration problems up to 95% faster.

What to Look for in a Big-Data Analytics Solution

Whether an organization is implementing a new big-data solution or working with a partner, it’s wise to evaluate these five key factors:

1. Enterprise-wide solution: Engineering teams should implement solutions that provide a complete view into subcons and a clear picture of what’s happening at every endpoint in the global supply chain. These solutions must be enterprise-wide in order to collect data, detect abnormalities, and analyze results.

2. Dedicated resources: It’s crucial to have dedicated resources connected to all of the testers on the factory side. That way, they can collect and verify the data in real time to ensure that the data collected is complete prior to data analytics.

3. Adequate storage: A big-data analytics tool must feed into a data-storage solution that’s able to handle the storage and rapid access of terabytes of data each month.

4. Automated rules-based algorithms: It’s critical to have an automated rules-based analytics system in place that can detect issues 24/7 and help narrow down the scope of the problem, as well as assist engineers to identify the root cause of any manufacturing issues.

5. Sophisticated analytic and reporting tools: Manufacturing data is important to more than just engineering operations. Any big-data solution must also provide sophisticated analytic and reporting tools to enable decision-makers across the company to see an end-to-end view of their products.

Whether a semiconductor or electronics company needs to improve yield, quality, or productivity, or quickly identify the root cause of a manufacturing problem, big-data solutions can help address the problems of data collection, detection, and action to provide increased ROI. Many of the world’s largest integrated device manufacturers (IDMs) and fabless semiconductor companies are deploying big-data analytics solutions to help them effectively collect and analyze their manufacturing data.

Such analysis preserves the engineering and development expertise you’ve put into designing and producing the best chips or systems. And at the same time, it keeps the front office happy by boosting profit margins and market share.

David Park, Vice President of Worldwide Marketing for Optimal+, has over 20 years of experience in design and manufacturing software for the semiconductor and electronics market segments. Prior to joining the Optimal+ team in 2013, David held senior management positions in multiple high-technology companies, including Synopsys, Cadence Design Systems, and C Level Design.