What will soon be one of the most sophisticated robots around? Your trusty automobile. While there won’t be a bipedal robot replacing the human driver, the car itself will be the robot, sporting more sensors and software to provide a safe driving experience. Though expected for self-driving cars, this technology is also finding its way into the more conventional vehicles of today and tomorrow. It will help provide a safer driving environment even when a person is driving the vehicle.

Research on self-driving cars and augmented driving is going on everywhere, at companies ranging from Google to Ford (Fig. 1). Toyota is dropping $1 billion over five years to help foster artificial intelligence (AI) research for self-driving cars.

Robotic cars will not have a pair of Mark 1 eyeballs. Instead, they will have different sensors arrayed about the car, from lasers and radar to ultrasonic and visual sensors. This advanced environmental awareness will be fed to simultaneous localization and mapping (SLAM) software and used by AI applications to analyze and act on this information. There will be multiple computers and cores handling all this information, pushing automotive platforms into the high-performance embedded computing (HPEC) arena.

Sensors are not the only way cars will be getting information about their environment. We already have GPS for mapping applications, but vehicle-to-vehicle (V2V) and vehicle-to-infrastructure (V2I) communication, collectively known as V2X, are coming into play as well. This requires a cooperative infrastructure that is costly to implement and change, so it needs to be done as flexibly and correctly as possible the first time.

Advanced Environmental Awareness

The challenge for automotive sensing systems is the range of environments a car encounters. It is easy to garner information about the environment when nothing is moving on a clear day or night, but add dozens of moving objects, rain, fog, glare, and other problems, and the job becomes much more difficult.

No single sensor can address all situations, which is why a variety of sensors are being employed. There tends to be overlap in range and performance, with each sensor being optimized for a particular aspect of the application. Sensor integration—a common term with regard to smartphones and other Internet of Things (IoT) devices—is something automotive sensors are being tied into, but the problem is much more complex. The sensor information is often used for building up a view of the surrounding environment, not just the operation of the device itself.

Nearby objects need to be tracked and decisions need to be made based upon a wide variety of inputs. The problems are actually more complex than those encountered by fighter aircraft, which already perform many of these functions (e.g., tracking objects).

This is where SLAM comes into play. The challenge is dealing with the large amounts of information from the growing array of sensors and incorporating it into a changing 3D map. Complex algorithms such as the extended Kalman filter (EKF), which has been used in robotics research for decades, are being employed.
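To make the EKF idea concrete, here is a minimal sketch of one predict/update cycle for a single state variable (position along a road) corrected by a range reading to a known landmark. The function names, the landmark geometry, and the noise values are all hypothetical choices for illustration; a real SLAM system tracks many state variables and also estimates the landmark positions themselves.

```python
import math


def ekf_step(x, p, u, z, landmark_x, lateral_offset, q, r):
    """One predict/update cycle of a scalar extended Kalman filter.

    x, p : prior position estimate and its variance
    u    : distance travelled since the last step (odometry)
    z    : measured range to the landmark
    q, r : process and measurement noise variances (assumed values)
    """
    # Predict: the motion model x' = x + u is linear, so variance just grows.
    x_pred = x + u
    p_pred = p + q

    # The measurement model is nonlinear: range = sqrt(dx^2 + offset^2).
    dx = x_pred - landmark_x
    expected_range = math.hypot(dx, lateral_offset)
    h = dx / expected_range  # Jacobian of the range with respect to x

    # Update: blend prediction and measurement using the Kalman gain.
    s = h * p_pred * h + r
    k = p_pred * h / s
    x_new = x_pred + k * (z - expected_range)
    p_new = (1.0 - k * h) * p_pred
    return x_new, p_new


def simulate(steps=20):
    """Drive 1 m per step with noise-free range readings."""
    landmark_x, lateral_offset = 50.0, 5.0
    true_x = 0.0
    x, p = 3.0, 9.0  # deliberately wrong initial guess, large variance
    for _ in range(steps):
        true_x += 1.0
        z = math.hypot(true_x - landmark_x, lateral_offset)
        x, p = ekf_step(x, p, 1.0, z, landmark_x, lateral_offset,
                        q=0.01, r=0.25)
    return x, true_x, p
```

Calling `simulate()` returns the final estimate, the true position, and the remaining variance. The "extended" part is the Jacobian `h`, which linearizes the range equation around the current prediction at every step.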

SLAM needs to track a variety of objects from stationary landmarks to moving objects. The systems need to deal with data that is incomplete, having limited accuracy and possibly a wide margin of error. The systems also need to deal with uncertainty because objects will be occluded, and an object’s make-up can affect the results from the sensors. For example, reflectivity can affect the results of most sensors, albeit to different degrees. This is why a variety of sensors can help improve the overall analysis.
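One simple way to see why several imperfect sensors beat one is inverse-variance weighting, sketched below. This is a textbook technique chosen for illustration, not a description of any particular vehicle's software, and the radar and camera figures in the usage note are made-up values.

```python
def fuse(estimates):
    """Combine independent (value, variance) readings of the same quantity.

    Each reading is weighted by the inverse of its variance, so the
    more trustworthy sensor dominates the result.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total
```

For example, `fuse([(20.0, 0.04), (21.0, 1.0)])` combines a hypothetical radar range with a much noisier camera estimate. The fused variance is always smaller than that of the best individual sensor, which is the statistical payoff of overlapping coverage.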

Automotive Ethernet is helping tie together multiple sensors such as cameras. Technologies like this are important because the resulting system cannot be big, bulky, hard to maintain, or expensive. A 360-deg., bird's-eye surround view is created by knitting together the output from four cameras with fisheye lenses.

Artificial intelligence (like the cloud and IoT) comes in many forms, from rule-based systems to neural networks to deep learning applications. Various forms of AI will be employed for self-driving cars, but also for the Advanced Driver Assistance Systems (ADAS) that are being deployed now. ADAS typically provides warnings, such as lane-change and back-up alerts, and leaves actual collision avoidance to the driver.

Infrastructure Awareness

Creating a standalone system is complex enough on its own, but adding cooperative wireless communication to the mix can actually simplify the job. This is where V2X (Fig. 2) comes in. This technology uses wireless communication between a vehicle and the surrounding infrastructure, as well as nearby vehicles equipped with V2X support.

With V2X, the position and movement information of a vehicle obtained from GPS and other location technology is shared with nearby vehicles. This information can also be shared with the infrastructure, allowing for optimizations such as the timing of stop lights and control of the vehicles. For ADAS applications, this could mean notifying the driver of a change in the upcoming traffic light, as well as of oncoming cross traffic that might not be visible from the vehicle. This information could also be used to help alleviate traffic congestion.
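The traffic-light notification can be sketched as a simple arrival-time comparison. The function below is hypothetical: its name, parameters, and two-state signal model are assumptions made for illustration, and it ignores yellow phases, acceleration, and the message formats that real V2X standards define.

```python
def signal_advisory(distance_m, speed_mps, signal_state, seconds_to_change):
    """Suggest a driver advisory from a broadcast signal status.

    distance_m        : distance from the vehicle to the stop line
    speed_mps         : current vehicle speed in meters per second
    signal_state      : "green" or "red" (simplified two-state model)
    seconds_to_change : time until the signal switches state
    """
    if speed_mps <= 0:
        return "stopped"
    # Time needed to reach the stop line at the current speed.
    eta_s = distance_m / speed_mps
    arrives_before_change = eta_s < seconds_to_change
    if signal_state == "green":
        return "proceed" if arrives_before_change else "prepare to stop"
    # Red now: arriving after the change means the light should be green.
    return "prepare to stop" if arrives_before_change else "proceed"
```

A vehicle 100 m from the light at 10 m/s needs 10 s to arrive, so `signal_advisory(100, 10, "green", 5)` yields a warning to prepare to stop. The same comparison run on the infrastructure side is what would let a controller adjust light timing to approaching traffic.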

Companies like Savari are developing and testing the sensors and communication support for V2X. There have been a number of voluntary deployments and pilot projects to show the viability of V2X, in addition to finding issues that need to be addressed.

V2X will be most useful when all vehicles are equipped with the technology, but there are benefits even when a subset of vehicles and infrastructure is in place. Vehicles with V2X will have the advantage, but everyone will benefit if the infrastructure components provide advantages such as congestion notifications. Some of this is already in place, using smartphones to track people in vehicles to generate maps with congestion information.

Automotive Processing

V2X tends to be a more straightforward communication and computation application, but sensor analysis, SLAM, and AI support are placing heavy demands on processing power within the car. A variety of solutions are being delivered, and often multiple platforms are used in a distributed fashion.

For example, Renesas’ R-CAR H3 family is built around a multicore, 64-bit ARM Cortex-A57/53 system and Imagination’s PowerVR GX6650 as the 3-D graphics engine. That tends to be a typical platform, but dual lock-step Cortex-R7 and IMP-X5 parallel programmable engines set it apart. The IMP-X5 delivers advanced image recognition support. The H3 can be used to generate 360-deg. views from multiple cameras, as well as perform image analysis to identify objects and people. QNX Software Systems’ QNX CAR supports the H3, providing ADAS support.

The Renesas ADAS Surround View Kit (Fig. 3) is an example of the R-CAR platform in action. It has four IMI Tech cameras, and the open-source software combines the output into a single 360-deg. view.

Nvidia's Jetson TX1 and Drive PX 2 are platforms targeting automotive applications. The Jetson TX1 is also built around a multicore Cortex-A57/53 system, but it pairs the cores with a heftier GPU. The Maxwell GPU has 256 cores delivering over 1 TFLOPS of computing power that can be targeted at applications like deep learning using software like Nvidia's CUDA Deep Neural Network (cuDNN) library. The Drive PX 2 module provides even more computing power. It has 12 CPU cores and delivers 8 TFLOPS using two Tegra and two discrete GPU chips.
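The 1-TFLOPS figure can be sanity-checked with a rough peak-throughput estimate. The sketch below assumes a GPU clock of about 1 GHz, a fused multiply-add counted as two operations, and half-precision math issuing at twice the single-precision rate. None of these figures comes from the article, so treat the result as an order-of-magnitude check rather than a specification.

```python
def peak_gflops(cores, clock_ghz, flops_per_core_per_cycle):
    """Theoretical peak throughput in GFLOPS (no memory or stall effects)."""
    return cores * clock_ghz * flops_per_core_per_cycle


# Assumed values: 1.0 GHz clock, 2 FLOPs per cycle for a single-precision
# fused multiply-add, 4 per cycle when two half-precision values are packed.
single_precision = peak_gflops(256, 1.0, 2)
half_precision = peak_gflops(256, 1.0, 4)
```

Under these assumptions, 256 cores reach roughly 0.5 TFLOPS in single precision and about 1 TFLOPS in half precision, which suggests the quoted number refers to the reduced-precision math that deep learning workloads can often tolerate.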

Deep learning is a class of machine learning technologies, also known as deep structured or hierarchical learning. It is designed to operate on large datasets and tends to be computationally intensive. It can employ other technologies such as neural networks and has been applied to a range of fields, such as computer vision and natural language processing.

There are a number of deep learning frameworks being developed. Caffe, for one, was developed at the Berkeley Vision and Learning Center (BVLC). It can process more than 60 million images per day running on an Nvidia K40 GPU. Inference takes 1 ms per image, while learning takes 4 ms per image.
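The per-image times and the daily total can be cross-checked with simple arithmetic, assuming the GPU runs continuously for 24 hours with no idle time. The helper name below is hypothetical.

```python
MS_PER_DAY = 24 * 60 * 60 * 1000  # 86,400,000 milliseconds in a day


def images_per_day(ms_per_image):
    """Images processed in 24 hours at a fixed per-image latency."""
    return MS_PER_DAY / ms_per_image


inference_per_day = images_per_day(1.0)  # 86.4 million images
learning_per_day = images_per_day(4.0)   # 21.6 million images
```

At 1 ms per image, a day of nonstop inference covers 86.4 million images, comfortably above the 60 million quoted. At the 4-ms learning rate, the daily total drops to 21.6 million.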

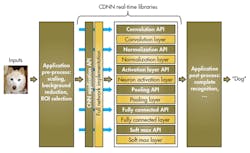

Not all deep learning applications require hefty floating point support. CEVA’s Deep Neural Networks (DNNs) framework (Fig. 4) runs on the CEVA-XM4. Developers start by building Caffe applications, which the software then converts into fixed-point support provided by the CEVA-XM4. The framework is distributed so a host processor can handle part of the workload.
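The general idea behind a floating-point to fixed-point conversion can be sketched in a few lines. This is a generic illustration of fixed-point quantization with an assumed 16-bit word and 8 fractional bits. It is not CEVA's conversion tool or its number format, and the function names are hypothetical.

```python
def to_fixed(value, frac_bits=8, total_bits=16):
    """Quantize a float to a signed fixed-point integer, saturating on overflow."""
    scaled = int(round(value * (1 << frac_bits)))
    lowest = -(1 << (total_bits - 1))
    highest = (1 << (total_bits - 1)) - 1
    return max(lowest, min(highest, scaled))


def to_float(fixed, frac_bits=8):
    """Convert a fixed-point integer back to a float."""
    return fixed / (1 << frac_bits)


def fixed_dot(weights, inputs, frac_bits=8):
    """Dot product using only integer multiplies and adds.

    Each product carries 2 * frac_bits fractional bits, so the
    accumulated sum is shifted right once to restore the format.
    """
    accumulator = sum(w * x for w, x in zip(weights, inputs))
    return accumulator >> frac_bits
```

With weights 0.5 and -1.25 and inputs 2.0 and 1.0, `fixed_dot` on the quantized values converts back to -0.25, matching the floating-point answer. Values that do not fit the chosen format lose precision or saturate, which is why a conversion step has to pick word sizes carefully for each network layer.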

We have come a long way from a car telling us that “A door is ajar” to providing warnings about lane changing and adjacent vehicles. There is much more to come, and a lot of work still to be done, on the applications needed to meet safety demands like ISO 26262 (ASIL-B). New cars will need to become robotic supercomputers to meet these challenges.
