Dataflow Processor Serves Up High-End Low Latency

Running machine-learning (ML) neural networks at the edge has two prerequisites: high performance and low power consumption. Deep Vision’s ARA-1 polymorphic dataflow architecture is designed to meet both. It typically requires less than 2 W of power while delivering low-latency operation by minimizing data movement within the chip. It targets edge applications, where all processing is done locally instead of shipping data to the cloud.

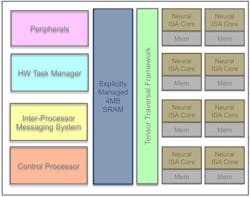

The dataflow architecture is built around multiple neural cores (see figure) linked to an explicitly managed memory and a hardware task manager. The neural cores run a custom instruction set that’s designed for data reuse to minimize data movement.
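To make the data-reuse idea concrete, here is a minimal Python sketch. It does not model the ARA-1 or its instruction set; the function names, the 1-D convolution, and the "load" counter are all illustrative assumptions. It only shows why keeping recently used inputs in a local buffer cuts the number of memory reads.

```python
from collections import deque


def conv1d_naive(signal, kernel):
    """1-D sliding dot product that re-reads inputs for every output (hypothetical baseline)."""
    loads = 0
    out = []
    k = len(kernel)
    for i in range(len(signal) - k + 1):
        acc = 0
        for j in range(k):
            acc += signal[i + j] * kernel[j]
            loads += 1  # count every input access as a memory load
        out.append(acc)
    return out, loads


def conv1d_reuse(signal, kernel):
    """Same computation, but a local sliding window means each input is loaded once."""
    loads = 0
    out = []
    k = len(kernel)
    window = deque(maxlen=k)  # stands in for a small local buffer next to the compute unit
    for x in signal:
        window.append(x)
        loads += 1  # one load per input element
        if len(window) == k:
            out.append(sum(w * c for w, c in zip(window, kernel)))
    return out, loads


signal = list(range(16))
kernel = [1, 2, 1]
naive_out, naive_loads = conv1d_naive(signal, kernel)
reuse_out, reuse_loads = conv1d_reuse(signal, kernel)
assert naive_out == reuse_out  # identical results, different memory traffic
print(naive_loads, reuse_loads)  # prints: 42 16
```

With a three-tap kernel, the reuse version reads each input once instead of up to three times. The gap grows with kernel size, which is the general motivation for architectures that favor data reuse.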

The architecture is fully programmable, although most developers will use Deep Vision’s compiler to map their models to the system. The compiler detects multiple dataflow patterns in each layer of an ML model and maps those to the cores. A Tensor Traversal Engine coordinates the chip resources to optimize system utilization.

The ARA-1 is a general-purpose platform, but it’s optimized for vision applications. In particular, Deep Vision targets camera-based ML. One use case is automotive in-cabin monitoring. Another is smart retail, such as food stores that track inventory.

The Deep Vision compiler supports popular frameworks, including TensorFlow, Caffe2, PyTorch, and MXNet, as well as interchange formats like ONNX. The toolset includes a bit-accurate simulator, a profiler with layer-wise statistics, and a power utilization optimizer.

The chip is available on its own or in several form factors. These include a USB device and an M.2 module, each with a single ARA-1. A U.2 PCI Express module is available with up to four ARA-1 processors on board. The U.2 module is hot-swappable and designed for use in edge servers.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site.

I earned a Bachelor of Electrical Engineering from the Georgia Institute of Technology and a Master's in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I also do some PHP programming for Drupal websites and have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found in our Kit Close-Up video series. You can also see me in many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.