BrainChip has revealed the architecture for its Akida Neuromorphic System-on-Chip (NSoC), which is based on spiking-neural-network (SNN) technology. The self-contained processing system, designed for embedded applications, could also act as a coprocessor. Multiple chips can be combined to handle larger SNNs as well as multiple SNNs.

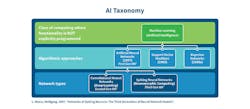

Spiking neural networks (SNNs) are an alternative to the convolutional neural networks (CNNs) that have become very popular in recent years (Fig. 1). SNNs differ from CNNs in how they operate, how they are trained, and how they perform inference. At a high level, though, they are very similar: a trained inference engine identifies inputs and produces outputs that indicate the characteristics configured as part of the network model. The outputs are probabilities rather than certainties, but a high probability is a good indication of correct identification.

1. Spiking neural networks offer an alternative to convolutional neural networks that have become very popular.

CNNs and SNNs both have an input layer and an output layer, with one or more hidden layers in between. In a CNN, input data flows through the network and is modified by the weights associated with the neurons in each layer. These weights are determined by training the model, which can take considerable time and requires many samples.

SNNs instead translate data into streams of spikes that flow through the neural network. Spikes are discrete events rather than the arrays of values a CNN processes, and differential equations define how the neurons accumulate input and generate spikes. One requirement of an SNN is therefore the conversion of input data into spike streams; these data-to-spike converters can be implemented in hardware or software.
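The article doesn't give Akida's neuron equations (only that it uses a fixed neuron model), so as an illustration of "differential equations define how spikes operate," here is a minimal sketch of a textbook leaky integrate-and-fire (LIF) neuron, one common spiking model. It Euler-integrates τ·dV/dt = −(V − V_rest) + R·I and emits a discrete spike event whenever V crosses a threshold. The function name and every parameter value are hypothetical choices for illustration, not BrainChip's.

```python
def simulate_lif(input_current, dt=1e-3, tau=20e-3, v_rest=0.0,
                 v_threshold=1.0, v_reset=0.0, resistance=1.0):
    """Hypothetical LIF sketch: Euler-integrate
    tau * dV/dt = -(V - v_rest) + R * I, and return the time-step
    indices at which the neuron spiked."""
    v = v_rest
    spikes = []
    for step, current in enumerate(input_current):
        # Leak toward the resting potential while integrating the input drive.
        v += dt * (-(v - v_rest) + resistance * current) / tau
        if v >= v_threshold:
            spikes.append(step)  # emit a discrete spike event
            v = v_reset          # reset the membrane potential after firing
    return spikes


# A weak input never reaches threshold (steady state R*I = 0.5 < 1.0);
# a stronger input fires repeatedly, and more often as the drive increases.
print(simulate_lif([0.5] * 1000))
print(len(simulate_lif([1.5] * 200)), len(simulate_lif([3.0] * 200)))
```

The information is carried by *when* and *how often* the neuron fires rather than by a continuous output value, which is the key difference from a CNN activation.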

SNNs also require training, but the overhead is significantly lower, which makes in-field training practical where it would be impractical for a CNN. SNNs also need less computational muscle than CNNs, which reduces power and performance requirements.
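One commonly cited reason SNN computation can be cheaper is that spikes are sparse, binary events: a neuron only does work when an input actually fires, and adding a weight replaces a multiply-accumulate. The sketch below counts operations for a conventional dense layer versus a hypothetical event-driven layer on the same 100-input, 10-neuron example with 5 active inputs. It is a simplified illustration of the general principle, not a model of Akida's hardware; the function names, weights, and spike pattern are invented for the example.

```python
def dense_layer(inputs, weights):
    """Conventional layer: multiply-accumulate every input with every weight."""
    ops = 0
    outputs = []
    for row in weights:
        acc = 0.0
        for x, w in zip(inputs, row):
            acc += x * w
            ops += 1
        outputs.append(acc)
    return outputs, ops


def event_driven_layer(spike_indices, weights):
    """Event-driven layer: for each input spike, add the matching weight
    to every output neuron (additions only, and only for active inputs)."""
    ops = 0
    outputs = [0.0] * len(weights)
    for i in spike_indices:
        for n, row in enumerate(weights):
            outputs[n] += row[i]
            ops += 1
    return outputs, ops


spikes = [3, 17, 42, 58, 90]  # 5 of 100 inputs fired this time step
inputs = [1.0 if i in spikes else 0.0 for i in range(100)]
weights = [[((n * 100 + i) % 7) * 0.1 for i in range(100)] for n in range(10)]

dense_out, dense_ops = dense_layer(inputs, weights)
event_out, event_ops = event_driven_layer(spikes, weights)
print(dense_ops, event_ops)  # 1000 50
```

Both layers produce the same outputs here, but the event-driven version does 50 additions instead of 1,000 multiply-accumulates; the savings grow as input activity gets sparser.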

Closer Look at the NSoC

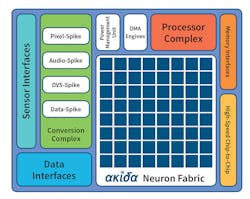

BrainChip’s Akida NSoC (Fig. 2) includes a conventional processor, so the chip can be used as a standalone device. The processor handles peripherals and communications, while the rest of the chip is dedicated to SNN support. Communication interfaces include PCI Express, USB 3.0, Ethernet, CAN, and serial ports. The Akida NSoC has 1.2 million neurons and 10 billion synapses, and it can handle training as well as inference chores.

2. BrainChip’s Akida NSoC is a self-contained chip with a conventional processor plus the Akida neuron fabric.

The system supports a range of sensor inputs, including analog, digital, audio, pixel, and dynamic-vision-sensor (DVS) data. A DVS is a camera that sends only the changes that occur within a frame rather than the entire frame, which is handy for identifying objects and gestures. The NSoC’s DMA engine handles these inputs, which feed the data-to-spike converters (DSCs); the DSCs in turn deliver streams of spikes to the SNN models stored in the neural-network fabric. A number of common DSCs are built into the hardware, including support for graphical pixel data, audio data, and DVS data.
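To make the DVS idea concrete, here is a minimal sketch of change-based spike encoding. Assumption: a real DVS works asynchronously per pixel on log intensity, whereas this simplified stand-in differences two ordinary frames and emits an ON or OFF event only for pixels whose brightness changed by more than a threshold. The function name, threshold, and event tuple format are hypothetical and are not the format used by Akida's built-in DSCs.

```python
def frame_to_events(prev_frame, frame, threshold=10):
    """Hypothetical DVS-style encoder: return (row, col, polarity) events
    only for pixels whose change meets the threshold; unchanged pixels
    produce nothing."""
    events = []
    for r, (prev_row, row) in enumerate(zip(prev_frame, frame)):
        for c, (old, new) in enumerate(zip(prev_row, row)):
            delta = new - old
            if delta >= threshold:
                events.append((r, c, +1))  # brightness increased: ON event
            elif delta <= -threshold:
                events.append((r, c, -1))  # brightness decreased: OFF event
    return events


prev = [[100, 100, 100],
        [100, 100, 100]]
cur = [[100, 180, 100],
       [40, 100, 105]]   # the +5 change at (1, 2) is below threshold
events = frame_to_events(prev, cur)
print(events)  # [(0, 1, 1), (1, 0, -1)]
```

Only two of the six pixels generate any data, which is why DVS input suits spike-based processing of moving objects and gestures.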

A high-speed serial chip-to-chip interface can link up to 1024 chips together into a larger network. Data and spikes flow through these connections, and each chip needs only about a half-dozen serial links. No additional switches are required, and a unified address system spans the multichip complex.
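BrainChip hasn't published the format of its unified address system, so the following is only a hypothetical sketch of how a flat address space across a multichip complex could work: pack a chip ID and a local neuron index into one integer. With 1024 chips (10 bits) and 1.2 million neurons per chip (21 bits, since 2^20 = 1,048,576 is too small), every neuron fits in a 31-bit address. The constants and function names are illustrative assumptions.

```python
CHIP_BITS = 10              # 2**10 = 1024 chips
NEURON_BITS = 21            # 2**21 = 2,097,152 >= 1.2 million neurons per chip
NEURONS_PER_CHIP = 1_200_000
MAX_CHIPS = 1 << CHIP_BITS


def global_address(chip_id, neuron_id):
    """Hypothetical scheme: chip ID in the high bits, local neuron index in the low bits."""
    if not 0 <= chip_id < MAX_CHIPS:
        raise ValueError("chip_id out of range")
    if not 0 <= neuron_id < NEURONS_PER_CHIP:
        raise ValueError("neuron_id out of range")
    return (chip_id << NEURON_BITS) | neuron_id


def split_address(address):
    """Recover (chip_id, neuron_id) from a packed global address."""
    return address >> NEURON_BITS, address & ((1 << NEURON_BITS) - 1)


total_neurons = MAX_CHIPS * NEURONS_PER_CHIP
print(total_neurons)  # 1228800000
print(split_address(global_address(1023, 1_199_999)))  # (1023, 1199999)
```

The total also shows where the article's "1.2 billion neuron" multichip figure comes from: 1024 chips × 1.2 million neurons ≈ 1.23 billion.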

Though pricing and final configurations haven’t been set, the cost per chip is expected to be on the order of $10 in quantity. Likewise, power requirements are comparable to those of a conventional SoC, allowing SNNs to operate at the edge in an industrial Internet of Things (IIoT) node or as standalone systems.

BrainChip considered a range of design aspects when creating its architecture. The fixed neuron model allows for more compact memory (on the order of 6 MB) as well as programmable training and firing thresholds. The neural processor cores, which are optimized to perform convolutions, are fully connected via the fabric, and a global spike bus connects to all of the cores.
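As a rough illustration of what "convolution" plus "programmable firing threshold" can mean in a spiking core, here is a minimal sketch of one time step of an event-driven 2D convolution. Each input spike scatters the kernel into a grid of membrane potentials, and any neuron at or above the threshold fires and resets. This is a generic technique chosen for illustration; the article doesn't describe how Akida's cores actually implement convolution, and the function name, kernel, and threshold are hypothetical.

```python
def spiking_conv_step(potentials, input_spikes, kernel, threshold):
    """One time step of a hypothetical event-driven 2D convolution.

    potentials:   2D list of membrane potentials (updated in place)
    input_spikes: list of (row, col) coordinates of input spikes
    kernel:       odd-sized square 2D list of weights
    threshold:    programmable firing threshold
    Returns the (row, col) output neurons that fired, in row-major order.
    """
    rows, cols = len(potentials), len(potentials[0])
    k = len(kernel) // 2
    for r, c in input_spikes:
        # Work is done only around locations where an input spike occurred.
        for dr in range(-k, k + 1):
            for dc in range(-k, k + 1):
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < cols:
                    potentials[rr][cc] += kernel[dr + k][dc + k]
    fired = []
    for rr in range(rows):
        for cc in range(cols):
            if potentials[rr][cc] >= threshold:
                fired.append((rr, cc))
                potentials[rr][cc] = 0.0  # reset after firing
    return fired


potentials = [[0.0] * 5 for _ in range(5)]
kernel = [[0.5] * 3 for _ in range(3)]
fired = spiking_conv_step(potentials, [(2, 2), (2, 3)], kernel, threshold=1.0)
print(fired)  # [(1, 2), (1, 3), (2, 2), (2, 3), (3, 2), (3, 3)]
```

Only neurons that receive input from both spikes reach the threshold of 1.0; raising or lowering the threshold changes how selective the feature map is, which is one reason a programmable threshold is useful.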

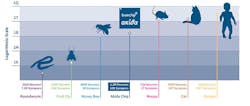

3. The Akida NSoC is moving up the ladder in terms of complexity, with 1.2 million neurons and 10 billion synapses per chip and about 1.2 billion neurons and 10 trillion synapses in a 1024-chip multichip system.

Neural networks have come a long way, but there’s still lots of room for improvement (Fig. 3). Nevertheless, they have already made significant applications practical. Neuromorphic computing using SNNs targets applications like vision systems, cybersecurity, and even financial systems. Multiple chips are needed to hit the 1.2 billion neuron mark, but a single chip is sufficient to handle many SNN chores such as video processing.

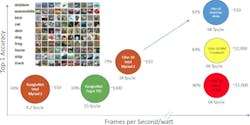

The chips aren’t available yet, but BrainChip has tested the design in simulation. The results suggest the chip will fare very well on common datasets like CIFAR-10, a standard benchmark for neural-network hardware and software (Fig. 4). In simulation, the chip processes the CIFAR-10 model at 6,000 frames/s/W.
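To put the simulated efficiency figure in more familiar units, its reciprocal gives the energy spent per frame. This is simple arithmetic on the quoted 6,000 frames/s/W, not an additional measurement from BrainChip:

$$
E_{\text{frame}} = \frac{1\ \text{W}}{6000\ \text{frames/s}} = \frac{1\ \text{J/s}}{6000\ \text{frames/s}} \approx 1.67 \times 10^{-4}\ \text{J/frame} \approx 167\ \mu\text{J per frame}
$$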

4. The Akida performs very well on the popular CIFAR-10 dataset, whose images cover 10 common object classes, while using less power and costing significantly less than the alternatives compared.

The chip is supported by BrainChip’s Akida Development Environment. This Python-based platform can generate models for software platforms like the Akida Execution Engine as well as for the Akida NSoC itself. The system will handle both supervised and unsupervised learning.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design, focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF, and I work with a great team of editors to provide engineers, programmers, developers, and technical managers with interesting and useful articles and videos on a regular basis.


I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Masters in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I do a bit of PHP programming for Drupal websites. I have posted a few Drupal modules.

I still get hands-on with software and electronic hardware; some of this can be found in our Kit Close-Up video series. You can also see me in many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.