Multi-Terabit Interconnect Solutions for AI/ML Data Centers

What you’ll learn:

- How data centers are evolving to meet the challenges of AI/ML computing.

- Pros and cons of common copper and optical interconnect solutions.

- Benefits of RF transmission over plastic cable (e-Tube) for multi-terabit speeds.

Even before the emergence of AI, a cascade of digital innovations, from the IoT to cloud computing, generated unprecedented demand for data-center services. On top of that demand, there’s now an insatiable appetite for generative AI, large language models (LLMs), and other power-hungry AI workloads. As a result, artificial-intelligence/machine-learning (AI/ML) data centers are rapidly transitioning to multi-terabit network transmission speeds to keep pace with the escalating compute resources these workloads require.

While today’s data centers largely rely on 400-Gb/s Ethernet (400G) network devices, Dell’Oro Group predicts that most AI backend ports will be 800G by 2025.¹ By 2027, most AI GPU clusters are expected to run at 1.6T. Higher-speed networking technologies consume more power and generate more heat, leading data-center operators to seek out more energy-efficient, lower-cost infrastructure solutions.

One area of innovation is in the high-speed interconnects that transfer data, applications, and workloads across AI accelerators, switches, servers, and other components. The traditional options are copper and optical interconnects, each of which provides significant benefits as well as challenges.

A more recent solution is RF transmission over plastic cable, or e-Tube. It provides a compelling third alternative, especially for GPU cluster scale-up in the backend, where terabit-plus speeds will be needed to support AI/ML workloads.

Tradeoffs with Copper and Optical Interconnects

Copper direct attach cables (DACs) have long been the default choice for network devices up to 400G. Copper interconnects are known for being simple, inexpensive, reliable, and a good fit for short-reach applications, such as top-of-rack switch connections. However, the limitations of copper interconnects become evident as network speeds ramp to 800G and eventually 1.6T Ethernet and beyond.

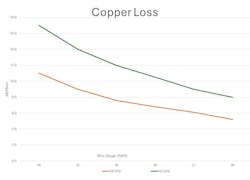

Copper cables suffer significant signal loss (Fig. 1) that grows with frequency, so cable reach shrinks as speeds increase. That creates problems even for the short cable lengths required in in-rack interconnect use cases. While the cable length could technically be extended with thicker copper gauges, the DAC would then become too thick, heavy, and rigid to deploy.
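
To make the loss-versus-speed relationship concrete, here is a minimal sketch built on a common first-order model of cable insertion loss: a conductor (skin-effect) term that grows with the square root of frequency plus a dielectric term that grows linearly with frequency. For a PAM4 lane (2 bits per symbol), the Nyquist frequency is one quarter of the bit rate, so doubling the lane rate doubles the frequency the cable must carry. The coefficients `K_SKIN` and `K_DIEL`, the loss budget `LOSS_BUDGET_DB`, and the helper names are hypothetical illustration values, not measured data for any real DAC, and FEC and encoding overhead are ignored.

```python
import math

# Hypothetical loss coefficients for an illustrative copper twinax pair.
# These are NOT measured values for any real DAC.
K_SKIN = 0.6   # dB per meter per sqrt(GHz): conductor (skin-effect) loss
K_DIEL = 0.05  # dB per meter per GHz: dielectric loss

LOSS_BUDGET_DB = 16.0  # hypothetical allowed cable loss at Nyquist, in dB


def pam4_nyquist_ghz(lane_gbps: float) -> float:
    """Nyquist frequency of a PAM4 lane: 2 bits/symbol, Nyquist = baud / 2."""
    baud_gbd = lane_gbps / 2.0
    return baud_gbd / 2.0


def loss_db_per_m(freq_ghz: float) -> float:
    """First-order insertion-loss model: skin effect ~ sqrt(f), dielectric ~ f."""
    return K_SKIN * math.sqrt(freq_ghz) + K_DIEL * freq_ghz


def max_reach_m(lane_gbps: float) -> float:
    """Longest cable whose loss at Nyquist still fits within the loss budget."""
    return LOSS_BUDGET_DB / loss_db_per_m(pam4_nyquist_ghz(lane_gbps))


if __name__ == "__main__":
    for lane in (50, 100, 200):  # nominal per-lane rates in Gb/s
        f = pam4_nyquist_ghz(lane)
        print(f"{lane:>3} Gb/s lane: Nyquist {f:5.1f} GHz, "
              f"{loss_db_per_m(f):4.2f} dB/m, reach ~{max_reach_m(lane):4.2f} m")
```

With any positive coefficients, the reach that fits a fixed loss budget falls each time the lane rate doubles, which is the trend the article attributes to copper; the absolute numbers printed here mean nothing beyond the assumed inputs.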

Copper has, in short (pun intended), reached its physical limits. Thus, enterprises and hyperscalers have turned to optical interconnects, including active optical cables (AOCs), for many AI-related workloads. Since fiber optics transmit data using light signals, they can transfer data faster and over longer distances than copper, with minimal signal loss. In addition, optical interconnects are significantly thinner and lighter than copper cables.

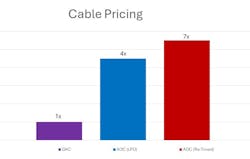

However, optical interconnects are also much more complex, power-hungry, and expensive than copper interconnects because they require costly electrical and optical assembly components for electrical-to-optical conversions. Compared to copper, optical interconnects can be up to 7X more expensive (Fig. 2).

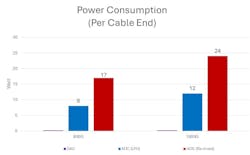

Optical interconnects also add significant power consumption (Fig. 3). Such cost and power issues make it impractical to rely entirely on optical solutions for the transition to multi-terabit speeds.

To address some of these concerns, innovations such as co-packaged optics have emerged that offer better energy efficiency and higher density. Unfortunately, co-packaged optics still suffer from the same cost, heat, power, and reliability challenges as traditional optical solutions. While they may be a workable solution for the middle layer of switches, co-packaged optics remain cost- and power-prohibitive for high-volume in-rack, adjacent-rack, and backplane applications.

e-Tube: A Better Third Alternative Using RF Transmission

Given the physical limitations of copper and the power draw and expense of optical interconnects, there is significant interest in low-power, low-latency, cost-efficient cabling alternatives that scale to multi-terabit speeds. One such option is e-Tube technology, a scalable interconnect platform that transmits RF data through a dielectric waveguide made of common plastic material.

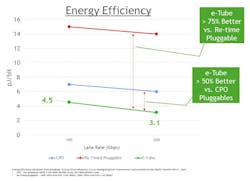

e-Tube cables weigh 80% less and are 50% less bulky than copper cables. They don’t suffer from the high-frequency loss that limits copper, so the same e-Tube core can be used for 1.6T, 3.2T, and even faster future cable speeds. Because e-Tube is an electrical technology that doesn’t require power-hungry, expensive optical assemblies, the cables are roughly 50% more energy-efficient than co-packaged optics and roughly 75% more energy-efficient than traditional retimed optics (Fig. 4).
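
Energy-efficiency comparisons like these are easiest to reason about in energy per bit. Since 1 pJ/bit × 1 Tb/s = 10⁻¹² J/bit × 10¹² bit/s = 1 W, a link's power in watts equals its energy per bit in picojoules multiplied by its rate in terabits per second. The sketch below applies that identity. The 10-pJ/bit baseline for retimed optics is a hypothetical placeholder, and it assumes "X% more energy-efficient" means X% less energy per bit, which is one reading of the claim rather than a definition given in the article. Under that reading, the co-packaged optics (CPO) figure is implied by the two stated percentages.

```python
def link_power_w(pj_per_bit: float, rate_tbps: float) -> float:
    """Power in watts: (pJ/bit * 1e-12 J/pJ) * (Tb/s * 1e12 bit/s) = pJ/bit * Tb/s."""
    return pj_per_bit * rate_tbps


def reduced(pj_per_bit: float, percent_savings: float) -> float:
    """Energy per bit after an X% reduction (assumed reading of 'X% more efficient')."""
    return pj_per_bit * (1.0 - percent_savings / 100.0)


# Hypothetical baseline for a retimed optical link; not a measured figure.
RETIMED_OPTICS_PJ_PER_BIT = 10.0

# Article claims, read as energy-per-bit reductions (assumption):
#   e-Tube ~75% below retimed optics and ~50% below CPO.
etube_pj = reduced(RETIMED_OPTICS_PJ_PER_BIT, 75)
cpo_pj = etube_pj / (1.0 - 0.50)  # CPO energy per bit implied by the 50% claim

if __name__ == "__main__":
    links = [("retimed optics", RETIMED_OPTICS_PJ_PER_BIT),
             ("CPO (implied)", cpo_pj),
             ("e-Tube", etube_pj)]
    for name, pj in links:
        for rate in (1.6, 3.2):  # cable speeds mentioned in the article, in Tb/s
            print(f"{name:15s} {rate} Tb/s: {link_power_w(pj, rate):5.1f} W")
```

The identity also shows why higher speeds raise power: at a fixed energy per bit, doubling the rate from 1.6T to 3.2T doubles the watts per link, so only a lower pJ/bit keeps power flat.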

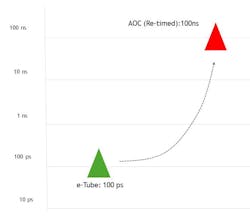

e-Tube latency is measured in picoseconds, three orders of magnitude lower than that of traditional optical cables (Fig. 5).
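
"Three orders of magnitude" is a factor of 10³, the same factor that separates a picosecond from a nanosecond. The short sketch below only makes that ratio explicit; the 20-ps and 20-ns latencies are hypothetical illustration values, not measurements taken from Fig. 5.

```python
import math

PS = 1e-12  # one picosecond, in seconds
NS = 1e-9   # one nanosecond, in seconds


def orders_of_magnitude(slower_s: float, faster_s: float) -> float:
    """Base-10 logarithm of the ratio between two latencies."""
    return math.log10(slower_s / faster_s)


# Hypothetical example values chosen only to illustrate the scale:
# tens of picoseconds versus tens of nanoseconds.
etube_latency_s = 20 * PS
optical_latency_s = 20 * NS

if __name__ == "__main__":
    gap = orders_of_magnitude(optical_latency_s, etube_latency_s)
    print(f"latency gap: {gap:.1f} orders of magnitude")
```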

For in-rack and adjacent-rack links, e-Tube cables offer lower loss, longer reach, and better power efficiency than copper interconnects at a similar cost. Because they are designed and tested to multi-source agreement (MSA) specifications, the cables can be deployed within the existing data-center network equipment ecosystem.

e-Tube cables leverage mature semiconductor process technologies and cable manufacturing equipment to minimize capital expenditure for cable makers. With 50% less bulk than copper, the thin cables help eliminate rack congestion and keep installations easy to service, making them a solid choice for terabit in-rack and adjacent-rack links up to 7 meters.

Enterprises and hyperscalers need to weigh the advantages and limitations of replacing copper with optical technologies. For in-rack and adjacent-rack deployments in hyperscale cloud data centers, AI/ML GPU buildouts, and high-performance computing clusters, e-Tube offers a better option. Although there’s no one-size-fits-all solution, e-Tube is a promising alternative to emerging optical interconnect solutions for AI/ML applications in data centers (Fig. 6).

Reference

1. Dell’Oro Group, “AI Networks for AI Workloads,” 2023 (paid report).

About the Author

David Kuo

Associate Vice President, Product Marketing and Business Development, Point2 Technology

David Kuo is a semiconductor product marketing and business development executive with over 20 years of experience in the networking, consumer, and mobile markets at companies like Point2 Technology, Mythic, SiBEAM, and Silicon Image. He’s a technology expert with experience in mixed-signal SoCs, connectivity ICs, AI/ML processors/accelerators, software, and tools. David holds a bachelor’s in electrical engineering from the University of Nevada, Reno.