Broadcom Bets on 3.5D Packaging Technology to Build Bigger AI Chips

The semiconductor companies and startups on the front lines of the AI chip market are competing over scale as much as anything else. They’re all racing to roll out giant graphics processing units (GPUs) and other AI chips to handle the types of large language models (LLMs) at the heart of OpenAI’s ChatGPT and other state-of-the-art algorithms, which are becoming more computationally intense and power-hungry to train and run.

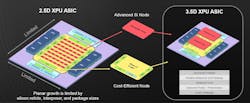

The most advanced AI chips in data centers can no longer fit on one monolithic slab of silicon. Instead, they consist of chiplets lashed together with 2.5D or 3D advanced packaging so that the whole assembly mimics one large chip as closely as possible.

Broadcom is trying to build even bigger AI chips with its 3.5D packaging technology that was introduced last month. By stacking accelerator chips with 3D integration before placing them next to each other with 2.5D, the Extreme Dimension System in Package (XDSiP) platform can accommodate more than 6,000 mm² of silicon in a package. The company said it can put the 3D-stacked accelerators and other chiplets on a silicon interposer before surrounding them with up to 12 stacks of high-bandwidth memory (HBM).

One of the core innovations is that Broadcom uses face-to-face 3D chip-stacking technology based on hybrid bonding, which connects the pillars of copper wiring on the front of each silicon die directly without solder bumps. The new arrangement makes it possible to create thousands of connections per square millimeter, slinging signals between 3D-stacked silicon dies 7X faster than currently possible.

While Broadcom isn’t competing directly with NVIDIA’s GPUs at the heart of the most advanced data centers, it helps build custom accelerator chips—also called XPUs (Fig. 1)—for Google and other tech giants. They’re all building vast clusters of servers that can cost billions of dollars and come with tens of thousands of GPUs and other AI accelerators to train their state-of-the-art models on vast amounts of data. The largest clusters are growing to as many as a million AI accelerators, said Broadcom.

According to the company, a majority of its customers, in what it called the consumer AI space, are working with the XDSiP technology. The first batch of mass-produced 3D-stacked accelerators is expected by early 2026.

The Move to 3.5D Packaging Technology for Multi-Die Chip Designs

As the semiconductor industry falls further behind on Moore’s Law, the usual improvements in power, performance, area, and cost that tend to come with every new process node are waning. To stay a step ahead of the rising computational demands of AI, chip engineers are now moving on from all-in-one monolithic SoCs, which are becoming increasingly costly to build at the most advanced process nodes.

Instead, semiconductor companies are breaking apart ever-larger chip designs into several smaller and more modular building blocks that can be reassembled in a system-in-package (SiP) to increase the amount of silicon—and, thus, the number of transistors and logic inside of it. By slicing a heterogeneous SoC into several functional parts, companies can bind them together with 2.5D or 3D packaging technologies that aren’t bound by the physical limits of what can be crammed in a single slab of silicon.

“Advanced packaging is critical for next-generation XPU clusters as we hit the limits of Moore’s Law,” pointed out Frank Ostojic, senior vice president and general manager of the custom ASIC business at Broadcom.

To do the integration, Broadcom said it plans to use TSMC's CoWoS (Chip on Wafer on Substrate) technology for the 2.5D placement of the accelerators and other chips on the package laterally, while using TSMC’s 3D packaging technologies to stack the silicon dies vertically. Widely used in the latest AI accelerators for data centers, CoWoS entails stacking chiplets on a colossal slab of silicon called a silicon interposer. An interposer is constructed with short, dense interconnects that move signals around as if everything is on one large SoC.

In 2.5D, these modular chips are placed on the package with very small balls of solder—called micro bumps, in the parlance of the semiconductor industry—that are densely packed on the surface of the silicon die.

Today, the most advanced AI chips can cram up to 2,500 mm² of silicon and up to eight HBM stacks into a package when assembled with 2.5D packaging. That’s approximately 3X the amount of silicon in NVIDIA’s current-generation AI chip, the Hopper. The GPU at the heart of it is manufactured as close as possible to the reticle limit, the maximum amount of silicon that can be fabricated on a single chip. It currently comes out to around 800 mm².
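
The area comparison can be checked with some quick arithmetic. The sketch below uses only figures quoted in this article (around 800 mm² for the reticle limit, 2,500 mm² for 2.5D packages, and the "more than 6,000 mm²" Broadcom cites for XDSiP). The constant and function names are illustrative, not from any vendor tool.

```python
# Area figures quoted in the article, in square millimeters.
RETICLE_LIMIT_MM2 = 800   # approximate size of a reticle-limited die
MAX_2_5D_MM2 = 2_500      # silicon in today's largest 2.5D packages
MAX_3_5D_MM2 = 6_000      # lower bound Broadcom cites for XDSiP


def reticle_equivalents(package_mm2, reticle_mm2=RETICLE_LIMIT_MM2):
    """Return how many reticle-sized dies' worth of silicon a package holds."""
    return package_mm2 / reticle_mm2


ratio_2_5d = reticle_equivalents(MAX_2_5D_MM2)
ratio_3_5d = reticle_equivalents(MAX_3_5D_MM2)
step_up = MAX_3_5D_MM2 / MAX_2_5D_MM2

print(f"2.5D package: {ratio_2_5d:.1f}x the reticle limit")
print(f"3.5D package: {ratio_3_5d:.1f}x the reticle limit")
print(f"3.5D vs. 2.5D: {step_up:.1f}x the silicon")
```

With these inputs, a 2.5D package holds a little over three reticle-sized dies' worth of silicon, which matches the "approximately 3X" figure, while the 3.5D figure works out to at least 7.5 reticle equivalents.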

But as AI becomes more computationally intense, companies are running out of real estate. To solve the limitations, the movers and shakers in the semiconductor industry are adding another dimension to these chips by stacking logic chips with hybrid bonding before spreading out all of the components on high-speed interposer circuitry. One of the first AI chips based on 3.5D packaging is AMD’s latest 3D-stacked accelerator chip, the Instinct MI300A, which is becoming one of the biggest rivals to NVIDIA’s GPU-CPU superchips.

Bringing everything even closer together pays dividends in speed, latency, and power. Since constantly slinging signals from one side of the interposer to the other can be relatively power hungry, reducing the distance between the chiplets can yield power savings. Vertically stacking the silicon dies also saves real estate in the package, facilitating the placement of more chiplets and, thus, more transistors in the same area.

Hybrid Bonding: Where It Fits into the Future of 3D Chip Stacking

Broadcom is trying to usher in the next generation of AI superchips with its 3.5D packaging technology.

Before pulling together all of the heterogeneous dies with its XDSiP technology, the company said the process starts by pulling apart every function in the chip design and organizing them into chiplets. The main benefit of the process—also known as system technology co-optimization (STCO)—is that each chiplet can use the fabrication technology that best fits its functionality, giving engineers a lot more flexibility to optimize the power, area, performance, and cost of the chiplets, said Ostojic.

In most cases, Broadcom plans to partition the accelerator cores or other processing units at the heart of the system, depicted in Figure 2 in red, into any number of silicon dies. These logic chips can contain general-purpose CPU cores or high-performance AI accelerators, ranging from GPUs to tensor processing units (TPUs), or other custom IP. When it comes to these chiplets, it’s best to use process technologies on the cutting edge of Moore’s Law since they handle the most intense computations.

The rest of the logic is relocated on a separate die, visible in yellow in the diagram, that contains everything from the I/O—including PHY-based die-to-die interconnects, high-speed SerDes, and HBM memory interfaces—to the SRAM that acts as the processor’s cache memory. These components rarely get any benefit from moving to the most advanced nodes, so it makes more sense to manufacture them on more mature and affordable process technologies. These functions can also be placed on the same chiplet.
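
The partitioning described above can be sketched as a simple mapping from functional blocks to process-node classes. This is only an illustration of the idea: the `Block` class, the `benefits_from_scaling` flag, and the two node labels are hypothetical and do not represent Broadcom's methodology or tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Block:
    name: str
    benefits_from_scaling: bool  # hypothetical flag: does a newer node help?


def assign_node(block):
    """Pick a node class for a block (illustrative rule only)."""
    return "leading-edge" if block.benefits_from_scaling else "mature"


# Functional blocks named in the article, with assumed scaling flags.
blocks = [
    Block("accelerator cores", True),
    Block("die-to-die PHY", False),
    Block("SerDes", False),
    Block("HBM interface", False),
    Block("SRAM cache", False),
]

# Group block names by the node class they were assigned to.
partition = {"leading-edge": [], "mature": []}
for block in blocks:
    partition[assign_node(block)].append(block.name)

for node, names in partition.items():
    print(f"{node}: {', '.join(names)}")
```

A real flow would weigh power, area, performance, and cost per block rather than a single yes/no flag, but the outcome has the same shape: compute lands on the newest node, while I/O and cache stay on cheaper ones.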

Broadcom uses hybrid bonding to stack the smaller accelerator chips on top of the larger chiplets for connectivity and memory. In general, these chips are stacked on top of each other facing the same direction—also called face-to-back (F2B)—before being bonded together. The chiplets communicate with each other using through-silicon vias (TSVs) that act as elevator shafts inside the 3D stack, ferrying power, signals, and data between them (Fig. 3).

According to the company, it can create more direct die-to-die interconnects by stacking the silicon dies face-to-face (F2F) before bonding them together directly, reducing the distances between the computing, memory, and I/O chips in the package and removing the TSVs between them. The arrangement creates a high-density interconnect that can sling 7X more signals between silicon dies with minimal noise and stronger mechanical robustness. These bonded links also consume 10X less power than the PHYs that physically link chiplets on the plane of the silicon interposer.

Broadcom said its special approach to custom chip design and the IP inside its 3.5D packaging technology enable efficient, correct-by-construction design of all power, clock, and signal interconnects in the 3D stack.

The 3D-stacked accelerator is then placed on the silicon interposer with 2.5D packaging technology before being surrounded by the other chiplets, including the HBM that feeds the accelerator as fast as possible with data.

While the I/O chips under the AI accelerators are all about communicating internally with the HBM and other chiplets in the package, multi-protocol connectivity chiplets could be added that communicate externally with the other accelerators, processors, and memory chips in the server or spread out around the data center. These I/O chips, located on the north and south sides of the package in Figure 2, can come with building blocks of IP for Ethernet, PCI Express (PCIe), and Compute Express Link (CXL).

“By stacking chip components vertically, Broadcom's 3.5D platform enables chip designers to pair the right fabrication processes for each component while shrinking the interposer and package size,” said Ostojic. It thus reduces the risk of warping, which is a huge challenge in chiplet-based designs. Heat created by all of the building blocks can cause different materials in the package to expand at different rates, warping them in ways that may impact the processor’s performance or cause it to malfunction.
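
To get a feel for why mismatched expansion matters, the sketch below applies the standard linear expansion relation, delta_L = alpha * L * delta_T, to two materials joined over the same span. Every input is an assumption chosen for illustration: roughly 2.6 ppm/°C is a commonly quoted value for silicon, while the substrate coefficient, the span, and the temperature rise are round numbers, not Broadcom data.

```python
# Illustrative inputs only: none of these values come from Broadcom.
CTE_SILICON_PPM = 2.6     # approx. thermal expansion of silicon, ppm per deg C
CTE_SUBSTRATE_PPM = 15.0  # assumed value for an organic package substrate
SPAN_MM = 50.0            # assumed length over which the materials are joined
DELTA_T_C = 60.0          # assumed temperature rise in deg C


def expansion_um(cte_ppm, length_mm, delta_t_c):
    """Free linear expansion, delta_L = alpha * L * delta_T, in micrometers."""
    return cte_ppm * 1e-6 * length_mm * delta_t_c * 1000.0


silicon_um = expansion_um(CTE_SILICON_PPM, SPAN_MM, DELTA_T_C)
substrate_um = expansion_um(CTE_SUBSTRATE_PPM, SPAN_MM, DELTA_T_C)
mismatch_um = substrate_um - silicon_um

print(f"Silicon grows by   {silicon_um:.1f} um")
print(f"Substrate grows by {substrate_um:.1f} um")
print(f"Mismatch over {SPAN_MM:.0f} mm: {mismatch_um:.1f} um")
```

Because the mismatch scales linearly with the span, a smaller interposer and package reduce the absolute difference the assembly has to absorb, which is consistent with the point about shrinking package size.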

Can Broadcom Deal with the Complexities of 3D Chip Packaging?

Building these big AI chips isn’t a trivial task. Broadcom said it’s incorporating innovations in everything from process technology and advanced packaging to design and testing to deal with the immense complexity of these superchips. Significant defects in any one part of the multi-die system can be catastrophic, and as semiconductor companies cram more and more silicon dies in the package, the risks are on the rise. On top of that, pinpointing the root cause of a problem is much more difficult in 3D configurations of chiplets.

While 3D silicon stacking gives engineers more ways to optimize performance, power, area, and other metrics, it opens the door to a wide range of design difficulties. One of the tougher challenges is routing signals rapidly and reliably to all of these building blocks in the package while reducing electromagnetic interference (EMI) and other types of signal noise. Delivering power smoothly and efficiently to everything is also more difficult due to the increasingly complex arrangements of chiplets and the growing power demands of modern AI chips.

Yet another problem relates to thermal management, which means managing the heat caused by cramming all of these chiplets so close together in the package. Though stacking silicon dies keeps everything in close proximity, it becomes trickier to remove the heat between them before it saps the processor’s performance. What’s more, dissipating heat from any one of the components can negatively impact the thermal situation in the silicon dies stacked on top of or placed underneath it.

While leveraging TSMC’s most advanced process and packaging technologies in XDSiP, Broadcom said it’s also bringing a lot of know-how in designing and testing complex 3D-stacked chips to the table. The company is putting it all to the test with the development of the first XPU based on its F2F 3.5D technology. The chip, composed of four compute tiles stacked on top of one large I/O chiplet surrounded by six HBM modules, uses TSMC's advanced process nodes and CoWoS technology for 2.5D packaging.

The company is also building the 3.5D packaging technology around industry-standard EDA tools that are getting much better at validating the operation of all components in the package as well as everything in between.

Further out, Fujitsu is adopting Broadcom’s 3.5D packaging technology to build its latest high-performance server CPU called Monaka. The processor will feature 144 Arm-based CPU cores fabricated on TSMC’s N2 node, which will be one of the most advanced process technologies on the market, if not the most advanced, when it enters mass production in 2025. These compute dies are then stacked on top of cache memory chiplets based on 5 nm that, in turn, will be placed on a silicon interposer with CoWoS.

The SRAM that serves the processor’s cache memory isn’t scaling at the same pace as the logic at the heart of high-performance chips. Broadcom said it makes more sense—both in terms of cost and complexity—to put the memory and computing into separate chiplets, each fabricated on the best process technology for the job, before binding them together in 3D. Fujitsu plans to introduce Monaka in 2027.

About the Author

James Morra

Senior Editor

James Morra is the senior editor for Electronic Design, covering the semiconductor industry and new technology trends, with a focus on power electronics and power management. He also reports on the business behind electrical engineering, including the electronics supply chain. He joined Electronic Design in 2015 and is based in Chicago, Illinois.