Memory-Centric Compute Speeds Searches for Machine-Learning Apps

Compute architectures are changing radically, from attaching memory over the PCI Express interface with Compute Express Link (CXL) to integrating machine-learning and artificial-intelligence (ML/AI) accelerators with processors. Smart storage often pairs solid-state drives (SSDs) with FPGAs, but bringing intelligence closer to main memory can deliver even larger performance gains.

I talked with Macronix's Jim Yastic about the company's memory-centric hardware approach that accelerates in-memory search. Macronix FortiX blends targeted computational capability with storage that typically includes Macronix's NAND and NOR flash memory (Fig. 1). Merging computational features with memory allows for increased parallelism that can significantly boost performance while reducing power requirements, because data movement is minimized.

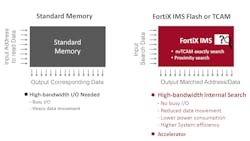

FortiX IMS (in-memory search) is designed to handle search chores, including those that augment ML/AI applications (Fig. 2). It can do more than the conventional ternary content-addressable memory (TCAM) often found in caching systems such as network switches and serial-attached SCSI (SAS) disk controllers: FortiX IMS can handle proximity searches, not just exact or masked matches.
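To make the difference between masked matching and proximity search concrete, here is a minimal Python sketch over 8-bit keys. It is not Macronix's algorithm or API: the names `TernaryEntry`, `ternary_match`, and `proximity_search` are hypothetical, and Hamming distance is used as one possible proximity metric because the article does not say which metric FortiX IMS uses. A hardware TCAM compares all rows in parallel in a single lookup; the sketch loops serially.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TernaryEntry:
    """One TCAM-style row: bits where mask is 1 must match; 0 means "don't care"."""
    value: int
    mask: int


def ternary_match(table: list[TernaryEntry], key: int) -> list[int]:
    """Return indices of all rows whose cared-about bits equal the key's bits.

    A real TCAM typically reports only the highest-priority (lowest-index) hit;
    this sketch returns every hit, or an empty list when nothing matches.
    """
    return [i for i, e in enumerate(table) if ((key ^ e.value) & e.mask) == 0]


def hamming(a: int, b: int) -> int:
    """Number of bit positions in which two keys differ."""
    return bin(a ^ b).count("1")


def proximity_search(stored: list[int], query: int, k: int = 1) -> list[tuple[int, int]]:
    """Return the k stored keys nearest the query as (index, distance) pairs.

    Unlike ternary matching, this still yields the closest entries when
    nothing matches exactly. Ties are broken by index.
    """
    scored = [(i, hamming(query, v)) for i, v in enumerate(stored)]
    return sorted(scored, key=lambda p: (p[1], p[0]))[:k]


if __name__ == "__main__":
    table = [
        TernaryEntry(value=0b1010_0000, mask=0b1111_0000),  # matches 1010xxxx
        TernaryEntry(value=0b1100_1100, mask=0b1111_1111),  # exact 11001100 only
    ]
    print(ternary_match(table, 0b1010_0110))  # [0]
    print(ternary_match(table, 0b0110_0110))  # [] (no masked match at all)

    stored = [0b1100_1100, 0b1010_1010, 0b0000_1111]
    print(proximity_search(stored, 0b1100_1110, k=2))  # [(0, 1), (1, 3)]
```

The second ternary lookup finds nothing, whereas a proximity search always returns its nearest candidates. That property is what makes similarity-style lookups useful alongside ML/AI workloads.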

FortiX 3D memory technology builds on Macronix's advances in 3D memory: the company has demonstrated 96-layer 3D NAND and 32-layer 3D NOR solutions. Macronix can also provide the technology for integration into other applications.

On top of that, these systems deliver significant performance improvements. For example, FortiX IMS can search at 300 Gb/s while drawing only 300 mW, compared to 3.2 GB/s at 1 W for a host searching DRAM. And it does so using nonvolatile storage. That matters for the many applications in which the searched data rarely changes, because the data persists across power cycles, enabling instant-on operation.
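The quoted figures can be turned into throughput and energy-efficiency ratios with a little arithmetic. The Python sketch below takes the numbers exactly as stated, including their different units (gigabits per second for the in-memory search, gigabytes per second for the DRAM host), and converts the former at 8 bits per byte. It assumes the quoted power is the full draw during the search; the constant and function names are illustrative only.

```python
# Figures as quoted in the text. The in-memory search rate is stated in
# gigabits per second; the host DRAM rate is stated in gigabytes per second.
FORTIX_RATE_GBIT_S = 300.0
FORTIX_POWER_W = 0.300
HOST_DRAM_RATE_GBYTE_S = 3.2
HOST_POWER_W = 1.0

BITS_PER_BYTE = 8


def gbytes_per_joule(rate_gbyte_s: float, power_w: float) -> float:
    """Data searched per unit energy: (GB/s) / (J/s) = GB/J."""
    return rate_gbyte_s / power_w


# Convert the in-memory rate to GB/s so both sides use the same unit.
fortix_rate_gbyte_s = FORTIX_RATE_GBIT_S / BITS_PER_BYTE  # 37.5 GB/s
fortix_eff = gbytes_per_joule(fortix_rate_gbyte_s, FORTIX_POWER_W)  # 125 GB/J
host_eff = gbytes_per_joule(HOST_DRAM_RATE_GBYTE_S, HOST_POWER_W)  # 3.2 GB/J

print(f"throughput ratio: {fortix_rate_gbyte_s / HOST_DRAM_RATE_GBYTE_S:.1f}x")  # 11.7x
print(f"energy-efficiency ratio: {fortix_eff / host_eff:.1f}x")  # 39.1x
```

Under these assumptions, the in-memory search comes out roughly 11.7 times faster and about 39 times more energy efficient per gigabyte searched. If both rates were meant in the same unit, the constants can be adjusted and the ratios recomputed.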