Dealing with AI Disruption

This article is part of the TechXchange: Generating AI.

What you’ll learn:

- Challenges of using generative AI.

- Why generative AI is so disruptive.

- Where generative AI is headed.

Do you like the cute dark furry cat playing with a colorful Rubik’s Cube on the ocean (Fig. 1)? I found it while searching for “AI Playing Cards” at Dreamstime.com, where we get a lot of our stock images. The search engine obviously missed the point of my query, but it was a cute AI-generated image.

Instead of chasing down more card-playing AI images, I tried the description above at Craiyon.com. One of the results was the image shown in Figure 2. Though not necessarily as playful, it’s at least accurate to the description. Neither image is especially useful in general, unless you happen to be writing an article about generative artificial intelligence (AI), as I’m doing here.

I also tried out OpenAI’s Dall-E 3 (Fig. 3). Dozens of websites and tools, Dall-E and Craiyon among them, use generative AI and lots of training material to create images on demand.

I think one thing that’s captured everyone’s interest in generative AI is the ability to use it directly through online tools like ChatGPT or GitHub’s Copilot rather than indirectly. Almost everyone on the internet has likely used generative AI in some form as it creeps into our search engines, website sales tools, etc.

One interesting aspect about these generated images is that they’re free to use, although most require an annotation of where they were sourced. The other aspect is that right now those images can’t be copyrighted; hence, anyone can copy them.

Choosing a Disruptive AI Model

Generative AI covers a lot of ground, not just image creation. These tools tend to be based on large language models (LLMs) that use a tremendous amount of training material. While much of the input for using these models is text-based, it’s not a requirement. The inputs, outputs, and training data can take almost any form, from sensor data to audio and video streams. With so many options, one almost needs AI tools to discover the AI tools worth incorporating into a product or service, whether the AI is part of what the product does or is used to optimize it.

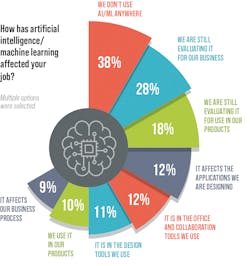

According to our recent Salary Survey results (Fig. 4), AI and machine-learning (ML) tools are being employed by only a small fraction of respondents, but that fraction is growing. While using tools like Dall-E and Midjourney might be easy for anyone, incorporating AI into products like self-driving cars or smartwatches is much more challenging and raises issues that a self-described AI artist doesn’t have to contend with, such as reliability and liability.

Developers must keep one factor in mind: Unlike digital logic, which has a 0 or 1 output, the output from almost any AI model (not just generative AI tools) is full of potentially unknown variations. The results aren’t necessarily optimum, or even valid, all the time.
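One common way to cope with output that may not be valid is to check it before acting on it. The following is a minimal sketch of that idea, not a recommended design: the `Prediction` type, the label set, the 0.80 threshold, and the `accept_or_fallback` helper are all hypothetical names and values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Prediction:
    """Hypothetical container for one model result."""
    label: str
    confidence: float  # expected to be a score in [0.0, 1.0]


# Assumed values for illustration only; a real product would derive these
# from its own requirements and validation data.
VALID_LABELS = {"pedestrian", "vehicle", "clear"}
MIN_CONFIDENCE = 0.80


def accept_or_fallback(pred: Prediction, fallback: str = "unknown") -> str:
    """Return the model's label only if it passes basic sanity checks."""
    # Reject labels the application doesn't know how to handle.
    if pred.label not in VALID_LABELS:
        return fallback
    # Reject scores outside the expected range (this also catches NaN).
    if not (0.0 <= pred.confidence <= 1.0):
        return fallback
    # Reject answers the model itself is unsure about.
    if pred.confidence < MIN_CONFIDENCE:
        return fallback
    return pred.label
```

The point of the sketch is that the surrounding application, not the model, decides what happens when a result is out of range or low in confidence.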

In some sense, there’s the garbage-in-garbage-out issue. However, it now applies not only to the data coming in and out, but also to the model that’s chosen as well as the training material involved. Though the model and training data may be static, these days the trend is also toward dynamic updates based on new input. This can be a challenge when dealing with stability and reliability issues.

Where Is AI Headed?

“Large” is relative when it comes to LLMs. Most are hosted in the cloud or on large servers, both to handle lots of requests and simply because of the compute requirements. Using the same techniques at the edge is possible, and it’s a growing trend. This isn’t being done on conventional processors, but rather on platforms equipped with hardware AI acceleration.

These days, the challenge is that acceleration hardware is often tuned to particular AI models such as CNN inferencing. This means not all platforms will support all models. Then again, not all applications need to utilize LLMs. Still, AI in some form tends to be more in the mix these days.

Online AI assistants might be all the rage and the direction of cloud-based services, but they would still be challenging to implement as standalone edge applications. Nonetheless, improved user interface support, including voice activation, is doable today and getting better over time. Touch interfaces have improved, too, and are ubiquitous in embedded applications. Voice and video interfaces are likely to follow a similar path as AI support ramps up and matching hardware support makes low-power operation feasible.

In many ways, AI is like hardware security support. Dedicated hardware security support was almost non-existent many years ago, but then advances like TPM and secure elements cropped up. Currently, every new application processor I know of is equipped with its own dedicated security processor, secure boot, and hardware encryption acceleration.

AI Is Everywhere...Almost

AI acceleration isn’t as ubiquitous yet, but it’s close. Every major processing chip has AI acceleration of some sort, and so does every flagship microcontroller. By the way, they all feature security enhancements, too.

The challenge with AI is that, unlike security systems, which incorporate standards with known algorithms and implementations, AI support can be either too general or too specific, with tradeoffs in performance, functionality, and power. The AI support for convolutional neural networks (CNNs) and LLMs is similar but not necessarily the same, and the large amounts of hardware required mean that utilizing it optimally will be important in terms of performance and power utilization.

Chip and tool vendors were finally getting conventional neural-network support into their hardware and tools, and then along came generative AI. Expect to see more support targeting LLMs in the embedded space. It’s also likely that we’ll see significant optimizations along the way, often in software, that will benefit existing hardware platforms. This has been true for existing AI models and platforms, where improvements have sometimes been an order of magnitude over time.

It’s always important to remember that AI isn’t a solution on its own, and not all platforms or services will need or benefit from AI support. Developers should not overlook basics like state machines or rule-based systems just because AI hardware is available or because of the overall “cachet” of AI. An FFT is still better at what it does, but don’t overlook new alternatives.
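As a reminder of how little a basic state machine needs, here is a minimal sketch of a two-state heater controller with hysteresis. The scenario, the setpoints, and the names `State`, `next_state`, and `run` are assumptions made up for illustration; nothing here comes from a specific product.

```python
from enum import Enum


class State(Enum):
    IDLE = "idle"
    HEATING = "heating"


# Hypothetical setpoints in degrees Celsius, chosen only for illustration.
TURN_ON_BELOW = 19.0
TURN_OFF_ABOVE = 21.0


def next_state(state: State, temperature_c: float) -> State:
    """Apply the transition rules for a single temperature reading."""
    if state is State.IDLE and temperature_c < TURN_ON_BELOW:
        return State.HEATING
    if state is State.HEATING and temperature_c > TURN_OFF_ABOVE:
        return State.IDLE
    # Between the two setpoints, keep the current state (hysteresis).
    return state


def run(readings, state=State.IDLE):
    """Feed a sequence of readings through the machine and record each state."""
    history = []
    for temperature_c in readings:
        state = next_state(state, temperature_c)
        history.append(state)
    return history
```

Every transition is spelled out in two rules, so the behavior is deterministic and easy to test exhaustively, which is the kind of property that is harder to guarantee with a trained model.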

I’ve seen AI models that handle 3D object detection and range determination using only a single video camera. Often, finding a model, training it, and incorporating it into an application is the easy part. The hard part is determining whether AI is a viable option, what approach to take, and what models may be applicable to the problem.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Master’s in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I also do a bit of PHP programming for Drupal websites and have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found in our Kit Close-Up video series. You can also see me in many of our TechXchange Talk videos. I am interested in a range of projects from robotics to artificial intelligence.