Edge AI Unlocks a Safer World with Smarter Video Cameras

This article is part of the TechXchanges: AI on the Edge and Machine Vision.


What you’ll learn:

- How the rise of smarter camera systems brings a need for greater automation.

- Why advanced AI for smart cameras requires a solution that doesn’t depend on the cloud.

Video cameras are now omnipresent in our surroundings, whether built into doorbells, mounted in elevators, or scattered throughout public spaces like airports, stadiums, and city streets. These cameras are also becoming increasingly intelligent, with capabilities that range from bolstering home security and public safety to monitoring and optimizing traffic flow.

The demand for such camera vision systems, which increasingly “understand” what they see, continues to grow. According to ABI Research, shipments will reach close to 200 million by 2027, generating $35 billion in sales.

As smarter camera systems ramp up, so too does the need for greater automation — the ability to monitor video streams and generate insights more quickly while making streaming and storage more efficient and cost-effective. This is where artificial intelligence (AI) steps in.

However, even AI-supported camera systems have their limits. Traditional AI models rely on cloud-based infrastructure and often suffer from latency and other network-related problems. As a result, they can’t deliver real-time insights and alerts, their dependence on network connectivity jeopardizes reliability, and sending video to the cloud raises data-privacy concerns.

Therefore, advanced AI for smart cameras requires a solution that operates independently of the cloud. What’s needed is AI at the network edge. And to truly unlock the potential of edge AI cameras — handling many disparate, essential video functions all on their own — they can’t just be capable of some AI processing. They need to be able to handle lots of AI processing.

Why Is Edge AI Essential for Smart Cameras?

Edge AI, in which AI processing takes place directly within cameras, makes it possible to offer real-time video analytics, insights, and alerts, thereby delivering a higher level of security. Furthermore, the implementation of AI at the edge allows for the streaming of metadata and analysis only, as opposed to transmitting entire video streams. This reduces the cost of transferring, processing, and storing video in the cloud. On top of that, edge AI can enhance privacy and reduce reliance on network connections by keeping data localized.
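To make the metadata-only idea concrete, here is a minimal sketch that compares the size of one uncompressed 1080p frame with a small JSON message describing what the camera detected in it. The message schema, field names, camera ID, and detection values are hypothetical, chosen only for illustration. Real cameras stream compressed video, so the gap in practice is smaller than this raw-frame comparison suggests.

```python
import json


def raw_frame_bytes(width: int, height: int, bytes_per_pixel: int = 3) -> int:
    """Size of one uncompressed frame (assumes 8-bit RGB by default)."""
    return width * height * bytes_per_pixel


def metadata_message(camera_id: str, timestamp_ms: int, detections: list) -> bytes:
    """Serialize detection results as compact JSON (hypothetical schema)."""
    payload = {"camera": camera_id, "ts_ms": timestamp_ms, "detections": detections}
    return json.dumps(payload, separators=(",", ":")).encode("utf-8")


# One illustrative detection: label, confidence score, box as [x, y, width, height].
detections = [{"label": "person", "score": 0.91, "box": [412, 220, 96, 240]}]
message = metadata_message("cam-01", 1700000000000, detections)

frame_size = raw_frame_bytes(1920, 1080)
print(f"raw 1080p frame: {frame_size} bytes")
print(f"metadata message: {len(message)} bytes")
```

In this sketch the camera would send only `message` upstream, and the full frame would stay on the device unless an event justified uploading it.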

Until now, most smart cameras have been constrained by limited computing power for handling AI processing. They’re also largely incapable of enhancing video on the fly, a crucial component for accurate analytics.

What distinguishes the next generation of smart camera systems is the integration of robust compute power and AI processing capacity directly in the cameras. This not only enables advanced video analytics, but also allows AI to be applied to video enhancement for higher-quality video. Because each of these functions demands its own share of AI capacity, today’s smart cameras must have enough AI compute to run both at the same time.

High-Quality Video Enhancements Boost Analytical Precision

Though AI is commonly associated with analytics, it can also be used in smart cameras to improve image quality and provide crisp, clear visuals. In public safety situations, the quality of the video image can be paramount in assessing potential risks.

AI is able to effectively manage a variety of image enhancement tasks, including mitigating noise in low-light conditions, performing high-dynamic-range (HDR) processing, and even addressing some aspects of the classic 3A (auto exposure, auto focus, and auto white balance).

Low-light conditions, for instance, can severely limit viewing distance and reduce image quality. The resulting video “noise” makes it hard to distinguish detail and inflates the size of the compressed video, because noise compresses poorly. That, in turn, makes transmitting and storing video data in the cloud less efficient.

While AI can remove noise and simultaneously preserve essential image details, it also demands significant processing. For example, eliminating noise from a 4K video image captured in low-light conditions would require approximately 100 giga (billion) operations per frame, which amounts to 3 tera (trillion) operations per second (TOPS) for real-time video streaming at 30 frames per second (Fig. 1).
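The arithmetic behind that figure is simply the per-frame cost multiplied by the frame rate. The short sketch below reproduces it using the article's numbers. The function and constant names are illustrative only.

```python
def required_ops_per_second(ops_per_frame: float, frames_per_second: float) -> float:
    """Sustained compute needed to process every frame of a live stream."""
    return ops_per_frame * frames_per_second


# Figures quoted in the text: about 100 billion operations per 4K frame, at 30 fps.
OPS_PER_FRAME_4K_DENOISE = 100e9
FRAMES_PER_SECOND = 30

tops = required_ops_per_second(OPS_PER_FRAME_4K_DENOISE, FRAMES_PER_SECOND) / 1e12
print(f"4K low-light denoising at 30 fps: {tops:.1f} TOPS")
```

The same function shows how the requirement scales. For example, doubling the frame rate to 60 fps doubles the sustained compute to 6 TOPS under the same per-frame cost.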

And as higher-resolution video streams gain prevalence within smart camera systems, there emerges the need to process larger volumes of data, to detect and identify more intricate and granular objects, and to perform more tasks via sophisticated AI processing pipelines.

Optimizing AI Processing to Maximize Smart-Camera Performance

When a smart camera possesses enough AI capacity, it can simultaneously run advanced video analytics alongside AI-powered video enhancement. It can even manage multiple AI processes on the same video stream, identifying smaller and more distant objects with higher accuracy or accelerating detection at higher resolutions.

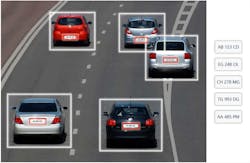

In traffic applications, a smart camera equipped with enough AI processing is able to perform multi-step automatic license-plate recognition. This requires object detection to identify every car on the road plus license-plate detection to find the license plate on every car, plus license-plate recognition to determine the characters in each license plate (Fig. 2).
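The three-stage cascade can be sketched as follows. This is a structural illustration only: the three model callables are stand-in stubs that return fixed values, not real detectors or any vendor's API, and the frame is a plain nested list rather than a real image. What it shows is how each stage runs on the cropped output of the previous one, so the work grows with the number of vehicles in view.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Box = Tuple[int, int, int, int]  # x, y, width, height in pixels


@dataclass
class PlateRead:
    vehicle_box: Box
    plate_box: Box  # expressed in full-frame coordinates
    text: str


def crop(image: list, box: Box) -> list:
    """Cut a rectangular region out of a row-major image."""
    x, y, w, h = box
    return [row[x:x + w] for row in image[y:y + h]]


def read_plates(frame: list,
                detect_vehicles: Callable[[list], List[Box]],
                detect_plate: Callable[[list], Optional[Box]],
                recognize_text: Callable[[list], str]) -> List[PlateRead]:
    """Stage 1: find vehicles. Stage 2: find a plate per vehicle. Stage 3: read it."""
    reads = []
    for vbox in detect_vehicles(frame):
        vehicle = crop(frame, vbox)
        pbox = detect_plate(vehicle)  # box relative to the vehicle crop
        if pbox is None:
            continue  # no visible plate on this vehicle
        text = recognize_text(crop(vehicle, pbox))
        frame_box = (vbox[0] + pbox[0], vbox[1] + pbox[1], pbox[2], pbox[3])
        reads.append(PlateRead(vbox, frame_box, text))
    return reads


# Hypothetical stubs standing in for the three neural networks.
def fake_vehicle_detector(frame: list) -> List[Box]:
    return [(4, 8, 30, 20), (36, 10, 24, 18)]


def fake_plate_detector(vehicle: list) -> Optional[Box]:
    return (10, 12, 8, 4) if len(vehicle[0]) >= 30 else None


def fake_text_recognizer(plate: list) -> str:
    return "ABC123"


frame = [[0] * 64 for _ in range(48)]  # blank 64x48 placeholder image
reads = read_plates(frame, fake_vehicle_detector, fake_plate_detector, fake_text_recognizer)
for r in reads:
    print(r)
```

Passing the models in as callables keeps the control flow separate from whichever networks a given camera actually runs.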

However, to achieve accurate analytics on high-quality video imagery, smart cameras themselves must have enough AI power to seamlessly handle both video enhancement and analytic tasks in parallel. For instance, to accurately capture a license-plate number within a video stream, the camera’s vision processor needs to employ semantic awareness (understanding what it sees) to selectively clean up and sharpen the parts of the video that contain relevant visual information. In such scenarios, processing demands can accumulate quickly.

For fundamental AI vision tasks like noise reduction, a 2-Mpixel (1080p) camera might require around 0.5 TOPS of processing power. Then, for basic video-analytics pipelines, such as object detection, it would need an additional 1 TOPS. Add to that advanced video-enhancement features, such as HDR or digital zoom, and another 1 TOPS could be required. If you’d like to add facial recognition, count on another 2 TOPS.

When combined, these processing capabilities, which are becoming increasingly standard in the smart-camera systems market, require a minimum of 4.5 TOPS (0.5 + 1 + 1 + 2). Thus, to enable edge AI and the type of real-time processing that makes people safer, smart cameras must include AI vision processors that deliver at least that much compute.

A new generation of camera-attached vision processors is designed to answer the growing need for high AI capacity within the camera. One example is the Hailo-15 series of AI vision processors, recently announced by Hailo, a leading supplier of edge AI processors. This range of vision processors offers up to 20 TOPS of AI compute power, which allows advanced AI analytics and video enhancement to run side by side (Fig. 3).
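The compute budget described above can be tallied in a few lines. The sketch below uses the per-task estimates quoted in the article for a 1080p camera and compares the total with the 20 TOPS upper figure cited for the Hailo-15 series. Treating the workloads as simply additive, and treating a peak TOPS rating as fully usable, are simplifying assumptions. Sustained throughput on real hardware depends on the models and on how well they use the processor.

```python
# Per-task estimates quoted in the text for a 2-Mpixel (1080p) camera, in TOPS.
WORKLOAD_TOPS = {
    "noise reduction": 0.5,
    "object detection": 1.0,
    "HDR or digital zoom": 1.0,
    "facial recognition": 2.0,
}


def total_tops(workloads: dict) -> float:
    """Sum the estimates, assuming the tasks run concurrently and add linearly."""
    return sum(workloads.values())


def headroom_tops(capacity_tops: float, workloads: dict) -> float:
    """Compute left over once every listed workload is running."""
    return capacity_tops - total_tops(workloads)


required = total_tops(WORKLOAD_TOPS)
spare = headroom_tops(20.0, WORKLOAD_TOPS)  # 20 TOPS: upper figure quoted for the series

print(f"required: {required} TOPS")
print(f"headroom on a 20-TOPS processor: {spare} TOPS")
```

A negative result from `headroom_tops` would indicate that the chosen feature set exceeds what the processor can run in parallel.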

The Video + AI Revolution

Ultimately, the integration of AI processors, such as the Hailo-15 series, into smart cameras is poised to revolutionize numerous industries, including security, industrial automation, and retail. This shift in the way we capture, process, and interpret visual information requires extending the AI revolution to the network’s edge, where it can do the most good, and do it quickly. Individually, AI and video have already had a profound impact, but together, they have the potential to transform everyday life for the better.


About the Author

Avi Baum

Chief Technology Officer and Co-Founder, Hailo

Avi Baum is Chief Technology Officer and Co-Founder of Hailo, an AI-focused, Israel-based chipmaker that has developed a specialized AI processor for enabling data-center-class performance on edge devices. Baum has over 17 years of experience in system engineering, signal processing, algorithms, and telecommunications, focusing on wireless communication technologies for the past 10 years.