Creating a Smarter World with Embedded AI

AI is everywhere. News feeds are full of stories about the wonderful (and horrible) things that chatbots and generative AI are doing. NVIDIA stock is soaring on demand for AI processing in the data center.

However, more computing takes place outside the data center than inside it, and AI won't be confined there. The myriad smart devices that surround us are getting much smarter: AI will make them more capable, easier to use, safer, and more secure.

Dealing with Advanced AI Algorithms

This presents a challenge for embedded-systems designers. AI algorithms, for both training and inferencing, are computationally demanding, and their computational load grows as they advance. For example, state-of-the-art object-recognition algorithms perform more than 100 times as many multiply/accumulate (MAC) operations as algorithms from just five years ago. AI algorithm complexity is increasing much faster than processor and silicon capabilities.

How can this type of algorithm be added to devices that typically have constrained power and compute budgets? The answers, and there are many, are as varied as the embedded systems themselves.

Some systems can send inference computations off to a data center. Usually, the AI inputs, often sensor data, are relatively small, as are the outputs from the AI computation. Thus, the communication overhead is small. If a device has a reliable communication channel with a data center and doesn’t have hard real-time requirements, then offloading the work to remote computational resources can be an ideal strategy.

Other systems may not be able to send the computations out. Some systems, such as those incorporating autonomous mobility like a self-driving car or a self-piloted drone, have hard real-time requirements that can’t be met by remote computations. Other systems may have privacy or security requirements that preclude sending data off the device. These systems must perform the computations using local compute resources.

>>Check out the Generating AI and AI on the Edge TechXchanges for similar articles and videos.

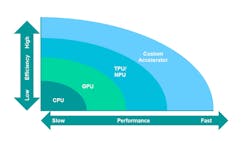

Some systems need responses that are neither real-time nor "real-fast." These systems can run inferences on a (relatively) slow onboard processor. Many embedded processors have added features that support AI algorithms, such as vectorized multiply/accumulate instructions. However, because the programming model is serial and parallelism is limited, the speed at which a processor can perform AI computations is limited (Fig. 1).

Systems that require more performance or efficiency than a processor can deliver can use GPUs to accelerate computations. GPUs process arrays in parallel, improving both performance and efficiency over a CPU.

Neural processing units (NPUs, also known as tensor processing units or TPUs) process arrays in parallel like a GPU, but they're more highly specialized for AI workloads. GPUs and NPUs tend to be physically large and power-hungry, as they're often focused on performance. However, diminutive embedded or edge NPUs that target efficiency are available and are better suited to constrained edge devices.

The Next Level of AI Accelerator

Certain systems need to deliver performance or efficiency beyond what’s achievable with the best available AI accelerators. Then there are those systems with extreme efficiency requirements; they could be running off a watch battery or even energy harvested from their environment. Other systems may have performance requirements that exceed what’s practical to obtain with off-the-shelf accelerators. For example, some autonomous mobility systems have real-time requirements measured in single-digit milliseconds.

These systems will require customized hardware accelerators, implemented in ASIC or FPGA logic. It may seem daunting to contemplate creating an accelerator that significantly outperforms the highly optimized implementations from companies like Google and NVIDIA, which have large, well-equipped, and experienced development teams.

However, general-purpose AI accelerators need to appeal to as large a market as possible. They need to garner as many design wins as possible. Therefore, these accelerators need to handle any neural network that exists today and any network that might be created over the next few years. They must be very generalized, which significantly limits their performance and efficiency.

Customization vs. Programmability

One challenge that engineers must contend with is balancing the level of customization against the programmability and generality of their design. A highly customized implementation can deliver the highest performance and efficiency. By including only those capabilities needed by a specific neural network and sizing the features to match that exact algorithm, significant amounts of hardware can be pruned from the implementation.

However, that introduces the risk of being unable to adapt to changing requirements or new applications. For devices needing the highest levels of performance and efficiency, though, this kind of customization may be essential. The remainder of this article focuses on these types of designs.

Boosting Performance Doesn’t Necessarily Mean Increased Power Consumption

Performance and power are often thought of as opposing goals: most design decisions that improve performance increase power consumption, and most mechanisms that reduce power correspondingly reduce performance. But that's not always the case.

AI algorithms are developed in machine-learning frameworks like TensorFlow and Caffe, typically in Python. These frameworks use a 32-bit floating-point representation by default. However, most values employed in AI algorithms, the features and the weights, are often normalized to be concentrated between -1.0 and 1.0. This leaves a huge part of the 32-bit floating-point range, which extends to roughly ±3.4 × 10^38, largely unused.

Google developed a 16-bit floating-point format called brain floating point, or bfloat16 for short (or BF16, for even shorter), specifically targeting smaller, faster AI hardware. BF16 keeps the 8-bit exponent of 32-bit floating point, and therefore its dynamic range, but cuts the mantissa to 7 bits. Fixed-point representations can also be used; they allow integer operators that are smaller and faster than floating-point operators.

"Posits" is another numeric representation targeted at small AI systems developed about five years ago by Gustafson and Yonemoto. Posits concentrate the representation of numbers near 0, while larger numbers are represented with less accuracy, taking up less space and using less energy. This representation works particularly well with AI algorithms.

Changing the numeric representation will change the math and, therefore, the accuracy of the inferencing and training. Before committing this to hardware, those mathematical changes need to be understood and verified.

A smaller, more efficient representation still needs to meet the application's accuracy requirements. Verifying the accuracy in the context of a machine-learning framework will not work, as the operations will not have bit-for-bit fidelity with the hardware to be implemented.

Doing the verification in a hardware description language is impractical, as a nearly complete HDL implementation must be in place before the verification can begin. Furthermore, software HDL simulators run too slowly to make algorithm-level verification feasible. FPGA prototypes or emulation might deliver the performance needed, but they require porting the algorithm's implementation to an FPGA. Given that finding an optimal quantization takes multiple iterations, HDLs are poorly suited to this exploration.

One approach is to use bit-accurate data types and operators defined in a high-level language. The Algorithmic C ("AC") data types defined for high-level synthesis meet this need. These C++ classes define both data types and math operators that have the full fidelity of an HDL, including truncation/rounding, overflow/underflow, and saturation effects. Such data types work in algorithmic C++ descriptions that are much more abstract than HDL code and run thousands of times faster. With these data types, it’s possible to evaluate the impact of different numeric representations on neural-network performance and accuracy.

Optimizing PPA with Smaller Multipliers

The reason it’s so important to properly quantize the hardware implementation of the accelerator is that it has a huge impact on power, performance, and area (PPA). Floating-point multipliers are typically about twice as large as an equivalently sized integer multiplier. The area and power consumed by a multiplier are roughly proportional to the square of the operand width.

If a 32-bit floating-point representation is replaced by a 10-bit fixed-point representation, the multipliers will be about 95% smaller. The design could simply be smaller and more energy-efficient, or 20 multipliers could be put in place of the one floating-point multiplier, and the calculations would take place 20X faster on roughly the same silicon area and power.

Beyond the silicon area savings, smaller multipliers have a shorter propagation delay. This can enable a faster clock rate for the design, improving performance. In addition, the data needs to be moved from memory to the processing elements. If the numeric representation of the data has fewer bits, then it will take proportionally fewer bus cycles to move the data and proportionally less memory to hold the weights. Because weight databases are large, storing and moving those weights has a very large impact on performance and power.

After quantizing the network with an optimal numeric representation, an RTL implementation is needed. It’s used to create a more accurate measure of performance, as well as feed downstream flows that will provide area and power metrics. And, of course, in an ASIC or FPGA flow, the RTL is employed for implementation.

When realizing the accelerator in RTL, many design decisions must be made that impact performance and efficiency. These decisions include sizing local memories, defining data paths, and implementing parallelism. Because the implementation will be highly tailored to the specific network being supported with challenging performance or energy targets, it’s essential that multiple architectures be considered.

Leveraging High-Level Synthesis for RTL Implementations

With a starting point of a C++ algorithm that uses bit-accurate AC data types, high-level synthesis (HLS) can be used to create the RTL implementation. Rather than manually creating multiple architectures of the accelerator, HLS can quickly synthesize a variety of design alternatives. HLS tools will provide estimates for power, performance, and area.

Though HLS creates the RTL, the key design decisions remain in the hands of the hardware designer. Certain aspects of the accelerator can either be left programmable to future-proof the design or fixed to optimize the implementation. Programmability and generalization are very desirable design characteristics, but they have very real costs in power and energy consumption. With an HLS flow, designers can make informed decisions about alternative architectures, with clear insight into the differences and their impact.

HLS is an established technology that can be applied to create a bespoke AI accelerator from C++ or SystemC descriptions. Further automation is available to bridge the gap between Python and C++.

HLS4ML is an open-source project that converts neural networks described in Python machine-learning frameworks into C++ suitable for synthesis through HLS. It has features for exploring quantization, parallelization, and resource sharing in the implementation. It was developed by engineers at Fermilab to implement a customized FPGA AI accelerator that’s used to identify subatomic particles created by reactions in their particle accelerator.

Fermilab’s engineers need to identify specific particles generated in high-energy particle collisions in real time. They have a fraction of a millisecond to determine whether the desired reaction occurred and then save gigabytes of sensor data. This performance requirement is well beyond the capabilities of even the fastest commercially available AI accelerators.

Tailored Accelerators Help Reach Required Performance Levels

AI is becoming ubiquitous in the data center and beyond. For many applications, AI frameworks running on general-purpose embedded processors will work fine. For others, a GPU or TPU is needed.

Some systems, due to technical requirements or competitive pressures, will need higher levels of performance or efficiency, or some combination of the two. They can achieve this by implementing a customized hardware accelerator. A tailored accelerator can exceed the performance and efficiency of leading TPU or NPU accelerator devices or IP by an order of magnitude or more. The need to quantize the AI algorithm and explore architectural alternatives makes HLS an ideal approach to deploying AI in the most demanding applications.


About the Author

Russell Klein

Program Director, High-Level Synthesis Division, Siemens EDA

Russell Klein is a program director at Siemens EDA's High-Level Synthesis Division focused on processor platforms. He holds a number of patents for EDA tools in the area of SoC design and verification. Klein has more than 25 years of experience developing design and debug solutions, which span the boundary between hardware and software. He’s held various engineering and management positions at several EDA companies.