Bigger Might Not Be Better When It Comes to AI on the Edge

What you’ll learn:

- Why it’s important not to over-engineer.

- How suitable hardware, IDEs, development tools and kits, frameworks, datasets, and open-source models let engineers develop ML/AI-enabled, edge-processing products with relative ease.

Machine learning (ML) and artificial intelligence (AI, of which ML can be considered a subset) have historically been implemented on high-performance computing platforms and, more recently, in the cloud. Now, however, both are increasingly being deployed in applications where processing is performed near the data source.

Ideal for IoT devices, such edge processing means less data needs to be sent to the cloud for analysis. Benefits include improved performance, thanks to reduced latency, and better security.

Machine Learning on the Edge

ML/AI takes edge processing to the next level by making at-source inference possible. It enables an IoT device, for example, to learn and improve from experience. Algorithms analyze data to look for patterns and make informed decisions, and there are three ways in which the machine (or AI) can learn: supervised learning, unsupervised learning, and reinforcement learning.

Supervised learning sees the machine using labeled data for training. For instance, a smart security camera might be trained using photos and video clips of people standing, walking, running, or carrying boxes. Supervised ML algorithms include Logistic Regression and Naive Bayes classifiers, with feedback required to keep refining the model(s) on which predictions will be made.

Unsupervised learning uses unlabeled data and algorithms such as k-means clustering and principal component analysis (PCA) to identify patterns. This makes it well-suited to anomaly detection. For instance, in a predictive-maintenance scenario or medical imaging application, the machine would flag situations or aspects of the image that are unusual based on the model of “usual” built and maintained by the machine.
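To make the idea of a learned model of “usual” concrete, here is a minimal sketch of k-means clustering applied to scalar sensor readings. The vibration values, the threshold, and the function names are illustrative assumptions rather than anything taken from a specific product, and the simple evenly spaced initialization assumes k is at least 2.

```python
def kmeans_1d(values, k=2, iterations=20):
    """Cluster scalar readings with plain k-means (Lloyd's algorithm)."""
    # Deterministic start: spread the initial centroids across the data range.
    lo, hi = min(values), max(values)
    centroids = [lo + (hi - lo) * i / (k - 1) for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Move each centroid to the mean of its members (unchanged if empty).
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids


def is_anomaly(reading, centroids, threshold):
    """Flag a reading that lies far from every learned centroid."""
    return min(abs(reading - c) for c in centroids) > threshold


# Hypothetical unlabeled vibration amplitudes: an idle and a running state.
normal = [0.9, 1.0, 1.1, 1.0, 4.9, 5.0, 5.1, 5.0]
centroids = kmeans_1d(normal, k=2)

print(is_anomaly(5.2, centroids, threshold=0.5))  # close to the running state
print(is_anomaly(8.0, centroids, threshold=0.5))  # far from both states
```

No labels are supplied at any point. The two operating states emerge from the data, and a reading is treated as unusual only because it sits far from both of them.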

Reinforcement learning is a “trial and error” process. As with supervised learning, feedback is required but, rather than simply correcting the machine, the feedback is treated as either a reward or a penalty. Algorithms include Monte Carlo methods and Q-learning.
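The reward-or-penalty feedback can be illustrated with the standard tabular Q-learning update, Q(s, a) ← Q(s, a) + α[r + γ·max Q(s′, a′) − Q(s, a)]. The sketch below assumes a hypothetical two-state, two-action problem. The state names, action names, learning rate, and discount factor are arbitrary choices made for illustration.

```python
def q_update(q, state, action, reward, next_state, alpha=0.5, gamma=0.9):
    """Apply one tabular Q-learning update in place and return the new value."""
    best_next = max(q[next_state].values())   # value of the best follow-up action
    td_target = reward + gamma * best_next    # what the estimate should move toward
    q[state][action] += alpha * (td_target - q[state][action])
    return q[state][action]


# Hypothetical Q-table, initialized to zero for every state/action pair.
q = {s: {"left": 0.0, "right": 0.0} for s in ("A", "B")}

q_update(q, "A", "right", reward=1.0, next_state="B")   # feedback is a reward
q_update(q, "A", "left", reward=-1.0, next_state="A")   # feedback is a penalty

print(q["A"])
```

After these two steps the table favors "right" over "left" in state A, which is how rewards and penalties gradually shape the behavior the machine prefers.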

A common element in the above examples is embedded vision, which is made “smart” by adding ML/AI. Many other applications can also benefit from vision-based, at-source inference. Moreover, smart embedded vision can utilize parts of the spectrum not visible to the human eye, such as infrared (used for thermal imaging) and UV.

Provide an ML/AI edge system with other data, such as temperature readings and vibration levels from transducers, and industrial IoT devices can not only play a significant role in an organization’s predictive-maintenance strategy, but they’re also able to provide early warnings of unexpected failure and thus help protect machinery, product, and personnel.

Embedded Systems and Machine Learning

As mentioned in our opening gambit, ML/AI initially required considerable computing resources. Today, depending on the complexity of the machine, components more associated with embedded systems (such as IoT devices) can be used to implement ML and AI.

For example, it’s possible to implement image detection and classification in field-programmable gate arrays (FPGAs) and on microprocessor units (MPUs). Moreover, relatively simple applications, such as vibration monitoring and analysis (for predictive maintenance, for instance), can be implemented in an 8-bit microcontroller unit (MCU).

Also, whereas ML/AI initially required highly qualified data scientists to develop algorithms for pattern recognition—and models that could be automatically updated to make predictions—this is no longer the case. Embedded-systems engineers, already familiar with edge processing, now have the hardware, software, tools, and methodologies at their disposal to design ML/AI-enabled products.

In addition, many models and data for machine training are freely available and a host of IC vendors offer integrated development environments (IDEs) and development suites to fast-track ML/AI application creation.

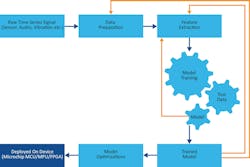

Take, for example, Microchip’s MPLAB X IDE. This software program incorporates tools to help engineers discover, configure, develop, debug, and qualify embedded designs for many of the company’s devices. A machine-learning development suite plug-in enables ML models to be flashed directly to target hardware. This suite uses so-called automated machine learning (AutoML), which is the process of automating many time-consuming and iterative tasks, such as model development and training (Fig. 1).

Though these iterative steps can be automated, design optimization is another matter. Even engineers with experience of designing edge-processing applications may struggle with some aspects of ML/AI. Many tradeoffs will need to be made between system performance (largely driven by model size/complexity and the volume of data), power consumption, and cost.

Regarding the last two, Figure 2 illustrates the inverse relationship between (required) performance and cost, and it indicates power consumption for Microchip device types used in typical ML inference applications.

Designing Machine Learning for Small Form Factors

As mentioned, even 8-bit MCUs can be used for some ML applications. One factor making this possible and doing much to bring ML/AI into the engineering community is the popularity of tinyML, which allows models to run on resource-limited micros.

We can see the benefits of this by considering that a high-end MCU or MPU for ML/AI applications typically runs at 1 to 4 GHz, needs between 512 MB and 64 GB of RAM, and uses between 64 GB and 4 TB of non-volatile memory (NVM) for storage. It also consumes between 30 and 100 W of power.

On the other hand, tinyML targets MCUs that run at between 1 and 400 MHz, have between 2 and 512 kB of RAM, and use between 32 kB and 2 MB of NVM for storage. Power consumption is typically between 150 µW and 23.5 mW, which is ideal for applications that are battery-powered, rely heavily on harvested energy, or are energized through induction.

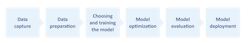

Keys to implementing tinyML lie in data capture and preparation in addition to model generation and enhancement. Of these, data capture and preparation are essential if meaningful data (a dataset) is to be made available throughout the ML process flow (Fig. 3).

For training purposes, datasets are needed for supervised (and semi-supervised) machines. Here, a dataset is a collection of data that’s already arranged in some kind of order. As mentioned, for supervised systems, the data is labeled. Thus, for our smart security camera example, the machine would be trained using photos of people standing, walking, and running (amongst other things). The dataset could be handcrafted, though many ready-made datasets are available. For example, MPII Human Pose includes around 25,000 images extracted from online videos.

However, the dataset must be optimized for use. Too much data will quickly fill memory. With too little, the machine will either fail to make predictions or make erroneous or misleading ones.

In addition, the ML/AI model needs to be small. In this respect, one popular compression method is “weight pruning,” in which the weights of the connections between some neurons within the model are set to zero. This means the machine needn’t include them when making inferences from the model. Entire neurons can also be pruned.
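As a rough illustration of weight pruning, the sketch below zeroes the smallest-magnitude weights in a flat list. Choosing weights by magnitude is an assumption made here because it is a common criterion; the text above does not say which connections are selected. The function name, example weights, and sparsity level are likewise hypothetical.

```python
def prune_by_magnitude(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction zeroed."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute weight.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


# Hypothetical connection weights for one layer.
weights = [0.8, -0.05, 0.3, 0.01, -0.6, 0.02]
pruned = prune_by_magnitude(weights, sparsity=0.5)

print(pruned)  # [0.8, 0.0, 0.3, 0.0, -0.6, 0.0]
```

The zeroed entries can be skipped at inference time or stored in a sparse format, which is where the memory and compute savings come from.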

Another compression technique is quantization. It reduces the precision of a model’s weights, biases, and activations by converting data from a high-precision format, such as 32-bit floating point (FP32), to a lower-precision format, such as an 8-bit integer (INT8).
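The FP32-to-INT8 conversion can be sketched as follows. This assumes symmetric, per-tensor quantization with a single scale factor, which is only one of several schemes in use. The function names and weight values are illustrative.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to signed 8-bit integers."""
    max_abs = max(abs(v) for v in values)
    # One scale maps the largest magnitude onto 127; guard against all zeros.
    scale = max_abs / 127 if max_abs else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the INT8 codes."""
    return [q * scale for q in quantized]


# Hypothetical FP32 weights.
weights = [0.5, -1.27, 0.0, 1.0]
codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)

print(codes)     # [50, -127, 0, 100]
print(restored)  # close to the original values, within half a step
```

Each value now occupies one byte instead of four, so the stored parameters shrink to roughly a quarter of their FP32 size at the cost of a rounding error of at most half a quantization step.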

With an optimized dataset and compact model, a suitable MCU can be targeted. In this respect, frameworks exist to make this easier. For instance, the TensorFlow flow starts with picking a new TensorFlow model or retraining an existing one. The model can then be converted to a compact FlatBuffer using the TensorFlow Lite Converter, optionally quantized during conversion, and loaded onto the target.

Developing ML/AI-Enabled Edge-Processing Products

ML and AI use algorithmic methods to identify patterns and trends in data and make predictions. By placing the ML/AI at the data source (the edge), applications can make inferences and take actions in the field and in real time, which leads to a higher-efficiency system (in terms of performance and power) with greater security.

Thanks to the availability of suitable hardware, IDEs, development tools and kits, frameworks, datasets, and open-source models, engineers can develop ML/AI-enabled, edge-processing products with relative ease.

These are exciting times for embedded systems engineers and the industry as a whole. However, it’s important not to over-engineer. Much time and money can be spent developing applications that target chips with more resources than necessary, and which therefore consume more power and are more expensive.