There’s huge interest in implementing neural-network inference at “the edge,” meaning anything outside of data centers, in all sorts of devices from cars to cameras. However, so far, very little actual deployment has taken place and system designers face a steep learning curve. As a result, several key misconceptions surround what makes a good inferencing engine and, most importantly, how to measure candidates to determine which one will perform best. This article will dispel those misconceptions and explain how engineers can accurately compare one solution with another.

Most AI processing now is in data centers on Xeons, FPGAs, and GPUs, none of which were designed to optimize neural-network throughput. Neural-network development, or training, will continue to take place on floating-point-intensive hardware.

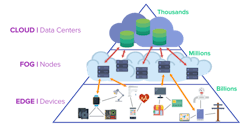

AI implementation is shifting to outside the data center and into edge devices.

Inferencing is the execution of a neural model that has been trained. It’s done with integer arithmetic, after quantizing the floating-point model, to get more performance out of lower-cost and lower-power inference accelerator chips.

Misconception #1: TOPS is a good metric for comparing inference accelerators.

Dozens of companies are promoting inference accelerator chips and IP, and almost all of them mention their TOPS and TOPS/watt (TOPS = trillions of operations per second). Unfortunately, TOPS has become a marketing metric where the definition of an Operation isn’t clear, peak performance is used, and operating conditions aren’t given.

One definition of an Operation is that it’s either one addition or one multiplication, of unspecified size. Multiply-accumulate (MAC) is the repetitive operation that makes up the vast bulk of the arithmetic in an inference engine. However, some suppliers count other operations as well so as to boost their TOPS.

TOPS is a peak number. It’s the number of operations if the necessary data has been loaded in time into the MAC unit. However, most architectures are unable to get activation and weight data delivered in time to avoid stalls. What you really want to know is the actual percent utilization of the hardware to do useful work.
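The gap between a peak number and useful work can be put into a short calculation. The Python sketch below is only an illustration: the function name and the example figures (a hypothetical accelerator advertised at 10 TOPS that is measured at 400 images/second on a model needing 7 billion operations per image) are assumptions, not data from any vendor.

```python
def utilization(images_per_second: float, ops_per_image: float, peak_tops: float) -> float:
    """Fraction of the advertised peak that is spent on useful work.

    peak_tops is the vendor's peak figure in trillions of operations/second.
    ops_per_image must count operations the same way the vendor does.
    """
    useful_ops_per_second = images_per_second * ops_per_image
    return useful_ops_per_second / (peak_tops * 1e12)


# Hypothetical figures, for illustration only.
u = utilization(images_per_second=400, ops_per_image=7e9, peak_tops=10)
print(f"Utilization: {u:.0%}")
```

With these made-up inputs, the hardware spends roughly 28% of its advertised peak on useful work. The result is only meaningful if the operation count for the model and the vendor's TOPS figure use the same definition of an operation.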

Also, TOPS doesn’t mention batch size. However, for many architectures, the throughput at Batch = 1 (which is what matters outside of the data center) is well under half of the throughput at Batch = 10 or 28.

As with any semiconductor, performance and power vary significantly from minimum to maximum voltage, from slow to fast silicon, and from lowest to highest temperature. TOPS is typically given for optimal conditions, not worst case.

The right answer is to ask inferencing engine suppliers to give you throughput on their architecture for a specific model (e.g., YOLOv3), a specific image size, a specific batch size, and specific process/voltage/temperature conditions. This gives you the data you really need to know for your application.

Misconception #2: Existing architectures will make the best inference accelerators.

For decades, computing has been dominated by the Von Neumann architecture: A central processing unit (or now multiple ones) is fed by a single memory system that has evolved into multiple layers of on-chip caches and one or more layers of external memory, typically DRAM.

In traditional workloads, the locality of code and data means most memory references are served from on-chip SRAM cache at low latency and low power compared to DRAM. However, the workload for neural-network inference is very different.

Caches are of no use with the bulk of the memory references, which are the weights used in the successive matrix multiplies. For example, YOLOv3, which does object detection and recognition, has over 100 stages or layers and uses 62 million weights.

To process one image requires bringing in the 62 million weights in sequence, layer by layer, and then starting over again for the next image to be processed. Either all weights are stored on chip, or all weights need to be continuously reloaded from DRAM.

As a result, many early inference accelerators require very wide DRAM buses with hundreds of gigabytes/second of bandwidth. However, DRAM memory references are very high power compared to SRAM. The best inference architecture will likely figure out how to reduce DRAM bandwidth and use more on-chip SRAM bandwidth.
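A rough sense of the weight traffic involved can be had from a few lines of Python. This is a sketch under stated assumptions: it takes 1 byte per weight (INT8 quantization, which the article does not specify), a hypothetical frame rate, and the simplification that every weight is fetched from DRAM exactly once per batch. It counts weight traffic only, so it is a lower bound; intermediate activations that do not fit on chip add to it.

```python
def weight_traffic_gb_per_s(
    num_weights: int,
    bytes_per_weight: float,
    images_per_second: float,
    batch_size: int = 1,
) -> float:
    """DRAM traffic in GB/s from reloading every weight once per batch."""
    batches_per_second = images_per_second / batch_size
    return num_weights * bytes_per_weight * batches_per_second / 1e9


YOLOV3_WEIGHTS = 62_000_000  # figure quoted in the article
BYTES_PER_WEIGHT = 1         # assumption: INT8 weights
FRAME_RATE = 30              # assumption: hypothetical images/second

for batch in (1, 10):
    gb_s = weight_traffic_gb_per_s(YOLOV3_WEIGHTS, BYTES_PER_WEIGHT, FRAME_RATE, batch)
    print(f"batch={batch}: {gb_s:.2f} GB/s of weight traffic")
```

Under this simplified model, weight traffic scales with the number of batches per second, which is one reason large batches look attractive on architectures that keep weights in DRAM, and why Batch = 1 is the harder case.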

Misconception #3: ResNet-50 is a good benchmark for comparing inference accelerators.

Few companies give any benchmark information for their inference architectures, and when they do, it usually is ResNet-50. ResNet-50 is an image classification model that classifies images of 224 × 224 pixels. However, if you talk to anyone designing neural-inferencing engines, they will tell you they have no plans to use ResNet-50 in their applications.

A major problem with ResNet-50 is that its throughput is almost always given with no mention of batch size. Hint: When a vendor doesn’t give batch size, you can assume they’re using a large batch size to get the highest throughput. If you need batch = 1, a large-batch result won’t tell you what performance to expect in your application.

It’s also important to remember that 224 × 224-pixel images are tiny. They have only 1/40th the number of pixels of the 2-Mpixel images that most customers plan to use.

Small images mean that the intermediate activations will be small, so the memory system of the inferencing architecture will not be stressed by this benchmark. YOLOv3 has intermediate activations as much as 50X larger than those of ResNet-50. ResNet-50 requires only 7 billion operations per image (1 MAC = 2 Ops), whereas YOLOv3 requires more than 100X as many.
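The image-size and operation-count figures above are easy to check. The short Python sketch below uses only numbers quoted in the article (224 × 224 pixels, 2 Mpixels, 7 billion operations, 1 MAC = 2 Ops); the function names are made up for illustration.

```python
def pixel_ratio(large_pixels: int, width: int, height: int) -> float:
    """How many times more pixels the large image has than a width x height image."""
    return large_pixels / (width * height)


def macs_from_ops(ops: float) -> float:
    """Convert an operation count to MACs, using the article's 1 MAC = 2 Ops."""
    return ops / 2


ratio = pixel_ratio(large_pixels=2_000_000, width=224, height=224)
print(f"A 2-Mpixel image has about {ratio:.1f}x the pixels of a 224 x 224 image")

resnet50_macs = macs_from_ops(7e9)
print(f"ResNet-50: {resnet50_macs / 1e9:.1f} billion MACs per image")
```

A 224 × 224 image has 50,176 pixels, so the ratio comes out just under 40, and 7 billion operations correspond to 3.5 billion MACs.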

If your application is processing large images and needs to classify all of the objects in the image, you need to find a more demanding benchmark for your vendors to provide to you.

Misconception #4: One architecture will dominate all inference applications.

Inference architectures will differentiate over time on two dimensions. One dimension will be batch size: small (4 or less) for edge applications and large (10, 28, or more) for data-center applications.

The other dimension will be performance. Data-center inference accelerators will tend to have 100+ TOPS peak performance with more than 100K MACs running at 1 GHz+. Edge servers will be 10+ TOPS peak performance, edge devices will be 1+ TOPS peak performance, and always-on applications will be <<1 TOPS. There are two reasons for this: Higher-end systems have far more cooling capacity than low-end systems, and budgets are smaller in low-end systems.
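The link between MAC count, clock rate, and peak TOPS is simple arithmetic. The sketch below assumes the 1 MAC = 2 Ops convention used earlier and that every MAC unit completes one multiply-accumulate per clock cycle; both are assumptions about how a given vendor counts, and the function name is hypothetical.

```python
def peak_tops(num_macs: int, clock_hz: float, ops_per_mac: int = 2) -> float:
    """Peak trillions of operations/second if every MAC is busy every cycle."""
    return num_macs * ops_per_mac * clock_hz / 1e12


# The data-center example from the text: 100K MACs at 1 GHz.
print(f"100K MACs at 1 GHz: {peak_tops(100_000, 1e9):.0f} TOPS peak")

# An edge-device-sized example: 500 MACs at 1 GHz.
print(f"500 MACs at 1 GHz: {peak_tops(500, 1e9):.0f} TOPS peak")
```

Under these assumptions, 100K MACs at 1 GHz gives 200 TOPS peak, consistent with the 100+ TOPS class, while about 500 MACs at the same clock is enough to reach 1 TOPS peak. As discussed under Misconception #1, these are peak figures and say nothing about utilization.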

Conclusion

Like the arrival of many new technologies in the past, AI and neural inferencing will follow a learning curve until the industry really understands how to properly measure them. That means most vendors will continue throwing out meaningless numbers that sound good but aren’t relevant.

If you find yourself looking at neural-inferencing engines in the near term, just remember that what really matters is the throughput an inferencing engine can deliver for a specific model, image size, batch size, and PVT (process/voltage/temperature) conditions. This is the number one measurement of how well it will perform for your application. Nothing else matters.

Geoff Tate is CEO and Co-Founder of Flex Logix Technologies Inc.