If you look at the current literature and today’s products, the most troublesome characteristic to measure seems to be jitter. The formal definition of jitter, given in the Sonet specifications, is the “short-term variation of a digital signal’s significant instants from their ideal positions in time.”
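The definition above can be made concrete as a time interval error (TIE): each edge's measured time minus its ideal time. The following is a minimal Python sketch under assumed conditions. The function name `time_interval_error` is hypothetical. The ideal positions are taken to be a perfect clock starting at `t0_ps` with period `period_ps`. The edge timestamps are made up for a 1010 pattern at 2.5 Gbits/s, where an edge falls on every 400-ps bit boundary.

```python
def time_interval_error(edges_ps, period_ps, t0_ps=0.0):
    """Return each edge's offset from its ideal position, in picoseconds.

    Assumption: the ideal positions form a perfect clock, t0_ps + n * period_ps.
    """
    return [t - (t0_ps + n * period_ps) for n, t in enumerate(edges_ps)]


# Hypothetical edge timestamps (ps) for a 1010 pattern at 2.5 Gbits/s.
edges = [0.0, 403.0, 797.0, 1201.0, 1598.0]
tie = time_interval_error(edges, period_ps=400.0)
print(tie)                  # [0.0, 3.0, -3.0, 1.0, -2.0]
print(max(tie) - min(tie))  # 6.0 (peak-to-peak TIE, in ps)
```

Real instruments have to establish the "ideal" positions from the signal itself, which is one place where measurement methods start to diverge.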

Jitter can also mean unwanted variations in pulse interval, phase, pulse width, and even amplitude. It's usually caused by noise, crosstalk from other signals, and electromagnetic interference. Too much jitter increases the bit error rate (BER), because a receiver that samples near a displaced edge can capture the wrong bit value.

Jitter has always existed, but it was never a problem at lower frequencies with higher-amplitude pulses. Rising data rates shorten each bit period, so a given amount of timing error consumes a larger share of it; at the same time, shrinking IC geometries have driven pulse amplitudes down. As a result, jitter has become a significant problem, particularly in chips and systems with data rates above 2 Gbits/s. The standards that define a serial interface often specify how much jitter is allowed and how it must be measured.
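The effect of a shrinking bit period is easy to quantify by expressing jitter in unit intervals (UI), where one UI is one bit period. This Python sketch uses hypothetical helper names and an illustrative 50 ps of jitter; the numbers are not taken from any standard.

```python
def unit_interval_ps(bit_rate_bps):
    """One unit interval (bit period) in picoseconds."""
    return 1e12 / bit_rate_bps


def jitter_in_ui(jitter_ps, bit_rate_bps):
    """Express an absolute amount of jitter as a fraction of one unit interval."""
    return jitter_ps / unit_interval_ps(bit_rate_bps)


# Hypothetical: the same 50 ps of peak-to-peak jitter at three data rates.
for rate in (100e6, 2.5e9, 10e9):
    print(f"{rate / 1e9:g} Gbits/s: UI = {unit_interval_ps(rate):g} ps, "
          f"50 ps = {jitter_in_ui(50.0, rate):.3f} UI")
```

At 100 Mbits/s the 50 ps is only 0.005 UI, at 2.5 Gbits/s it is 0.125 UI, and at 10 Gbits/s it is half a bit period.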

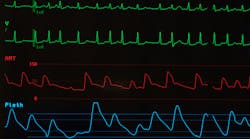

Measuring jitter is another problem. Jitter has several components: random jitter (Rj) and deterministic jitter (Dj) together make up total jitter (Tj). Each has different sources and is measured in a different way. A good way to visualize jitter is to display the data pulses in an eye diagram, which overlays many bit periods on top of one another. The fuzzier the waveform's edges, the greater the jitter.
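Because random jitter is unbounded, total jitter is normally quoted at a target BER. One widely used way to combine the components is the dual-Dirac model, Tj(BER) = Dj + 2·Q(BER)·Rj(rms), where Q(BER) is the number of Gaussian standard deviations whose one-sided tail probability equals the BER. The article does not name this model; it is an assumption here, and in it Dj is a dual-Dirac value, which is not the same as a peak-to-peak Dj reading. The function names and the 20-ps/2-ps component values below are hypothetical.

```python
import math


def q_ber(ber):
    """Solve 0.5 * erfc(q / sqrt(2)) = ber for q by bisection (Gaussian tail)."""
    lo, hi = 0.0, 20.0
    for _ in range(200):
        mid = (lo + hi) / 2
        if 0.5 * math.erfc(mid / math.sqrt(2)) > ber:
            lo = mid  # tail probability still too large: move right
        else:
            hi = mid
    return (lo + hi) / 2


def total_jitter(dj_ps, rj_rms_ps, ber=1e-12):
    """Dual-Dirac estimate (assumed model): Tj(BER) = Dj + 2 * Q(BER) * Rj_rms."""
    return dj_ps + 2 * q_ber(ber) * rj_rms_ps


# Hypothetical component values.
print(2 * q_ber(1e-12))         # about 14.07
print(total_jitter(20.0, 2.0))  # about 48.1 ps
```

At a BER of 10^-12, each picosecond of rms random jitter adds roughly 14 ps to Tj, which is why small Rj values still dominate many jitter budgets.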

A key issue is that there are numerous ways to measure jitter on a scope. The analysis is done in software, of course, but different measurement methods produce different results. Therefore, know which standard you must comply with, and make sure any scope you buy has jitter-analysis software and eye-diagram display capability.
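One reason methods disagree is the choice of "ideal" reference that edges are compared against. This Python sketch compares two reference choices on the same hypothetical edge record: a fixed clock at the nominal period, and a least-squares line fitted to the edges, which absorbs a constant frequency offset. Both functions are illustrative assumptions, not any scope vendor's algorithm; many serial standards instead define a clock-recovery reference with a specified loop bandwidth, which is yet another choice.

```python
import math


def tie_fixed(edges, period):
    """TIE against an ideal clock at the nominal period, anchored at edges[0]."""
    return [t - (edges[0] + n * period) for n, t in enumerate(edges)]


def tie_best_fit(edges):
    """TIE against a least-squares line, which removes any constant frequency offset."""
    n = len(edges)
    xs = range(n)
    mx = (n - 1) / 2  # mean of the edge indices 0..n-1
    my = sum(edges) / n
    slope = (sum((x - mx) * (y - my) for x, y in zip(xs, edges))
             / sum((x - mx) ** 2 for x in xs))
    return [y - (my + slope * (x - mx)) for x, y in zip(xs, edges)]


def rms(values):
    return math.sqrt(sum(v * v for v in values) / len(values))


# Hypothetical edges: nominal 400-ps UI, but the source runs 0.1% slow, no other jitter.
edges = [k * 400.4 for k in range(1000)]
print(rms(tie_fixed(edges, 400.0)))  # large: the frequency offset is reported as jitter
print(rms(tie_best_fit(edges)))      # essentially zero: the offset is removed
```

The same waveform yields hundreds of picoseconds of rms TIE with one reference and essentially none with the other, so a jitter number is only meaningful alongside the method that produced it.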