Backplane Optical Interconnects Deliver on Speed and Distance

Moving data at multi-gigabit rates is becoming more of a challenge for copper. Running digital signals with clock rates above 20 GHz over any significant distance requires fiber. Fiber is already used for system interconnects, and deployments like Verizon’s FiOS use fiber for last-mile connections. Custom fiber chip-to-chip and board-to-board interconnects have existed for some time, but the technology has now become much more common in these applications.

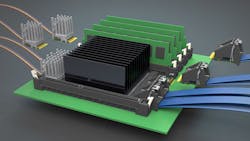

Standards like VITA 66.4 (see “Will Optics Spell The Death of the Copper Backplane?”) are driving vendors to deliver products like Samtec’s FireFly product family (Fig. 1). FireFly addresses both copper and fiber, with data rates up to 28 Gbits/s. The system uses a common connector for plug-in modules that each handle a dozen serial links over copper or fiber micro-ribbon cable. It is protocol-agnostic and can handle Ethernet, InfiniBand, Fibre Channel, and PCI Express. The optical version uses a vertical-cavity surface-emitting laser (VCSEL) array.
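As a rough worked example, assume one module with all twelve links running at the 28-Gbit/s maximum. The raw aggregate line rate is then the lane count times the per-lane rate. This ignores line-coding and protocol overhead, so usable throughput will be lower:

```latex
B_{\text{module}} = N_{\text{lanes}} \times R_{\text{lane}} = 12 \times 28\ \text{Gbits/s} = 336\ \text{Gbits/s}
```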

FireFly is not the only solution available. Other companies offer fiber solutions, such as TE Connectivity’s Coolbit, which delivers 25-Gbit/s optical connections. TE’s Lightray MPX system can handle 24 fibers on 250-µm centers.


An example of a standards-based solution is Pentek’s 5973 3U VPX FMC carrier board. It supports the VITA 66.4 standard, using Samtec’s FireFly to bring a dozen fiber connections to the backplane connector (Fig. 2). The Pentek 5973 has an FMC socket and an on-board Virtex-7 FPGA. The optional VITA 66.4 interface connects directly to the FPGA’s multi-gigabit serial interfaces.

The Pentek 5973 board can handle most FMC cards. These normally provide a front-end interface, often incorporating ADCs and DACs. Some FMC cards instead provide high-speed serial interfaces, as is the case with Techway’s TigerFMC, a VITA 57.1 card that also takes advantage of Samtec’s FireFly optical connection (Fig. 3).

Different versions of the TigerFMC expose the optical interface either on the front panel or via a cable to the carrier board, a rear transition module (RTM), or the backplane. The system can handle up to 14 Gbits/s per channel, and versions supporting 28 Gbits/s per channel are in the works.

Intel’s Silicon Photonics is still a work in progress. This technology was designed to link servers and switches using fiber connections as the enterprise arena moves to 100 Gbit/s Ethernet and beyond. The system uses special MXC connectors like those available from TE and Molex (Fig. 4). These connections can be used with Molex’s 12-fiber VersaBeam POD (parallel optical device) module.

The high-density MXC connector is designed to handle up to 64 fibers, whereas other connector technologies top out at around 24. Single-mode fiber can support distances up to 4 km. At 25 Gbits/s per fiber, a 64-fiber bundle carries 1.6 Tbits/s, the kind of bandwidth needed to meet growing data-center and cloud requirements. MXC employs expanded-beam lens technology, which helps reduce the impact of debris in the connection. It also requires less spring force for mating because of the greater tolerances this technology allows.
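The 1.6-Tbit/s figure is simply fiber count times per-fiber rate. The short sketch below reproduces it and, for comparison, applies the same 25-Gbit/s rate to a 24-fiber connector. That comparison rate is an assumption, and `aggregate_tbps` is a hypothetical helper written for illustration. Both results are raw line rates. If the fibers are split evenly between transmit and receive, per-direction bandwidth is half the figure shown.

```python
def aggregate_tbps(fibers: int, gbps_per_fiber: float) -> float:
    """Raw aggregate line rate in Tbit/s, assuming every fiber runs at the same rate."""
    return fibers * gbps_per_fiber / 1000.0

# 64-fiber MXC bundle at 25 Gbit/s per fiber (figures from the text)
mxc = aggregate_tbps(64, 25)

# Hypothetical comparison: a 24-fiber connector at the same per-fiber rate
conventional = aggregate_tbps(24, 25)

print(f"64 fibers: {mxc:.1f} Tbit/s")          # 64 fibers: 1.6 Tbit/s
print(f"24 fibers: {conventional:.1f} Tbit/s")  # 24 fibers: 0.6 Tbit/s
```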

Copper continues to push the envelope, but it is getting harder and harder for it to match the performance of fiber. When long-distance connections are involved, there is no comparison.