Lies, Damn Lies, and Big Data: Election Results and More IoT

The political advertisements are gone (at least until next year), to be replaced by analysis and crystal ball forecasting of where things will head for the next four years. I’ll leave that to the experts in other areas, and instead concentrate on the takeaway from the fallout with respect to big data and the Internet of Things (IoT).

By the way, I am going to try to tie the election, polls, big data, and deep learning together with IoT.

The big surprise, other than the results, came from the pollsters and statisticians. Most showed Hillary Clinton ahead, and while the final results were different, they did not differ by as much as many would like you to think. Keep in mind that Clinton won the popular vote, even though Donald Trump won the Electoral College (which ultimately is what counts).

Even in the Electoral College the swing was minor; it only looks large because of the winner-take-all way most states allocate their electors. Pennsylvania (where I live) was worth 20 electoral votes, and the difference in actual votes cast was on the order of 100,000. Moving only about 50,000 votes from one candidate to the other would have made it a dead heat. These numbers are a tiny fraction of the total number of votes cast. Unfortunately, a significant fraction of registered voters around the country still did not vote.
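The dead-heat arithmetic is easy to make explicit. Every vote that switches from the leader to the runner-up takes one vote away from the leader and adds one to the runner-up, so it closes the margin by two. A margin of M votes therefore vanishes after M/2 switched votes. Here is a minimal Python sketch using the roughly 100,000-vote Pennsylvania margin cited above; the 6,000,000 total-vote figure is an assumed round number for illustration, not an official count.

```python
def votes_to_flip(margin: int) -> int:
    """Votes that must switch from the leader to the runner-up to tie.

    Each switched vote closes the margin by two. Assumes an even margin;
    an odd margin cannot be tied exactly by switching votes.
    """
    return margin // 2


def fraction_of_total(votes: int, total_votes: int) -> float:
    """Share of all votes cast represented by `votes`."""
    return votes / total_votes


# Approximate margin from the text; the total is an ASSUMED round figure.
MARGIN = 100_000
ASSUMED_TOTAL_VOTES = 6_000_000

flip = votes_to_flip(MARGIN)
print(f"Votes to switch for a dead heat: {flip:,}")
print(f"Share of all votes cast: {fraction_of_total(flip, ASSUMED_TOTAL_VOTES):.2%}")
```

Under that assumed total, the votes needed for a tie come to well under 1% of the ballots cast.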

So, on to big data, where stats, polls, and the Internet of Things reside. The IoT means a lot of things to many people, but one aspect of many IoT applications is the idea that many small devices can generate lots of data that flows into a big data pool, one that provides insight, feedback, and other features that would not be available without the connected collection of gadgets. The challenge with IoT and big data is that assumptions must be made and factors determined before the data can be analyzed meaningfully.

There is no single equation or process for analyzing large amounts of data, and many algorithms are subjective or based on developers’ intuition. Tiny differences in weighting or in the analysis approach can have major effects on the results. Likewise, interpreting the results can be challenging, especially when those doing the interpretation are far removed from the ones who created the analysis. This does not even address the issues of what data is being used and how it is obtained. This is true for IoT as well as for elections.
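To make the weighting point concrete, here is a minimal Python sketch of post-stratification, one common way pollsters reweight a sample so its groups match assumed shares of the electorate. All numbers are hypothetical: two made-up demographic groups with made-up support rates, and two turnout models that differ by only four points in group composition.

```python
# HYPOTHETICAL support for candidate A within each group of a poll sample.
SUPPORT_FOR_A = {"group_1": 0.56, "group_2": 0.44}

# Two HYPOTHETICAL turnout models that differ by 4 points in group composition.
MODEL_X = {"group_1": 0.52, "group_2": 0.48}
MODEL_Y = {"group_1": 0.48, "group_2": 0.52}


def weighted_support(support: dict[str, float], population_share: dict[str, float]) -> float:
    """Post-stratified estimate: weight each group's support by its assumed share."""
    if abs(sum(population_share.values()) - 1.0) > 1e-9:
        raise ValueError("population shares must sum to 1")
    return sum(support[group] * population_share[group] for group in support)


def lead(support_a: float) -> float:
    """Candidate A's lead over B in percentage points, assuming a two-way race."""
    return (support_a - (1.0 - support_a)) * 100


for name, shares in (("model X", MODEL_X), ("model Y", MODEL_Y)):
    a = weighted_support(SUPPORT_FOR_A, shares)
    print(f"{name}: A at {a:.1%}, lead {lead(a):+.1f} points")
```

With these made-up numbers, the same raw responses put candidate A slightly ahead under one turnout model and slightly behind under the other. Nothing in the data changed; only the weighting assumption did.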

Enter deep learning.

Deep neural networks (DNNs) are the basis of deep learning. This is one of the hottest topic areas in the GPU and supercomputing space, where big data is being crunched. Many frameworks are available that simplify a developer’s job, letting developers use DNNs without even understanding how the underlying system works, sort of like getting poll results.

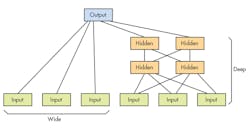

The thing is that a DNN looks so easy to understand, at least from the diagrams (see figure). The results can be very impressive, like picking a person’s face out of a crowd. The applications are staggering, and companies are being built around the functionality that DNNs, big data, and IoT can deliver.
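What such a diagram depicts is, at its core, layer after layer of weighted sums followed by a nonlinearity. Here is a minimal pure-Python sketch of a forward pass through a tiny fully connected network. The layer sizes, the hand-picked weights, and the choice of ReLU activation are arbitrary assumptions for illustration; real frameworks learn the weights through training, which this sketch leaves out entirely.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]  # one row of input weights per neuron
Layer = Tuple[Matrix, Vector]  # (weights, biases)


def relu(x: float) -> float:
    """Rectified linear unit: pass positives through, clamp negatives to zero."""
    return max(0.0, x)


def dense(inputs: Vector, weights: Matrix, biases: Vector, activate: bool = True) -> Vector:
    """One fully connected layer: each neuron sums all weighted inputs plus a bias."""
    outputs = []
    for row, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(row, inputs)) + bias
        outputs.append(relu(total) if activate else total)
    return outputs


def forward(inputs: Vector, layers: List[Layer]) -> Vector:
    """Feed the input through each layer in turn; the last layer stays linear."""
    x = inputs
    for i, (weights, biases) in enumerate(layers):
        x = dense(x, weights, biases, activate=(i < len(layers) - 1))
    return x


# HYPOTHETICAL hand-picked weights: 3 inputs -> 4 hidden -> 4 hidden -> 2 outputs.
LAYERS: List[Layer] = [
    ([[0.2, -0.5, 0.1], [0.7, 0.3, -0.2], [-0.4, 0.6, 0.5], [0.1, 0.1, 0.1]],
     [0.0, 0.1, -0.1, 0.0]),
    ([[0.3, -0.2, 0.4, 0.1], [-0.6, 0.5, 0.2, 0.3], [0.2, 0.2, -0.3, 0.4], [0.5, -0.1, 0.1, -0.2]],
     [0.05, 0.0, 0.1, -0.05]),
    ([[0.4, -0.3, 0.2, 0.6], [-0.2, 0.5, 0.3, -0.4]],
     [0.0, 0.0]),
]

print(forward([1.0, 0.5, -1.0], LAYERS))
```

The mechanics fit in a few dozen lines; the hard part, as the next paragraph argues, is everything the diagram and this sketch leave out: choosing the architecture, training the weights, and understanding why the result behaves the way it does.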

But like robotics, it is harder than it looks. I think understanding and using DNNs is a lot like learning functional programming. Wrapping your head around the concepts and really using them is very, very hard when you are moving from more conventional tools built on very different ideas. As Scotty says in Star Trek IV, “Damage control is easy. Reading Klingon, that’s hard.”

About the Author

William G. Wong

Senior Content Director

Bill Wong covers Digital, Embedded, Systems and Software topics at Electronic Design. He writes a number of columns, including Lab Bench and alt.embedded, plus Bill's Workbench hands-on column. Bill is a Georgia Tech alumnus with a B.S. in Electrical Engineering and a master's degree in computer science from Rutgers, The State University of New Jersey.

He has written a dozen books and was the first Director of PC Labs at PC Magazine. He has worked in the computer and publication industry for almost 40 years and has been with Electronic Design since 2000. He helps run the Mercer Science and Engineering Fair in Mercer County, NJ.
