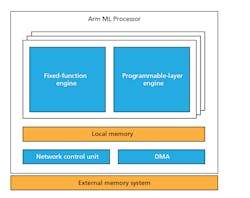

Arm’s Project Trillium targets machine learning (ML) and comprises several components, including the new ML processor (Fig. 1). The platform will support Arm CPU complexes much as GPGPUs do today, such as the Mali GPU family that’s already used for many ML applications. The ML processor’s advantage over CPUs and GPGPUs is its power efficiency and performance when running deep neural networks (DNNs).

1. Arm’s machine-learning subsystem uses two different engines to address the typical neural-network machine-learning algorithms.

Arm’s support isn’t surprising given the interest in ML and DNNs. The technology is also likely to be incorporated into hardware that already uses Arm processors, such as smartphones, where audio and video interaction is becoming the norm.

The other piece of the project is the second-generation Object Detection (OD) processor. This fixed-function vision pipeline is designed to scan HD video streams in real time at up to 60 frames/s. Objects need to be at least 50 by 60 pixels to be detected. The processor provides a list of detected objects along with attributes, such as which way a person might be facing. It can also detect gestures and poses, making it an ideal complement to the ML processor.
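To put those numbers in perspective, 60 frames/s leaves roughly 16.7 ms to process each frame, and anything smaller than 50 by 60 pixels falls below the detection floor. The short Python sketch below works through both. It is only an illustration: the `Detection` type, its fields, and the reading of "50 by 60" as width by height are assumptions, not part of any Arm interface.

```python
from dataclasses import dataclass

FRAME_RATE_HZ = 60     # maximum rate quoted for the OD processor
MIN_WIDTH_PX = 50      # assumed: "50 by 60" read as width by height
MIN_HEIGHT_PX = 60


@dataclass
class Detection:
    """Hypothetical record for a candidate object in a frame."""
    label: str
    width_px: int
    height_px: int


def frame_budget_ms(frame_rate_hz: float = FRAME_RATE_HZ) -> float:
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / frame_rate_hz


def is_detectable(obj: Detection) -> bool:
    """True if the object meets the minimum size quoted in the article."""
    return obj.width_px >= MIN_WIDTH_PX and obj.height_px >= MIN_HEIGHT_PX
```

For example, `is_detectable(Detection("person", 40, 80))` is false because the object is narrower than the assumed 50-pixel minimum.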

The ML processor can operate standalone. It also has an ACE-Lite interface so that it can be integrated with Arm’s DynamIQ cluster.

2. OD and ML processors developed by Arm will often be combined to process video streams.

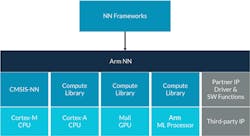

Designers will be able to combine Arm IP (Fig. 2) to create system-on-chip (SoC) platforms like Intel’s Movidius, which has a video-processing front end for handling streaming data from cameras, a set of ML processors, and a pair of RISC CPUs to manage the system.

The Arm Neural Network (NN) software development kit (SDK) allows developers to take advantage of ML support whether the support is provided by an Arm CPU, GPU, or ML processor (Fig. 3). It provides support for neural-network frameworks like Caffe and TensorFlow. The software takes advantage of Arm’s Compute Library.

3. Arm’s Neural Network (NN) software development kit bridges the interface between ML software and the underlying hardware, whether it’s an Arm CPU, GPU, or ML processor.
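The idea of one software layer sitting above several kinds of hardware can be sketched in a few lines of Python. This is not the Arm NN API. The backend names, the preference order, and the `pick_backend` helper are all hypothetical, chosen only to illustrate how a runtime might pick the most capable engine present on a given SoC.

```python
# Hypothetical preference order: most specialized engine first.
PREFERENCE = ("ml_processor", "gpu", "cpu")


def pick_backend(available):
    """Return the first preferred backend that the platform reports.

    `available` is any collection of backend names (an assumption for
    this sketch; a real SDK would discover the hardware itself).
    """
    for name in PREFERENCE:
        if name in available:
            return name
    raise RuntimeError("no supported backend available")
```

On a chip with only a CPU and GPU, `pick_backend({"cpu", "gpu"})` would select the GPU, while the same application code on a chip with an ML processor would select that instead.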

Arm’s new ML and OD processors are designed for ML applications on the edge, versus the training of DNNs that’s typically done in the cloud using ML hardware like Google’s tensor processing unit (TPU) and Nvidia’s Tesla V100. Google’s TPU board (Fig. 4) is designed for the cloud.

4. Google’s TPU board can be used to process data sets to train DNNs. The training information can then be employed on ML platforms on the edge of the internet.

Many ML applications run in the cloud. Amazon’s Alexa, for example, ships voice streams to the cloud for analysis. Moving ML inference hardware support to the edge will allow this type of processing to be done locally, which will be key for standalone operation. Today, devices like Amazon’s Echo will not work without a connection to the internet and the Alexa servers in the cloud.

Arm hasn’t released all of the details, but we do know the theoretical throughput of the ML processor is on the order of 4.6 teraoperations per second (TOPS), with a target efficiency of 3 TOPS/W using 7-nm implementations. Arm partners will be gaining access to the IP this year.
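As a rough back-of-the-envelope check, if the two figures are read as a peak throughput of 4.6 TOPS and an efficiency of 3 TOPS/W, dividing one by the other gives the implied power draw. That reading is an assumption, as is the helper name `implied_power_watts`. Actual power will depend on the workload and the silicon implementation.

```python
def implied_power_watts(throughput_tops: float,
                        efficiency_tops_per_watt: float) -> float:
    """Power implied by a throughput (TOPS) and an efficiency (TOPS/W)."""
    if efficiency_tops_per_watt <= 0:
        raise ValueError("efficiency must be positive")
    return throughput_tops / efficiency_tops_per_watt


# Figures quoted for the ML processor (interpretation is an assumption).
power_w = implied_power_watts(4.6, 3.0)
print(f"{power_w:.2f} W")  # prints "1.53 W"
```

A figure of about 1.5 W would be consistent with the smartphone-class edge devices the processor is aimed at.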