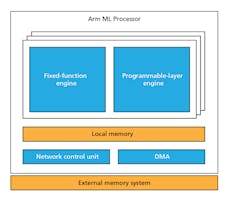

Arm is definitely targeting deep-neural-network (DNN) machine-learning (ML) applications with its proposed hardware designs, but its initial ML hardware descriptions were a bit vague (Fig. 1). Though the final details aren’t ready yet, Arm has revealed more of the architecture.

1. Arm’s original description of its machine-learning hardware was a bit vague.

The Arm ML processor is supported by the company’s Neural Network (NN) software development kit, which bridges ML software and the underlying hardware. This allows developers to target Arm’s CPUs, GPUs, and ML processors. In theory, developing on existing CPUs and GPUs while waiting for the ML hardware will allow a critical mass of software to be available when the real hardware finally arrives. Runtimes and compilers hide most of the differences between the underlying hardware.

In a sense, this is the same approach Nvidia is taking with its CUDA platform, which targets a range of its GPUs. These GPUs are similar, but each has slight differences that CUDA hides. A vendor-specific framework of this kind makes it difficult for developers to move to different platforms, although CUDA handles more than just artificial-intelligence (AI) and ML applications. Developers targeting higher-level ML tools like TensorFlow can usually move from platform to platform, including to Arm’s ML processor.

Nvidia’s NVDLA

Nvidia has also released the NVIDIA Deep Learning Accelerator (NVDLA) as open-source hardware. NVDLA is used in the company’s Xavier system-on-chip (SoC) designed for automotive and self-driving car applications.

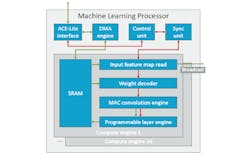

2. The machine-learning processor developed by Arm consists of an array of compute engines with MAC convolution and programmable layer engines.

The ML processor incorporates an array of up to 16 compute engines (Fig. 2). Each engine has its own local memory that its modules use to process DNN models. The flow is typical for DNN implementations: weights are applied to incoming data, the multiply-accumulate (MAC) convolution engine processes them, and the programmable layer engine (PLE) then processes the results. The MAC convolution engine has 128 MAC units.
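To make the MAC step concrete, here is a minimal Python sketch of a convolution built from explicit multiply-accumulate operations. It is illustrative only: the function name `mac_convolve`, the 1-D data layout, and the sample values are assumptions for this example, not a description of how Arm’s engine is actually organized.

```python
def mac_convolve(inputs, weights):
    """1-D 'valid' convolution (no padding, no kernel flip) built from MAC steps."""
    outputs = []
    for start in range(len(inputs) - len(weights) + 1):
        acc = 0
        for k, w in enumerate(weights):
            acc += inputs[start + k] * w  # one multiply-accumulate (MAC)
        outputs.append(acc)
    return outputs


# Hypothetical sample data: a simple edge-detecting kernel.
result = mac_convolve([1, 2, 3, 4, 5], [1, 0, -1])
print(result)  # [-2, -2, -2]
```

Each output value here costs one MAC per weight. A hardware engine with many MAC units can, in principle, run many of those inner-loop steps in the same cycle.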

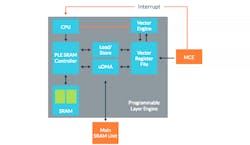

The PLE (Fig. 3) is a vectorized microcontroller that processes the results of the convolution. It resembles a RISC platform optimized for finishing the processing of one layer for a section of a multilayer DNN model. Processing a full model requires many iterations through the system, with sections processed in parallel. The PLE handles chores such as pooling and activation.

3. The Arm programmable layer engine is essentially a vectorized microcontroller designed to handle neural-network layer operations.
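The pooling and activation chores assigned to the PLE can be sketched in a few lines of Python. This is a generic illustration, assuming a ReLU activation and non-overlapping max pooling. The function names and sample values are hypothetical, and nothing here reflects the PLE’s actual instruction set.

```python
def relu(values):
    """Activation: clamp negative values to zero."""
    return [max(0, v) for v in values]


def max_pool(values, window=2):
    """Pooling: keep the largest value in each non-overlapping window.

    A trailing partial window is dropped in this sketch.
    """
    return [
        max(values[i:i + window])
        for i in range(0, len(values) - window + 1, window)
    ]


# Hypothetical convolution results handed over by the MAC stage.
conv_out = [-3, 5, 2, -1, 4, 7]
layer_out = max_pool(relu(conv_out), window=2)
print(layer_out)  # [5, 2, 7]
```

Both steps are simple element-wise or windowed operations, which is the kind of work that suits a small vector unit rather than a large MAC array.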

At TOPS Speed

Arm’s initial implementation is designed to deliver 4.6 teraoperations/s (TOPS). The version aimed at high-end mobile devices targets 3 TOPS/W using 7-nm chip technology. The design is scalable, allowing it to be employed with Arm’s range of microcontroller and microprocessor designs. It’s intended to scale from 20 gigaoperations/s (GOPS) for the Internet of Things (IoT) to 150 TOPS for high-end enterprise applications. Of course, low-end platforms will deliver less performance, but will use much less power.
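A little back-of-the-envelope arithmetic shows how these figures relate to the 16 engines and 128 MAC units per engine mentioned earlier. The sketch below rests on assumptions Arm hasn’t confirmed: that all 16 engines are present, that each MAC counts as two operations (one multiply, one add), that every unit is busy on every cycle, and that the 4.6-TOPS and 3-TOPS/W figures describe the same configuration.

```python
ENGINES = 16             # maximum number of compute engines
MACS_PER_ENGINE = 128    # MAC units per convolution engine
OPS_PER_MAC = 2          # assumption: multiply and add counted separately
TARGET_TOPS = 4.6        # quoted throughput of the initial implementation
TOPS_PER_WATT = 3.0      # quoted efficiency target at 7 nm

# Peak operations per clock cycle if every MAC unit is busy.
ops_per_cycle = ENGINES * MACS_PER_ENGINE * OPS_PER_MAC

# Clock rate that would be needed to reach the quoted throughput.
implied_clock_ghz = TARGET_TOPS * 1e12 / ops_per_cycle / 1e9

# Power implied if both quoted figures apply to the same design.
implied_power_w = TARGET_TOPS / TOPS_PER_WATT

# Span of the quoted scaling range, 20 GOPS to 150 TOPS.
scale_ratio = 150e12 / 20e9

print(ops_per_cycle)                # 4096
print(round(implied_clock_ghz, 2))  # 1.12
print(round(implied_power_w, 2))    # 1.53
print(round(scale_ratio))           # 7500
```

Under those assumptions, the quoted throughput would correspond to a clock of roughly 1.1 GHz and a power draw of about 1.5 W. These are estimates derived from the published numbers, not figures from Arm.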

Part of the challenge of nailing down a final design is deciding how to handle details such as the type and magnitude of the weights involved in the DNN. The datatypes may be as small as one bit, and a number of very small floating-point formats are being used in ML research.
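To illustrate why the datatype matters, the following Python sketch applies a common symmetric quantization scheme that maps floating-point weights onto small signed integers. It is a generic technique, not Arm’s method. The function names and sample weights are hypothetical, and the sketch assumes at least two bits, so the one-bit case mentioned above would need a different scheme.

```python
def quantize(weights, bits=8):
    """Map float weights onto signed integers in [-qmax, qmax]."""
    if bits < 2:
        raise ValueError("this sketch needs at least 2 bits")
    qmax = 2 ** (bits - 1) - 1                  # 127 for 8 bits
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs else 1.0  # float value of one integer step
    quantized = [max(-qmax, min(qmax, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers."""
    return [q * scale for q in quantized]


# Hypothetical weights for illustration.
weights = [0.5, -1.0, 0.25]
q8, scale8 = quantize(weights, bits=8)
approx = dequantize(q8, scale8)
```

Fewer bits mean a larger step size and therefore a larger rounding error per weight. That trade-off between model accuracy and hardware cost is what makes the choice of datatype hard to lock down.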

More details are expected later this year. In the meantime, though, developers are already using Arm CPU and GPU platforms for ML tasks.