New processors continually emerge from the fabs, but the nagging questions surrounding these recent arrivals are whether they’re actually faster, better, and/or more power-efficient. Generally speaking, the answer to all of them is yes. The degree of each depends heavily on what design aspects are important to your application as well as the path taken from older to newer architectures.

The low end of the spectrum has enormous growth potential—these processors tend to be on the trailing edge of transistor technology and can take advantage of the work done to develop high-performance systems. It may be a while before an 8-bit microcontroller has 3D FinFET transistors (see “16nm/14nm FinFETs: Enabling The New Electronics Frontier”); however, they’re already used with 32-bit designs.

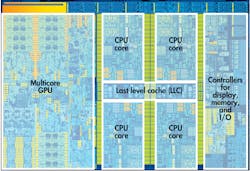

Intel's 6th-generation platform, code-named Skylake, is the current king in the desktop and mobile PC space. The 14-nm chip incorporates a Gen9 GPU that takes up a significant amount of die space (Fig. 1). This reflects the importance of GPUs in interactive platforms, where they provide additional compute power because they’re not limited to displaying graphics.

Multicore Movement

For practical purposes, heat and power have limited the top speed of a single core. Thus, designers use multiple cores to get more performance as transistors continue to shrink. The different system architectures affect the kinds of cores used and how many cores can be found on a single chip.

For example, Intel's Gen9 GPU is built from a subslice that contains eight execution units (EUs) along with L1 and L2 caches. The GT2-class GPU has a single slice that consists of three subslices, for a total of 24 EUs, plus an L3 cache. The design scales so that a GT3 has two slices with a total of 48 EUs and a GT4 has three slices with 72 EUs. The GT2 configuration is found in Intel's Core i7-6700K and Core i5-6600K processors, while the top-end GT3e and GT4e versions add an eDRAM-based L4 cache.
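The EU totals above follow from simple multiplication of the building blocks. The sketch below is a hypothetical helper, not an Intel tool; it only assumes the eight-EU subslice and three-subslice slice described in the text.

```python
# Hypothetical helper: totals Gen9 execution units (EUs) from the
# building blocks described in the text.
EUS_PER_SUBSLICE = 8      # each subslice holds eight EUs
SUBSLICES_PER_SLICE = 3   # each slice holds three subslices

# GT2, GT3, and GT4 use one, two, and three slices, respectively.
GT_SLICES = {"GT2": 1, "GT3": 2, "GT4": 3}


def gen9_eu_count(slices: int) -> int:
    """Return the total EU count for a Gen9 GPU with the given number of slices."""
    return slices * SUBSLICES_PER_SLICE * EUS_PER_SUBSLICE


for name, slices in GT_SLICES.items():
    print(f"{name}: {slices} slice(s) -> {gen9_eu_count(slices)} EUs")
```

Scaling by whole slices is what lets one GPU design span parts from mainstream to top-end.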

High-end graphics cards integrate GPUs with even more processing power, but these newer processor chips with integrated graphics can match the performance of dedicated, low-end GPU cards. AMD's APUs take a similar approach by incorporating high-performance GPU support with the CPUs.

ARM Cortex-A5x and Cortex-A7x platforms are making inroads into the conventional PC space for a variety of reasons, including low power consumption. Microsoft Windows and x86 platforms remain a dominant force. However, Linux-based tablets, smartphones, and compact PCs are being fueled by the ARM Cortex-A family, and some cores, like the Cortex-A72, specifically target these mobile devices.

Though quad- to octal-core designs are the norm for consumer and many embedded platforms, the server side has been adding cores with a vengeance. The AMD Opteron 6300 series includes up to 16 cores, while the latest Intel Xeon E5-2600 v4 family will feature up to 24 cores running 48 threads.

Initially, the top-end EP series will have 22 cores. The Broadwell-EP architecture is implemented on the latest 14-nm technology. Base clock rates were reduced, but each core delivers about a 5% performance increase. Therefore, more cores continue to deliver the major performance boost for the system.

Server platforms differ significantly from their PC counterparts. Multichip solutions, starting with 2P and 4P configurations, employ a non-uniform memory access (NUMA) shared-memory architecture. In addition, each processor chip typically has four memory channels that support more dual in-line memory modules (DIMMs), providing massive amounts of DRAM to the processor complex. Very large caches, on the order of 50 MB, help smooth out memory accesses.

Server platforms often include instructions or other support to accelerate applications that are less common on the PC side, such as database servers. One example is Intel's Transactional Synchronization Extensions (TSX), which can often improve the number of database transactions per second by a factor of four and boost typical application execution by 40%. TSX provides this support through two interfaces: Hardware Lock Elision (HLE) and Restricted Transactional Memory (RTM). Both enable optimistic locking: a thread executes a critical section without actually acquiring the lock, and the hardware rolls back the work if a conflicting memory access occurs.
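TSX itself is a hardware feature, but the optimistic-locking control flow it enables can be sketched in software. The example below is an analogy only, not TSX: it uses version-validated optimistic concurrency, where an update runs without holding the lock, commits only if no other thread committed first, and falls back to ordinary locking after repeated conflicts, much as RTM code falls back to a real lock after aborts. The class name and `max_retries` parameter are hypothetical.

```python
import threading


class OptimisticCounter:
    """Software analogy of lock elision with a fallback lock (not Intel TSX itself).

    Each update runs speculatively without holding the lock and commits only
    if no other thread committed in the meantime; on a conflict it retries,
    and after max_retries conflicts it falls back to ordinary locking.
    """

    def __init__(self, max_retries: int = 3) -> None:
        self._value = 0
        self._version = 0              # bumped on every successful commit
        self._lock = threading.Lock()  # held only briefly to validate and commit
        self.max_retries = max_retries
        self.aborts = 0                # conflicts detected (updated under the lock)
        self.fallbacks = 0             # updates that took the pessimistic path

    @property
    def value(self) -> int:
        return self._value

    def add(self, delta: int) -> None:
        for _ in range(self.max_retries):
            # Speculative phase: read the version first, then compute unlocked.
            seen = self._version
            candidate = self._value + delta
            # Commit phase: publish only if no one else committed since "seen".
            with self._lock:
                if self._version == seen:
                    self._value = candidate
                    self._version += 1
                    return
                self.aborts += 1       # conflict: discard the work and retry
        # Fallback path, like taking the real lock after repeated RTM aborts.
        with self._lock:
            self._value += delta
            self._version += 1
            self.fallbacks += 1
```

Real RTM code uses compiler intrinsics such as `_xbegin()` and `_xend()` from `<immintrin.h>`, and the hardware detects conflicts at cache-line granularity; the Python version only mirrors the retry-then-fallback structure.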

Looking forward, the next generation of Intel's server processors will incorporate the Omni-Path interconnect fabric along with the Skylake architecture already found on the PC side. Omni-Path is a switch-based fabric like InfiniBand, which has been popular in high-performance computing (HPC). Integrating it into the processor simplifies scaling and interconnects in the large systems common in public and private clouds.

Intel's Xeon Phi (see “ARMv8, GPUs And Knights Landing At ISC 2014”) will get a refresh this year. Its current incarnation already supports more than 60 CPU cores.

The other elephant in the server room is the 64-bit ARMv8 platform, with chips from companies like AMD, AppliedMicro, and Cavium (see “64-bit ARM Platforms Target the IoT Cloud” on electronicdesign.com). Though these chips have yet to challenge the top-end Xeon platforms in performance, they’re making a difference in the low- to mid-range server market as well as in specialized areas such as storage and network processing. These application areas are actually more common than high-end systems.

AMD's Hierofalcon features up to eight 64-bit Cortex-A57 cores using 28-nm technology, while AppliedMicro's Helix also has up to eight 64-bit cores. Typically, 10 Gigabit Ethernet is available on-chip along with PCI Express Gen 3, which is the de facto standard on all platforms from PCs through servers.

Cavium's ThunderX delivers up to 48 cores and 100 Gigabit Ethernet. Like AppliedMicro, Cavium offers SoCs with multiple SATA interfaces to address network storage applications. Gigabyte's H270-T70 motherboard (Fig. 2) includes two 2.5-GHz Cavium ThunderX CN8890 SoCs with 48 cores each. The Cavium Coherent Processor Interconnect (CCPI) links the two SoCs for a total of 96 cores with access to 1 TB of 2400-MHz DDR4 DRAM. Four boards fit into a 2U server chassis to provide 384 cores and 4 TB of memory. Each node also has four hot-swappable SATA drives.
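The density figures for the H270-T70 follow from straightforward multiplication of the per-SoC and per-board numbers quoted above. The constant names are illustrative only.

```python
# Figures quoted in the text for the Gigabyte H270-T70 configuration.
CORES_PER_SOC = 48          # ThunderX CN8890
SOCS_PER_BOARD = 2          # linked by CCPI
DRAM_TB_PER_BOARD = 1       # 2400-MHz DDR4 shared by both SoCs
SATA_DRIVES_PER_BOARD = 4   # hot-swappable, one set per node
BOARDS_PER_2U = 4

cores_per_board = CORES_PER_SOC * SOCS_PER_BOARD
cores_per_2u = cores_per_board * BOARDS_PER_2U
dram_tb_per_2u = DRAM_TB_PER_BOARD * BOARDS_PER_2U
drives_per_2u = SATA_DRIVES_PER_BOARD * BOARDS_PER_2U

print(f"{cores_per_board} cores and {DRAM_TB_PER_BOARD} TB per board")
# prints "96 cores and 1 TB per board"
print(f"{cores_per_2u} cores, {dram_tb_per_2u} TB DRAM, {drives_per_2u} drives per 2U")
# prints "384 cores, 4 TB DRAM, 16 drives per 2U"
```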

GPUs have come into their own as major compute platforms. Servers with multiple GPU boards are common for many HPC and specialized applications. It’s possible to have a blended CPU/GPU like those on the PC side. However, for servers, it usually makes more sense to dedicate silicon and board area to either CPU or GPU support, so that more powerful systems can be optimized for the application area. PCI Express is the usual interconnect mechanism.

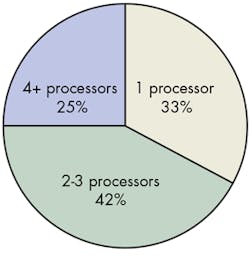

Barr Group's latest survey (see “What Does Your Company Do About Safety and Security?” on electronicdesign.com) indicates that embedded developers are using multicore solutions in two-thirds of new projects (Fig. 3). The survey did not break out how these cores are connected and used, which matters because symmetric multiprocessing (SMP), as found in Intel and AMD CPUs, scales differently from asymmetric multiprocessing (AMP), in which cores are dedicated to different chores within the system. In AMP, the cores may be identical or different, but each is tailored to its own job, such as a DSP core for a motor-control application or a packet processor for network traffic.
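The SMP/AMP distinction comes down to how work reaches the cores. The sketch below models cores as threads and is a conceptual illustration only; the job types, handler functions, and core names are hypothetical. In the SMP model, identical cores pull any job from one shared queue; in the AMP model, each core owns a queue and handles a single kind of job.

```python
import queue
import threading
from collections import defaultdict

# Hypothetical job handlers standing in for real workloads.
HANDLERS = {
    "motor": lambda x: x * 2,    # e.g., a DSP-style motor-control step
    "packet": lambda x: x + 1,   # e.g., per-packet network processing
}


def _drain(jobs, results, lock, core_name):
    """Run jobs from a queue until it is empty, recording which core ran each."""
    while True:
        try:
            kind, payload = jobs.get_nowait()
        except queue.Empty:
            return
        out = HANDLERS[kind](payload)
        with lock:
            results[core_name].append((kind, out))


def _run(core_queues):
    """Start one thread per (core name, queue) pair and wait for all of them."""
    results, lock = defaultdict(list), threading.Lock()
    cores = [threading.Thread(target=_drain, args=(q, results, lock, name))
             for name, q in core_queues]
    for core in cores:
        core.start()
    for core in cores:
        core.join()
    return dict(results)


def run_smp(jobs, n_cores=2):
    """SMP: identical cores share one queue, so any core may run any job."""
    shared = queue.Queue()
    for job in jobs:
        shared.put(job)
    return _run([(f"core{i}", shared) for i in range(n_cores)])


def run_amp(jobs):
    """AMP: each core is dedicated to one job type and has its own queue."""
    dedicated = {kind: queue.Queue() for kind in HANDLERS}
    for kind, payload in jobs:
        dedicated[kind].put((kind, payload))
    return _run([(f"{kind}-core", q) for kind, q in dedicated.items()])
```

With SMP, adding cores adds general capacity; with AMP, each added core is sized and placed for a specific task, which is why the two scale so differently.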

Processor Enhancements

Intel's Skylake delivers 2.5 times the productivity of a five-year-old mobile PC, along with three times the battery life, 30 times better 3D graphics, and instant-on capabilities due to flash-memory support. It’s all very good, but one thing is missing: significantly faster processor cores. The latest cores tend to be faster, but not by factors as large as those just mentioned. Nonetheless, a lot has changed under the hood.

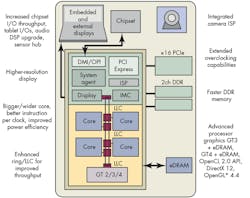

Of course, the GPU is one of the major changes with Skylake, but other key upgrades include eDRAM (Fig. 4). The eDRAM is on-package memory that comes in 64- or 128-Mbyte versions. The approach is similar to the high-bandwidth memory (HBM) AMD uses on its latest Radeon R9 GPU (see “High-Density Storage”): it brings more, faster memory closer to the cores. Other platforms, such as IBM's POWER7, have incorporated eDRAM, too. Skylake uses an enhanced ring architecture to link the eDRAM, the last-level cache (LLC), and the CPU and GPU cores.

AMD's A-series application processing units (APUs) targeted at PC applications now incorporate a security module based on an ARM Cortex-A5. The Platform Security Processor (PSP) implements ARM's TrustZone security technology that manages the host, x86 core, and GPU in an APU (see “Platform Security Processor Protects Low Power APUs”). Most processors intended for the PC market, and all for the server market, feature improved security technology.

On the server side, more cores are a given. However, the Xeon E5-2600 v4 also increases key encryption-algorithm performance by 70%. Instructions such as ADCX/ADOX (large-integer add with carry) and PCLMULQDQ (carry-less multiplication of quadwords) help accelerate algorithms like RSA, ECC, and the Secure Hash Algorithm (SHA). There’s also a hardware random number generator on-chip.
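To show what these instructions compute, the sketch below implements both operations in plain Python. `clmul64` reproduces the arithmetic of PCLMULQDQ on two 64-bit inputs: partial products are combined with XOR, so no carries propagate. `add_limbs` shows the multi-precision carry chain that ADC-style instructions execute one 64-bit limb at a time; ADCX and ADOX use separate flags (CF and OF), which lets two such chains run interleaved. Both function names are hypothetical, and the code models the math, not the instructions' performance.

```python
MASK64 = (1 << 64) - 1


def clmul64(a: int, b: int) -> int:
    """Carry-less (GF(2) polynomial) product of two 64-bit values.

    Shifted copies of a are XORed together instead of added, so the result
    (up to 127 bits wide) has no carry propagation.
    """
    a &= MASK64
    b &= MASK64
    result = 0
    for i in range(64):
        if (b >> i) & 1:
            result ^= a << i
    return result


def add_limbs(x: list[int], y: list[int]) -> list[int]:
    """Add two little-endian lists of 64-bit limbs with an explicit carry chain."""
    n = max(len(x), len(y))
    x = x + [0] * (n - len(x))   # pad copies; inputs are not modified
    y = y + [0] * (n - len(y))
    out, carry = [], 0
    for xi, yi in zip(x, y):
        s = xi + yi + carry
        out.append(s & MASK64)   # low 64 bits stay in this limb
        carry = s >> 64          # carry-out feeds the next limb
    if carry:
        out.append(carry)
    return out
```

For example, `clmul64(3, 3)` is 5 rather than 9, because (x + 1)² = x² + 1 when coefficients are added modulo 2.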

Another major difference on the server side is resource management, which is normally automatic on the PC side as well as on many embedded platforms. An example is Intel's Cache Monitoring Technology (CMT), which tracks cache usage so that a system administrator can monitor per-virtual-machine (VM) resource usage, adjust scheduling policies, and address contention issues if needed. CMT can also be used to deliver tiered classes of service. Moreover, it could benefit embedded applications with remote-management capabilities, or help guarantee cache resources for high-priority applications.
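As one concrete illustration, Linux exposes CMT data through its resctrl filesystem. The sketch below assumes that layout, in which each monitoring group directory contains `mon_data/mon_L3_XX/llc_occupancy` files holding a byte count, and that groups sit under a `mon_groups` directory (typically `/sys/fs/resctrl/mon_groups` when resctrl is mounted). The function names and per-VM group names are hypothetical.

```python
from pathlib import Path


def llc_occupancy_by_group(mon_groups_dir: str) -> dict[str, int]:
    """Sum CMT cache occupancy (bytes) across L3 domains for each monitoring group.

    Assumes the Linux resctrl layout, where each group directory contains
    mon_data/mon_L3_XX/llc_occupancy files holding a byte count.
    """
    totals: dict[str, int] = {}
    for group in sorted(Path(mon_groups_dir).iterdir()):
        if not group.is_dir():
            continue
        files = sorted(group.glob("mon_data/mon_L3_*/llc_occupancy"))
        totals[group.name] = sum(int(f.read_text()) for f in files)
    return totals


def heaviest_group(totals: dict[str, int]) -> str:
    """Return the group (e.g., one VM's tasks) currently occupying the most L3 cache."""
    return max(totals, key=totals.get)
```

An administrator could poll `llc_occupancy_by_group("/sys/fs/resctrl/mon_groups")` with one group per VM and use `heaviest_group` to spot the VM crowding others out of the cache.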

Intel's Memory Bandwidth Monitoring (MBM) provides similar tracking for the L3/memory interface, and its data can guide NUMA optimizations.
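MBM counters are cumulative byte counts, so bandwidth comes from sampling twice and dividing by the interval. The sketch below assumes the Linux resctrl files `mbm_total_bytes` and `mbm_local_bytes` under a group's `mon_data/mon_L3_XX` directory; the gap between total and local traffic is the share going to remote NUMA nodes, which is the figure a NUMA optimization would try to shrink. Function names and the default domain are hypothetical.

```python
import time
from pathlib import Path


def bandwidth_mb_s(bytes_before: int, bytes_after: int, interval_s: float) -> float:
    """Average bandwidth (MB/s) from two samples of a cumulative MBM byte counter."""
    if interval_s <= 0:
        raise ValueError("interval must be positive")
    return (bytes_after - bytes_before) / interval_s / 1e6


def remote_fraction(total_delta: int, local_delta: int) -> float:
    """Share of memory traffic that went to a remote NUMA node."""
    if total_delta == 0:
        return 0.0
    return (total_delta - local_delta) / total_delta


def read_counter(group_dir: str, domain: str, name: str) -> int:
    """Read one resctrl counter file, e.g. mon_data/mon_L3_00/mbm_total_bytes."""
    return int(Path(group_dir, "mon_data", domain, name).read_text())


def sample_group(group_dir: str, domain: str = "mon_L3_00", interval_s: float = 1.0):
    """Return (total bandwidth in MB/s, remote fraction) over one sampling interval."""
    t0 = time.monotonic()
    tot0 = read_counter(group_dir, domain, "mbm_total_bytes")
    loc0 = read_counter(group_dir, domain, "mbm_local_bytes")
    time.sleep(interval_s)
    t1 = time.monotonic()
    tot1 = read_counter(group_dir, domain, "mbm_total_bytes")
    loc1 = read_counter(group_dir, domain, "mbm_local_bytes")
    return (bandwidth_mb_s(tot0, tot1, t1 - t0),
            remote_fraction(tot1 - tot0, loc1 - loc0))
```

A high remote fraction for a group suggests its threads and memory sit on different sockets, a candidate for rebinding to a single NUMA node.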

Common instruction sets allow many applications to run equally well on PC and server-class processors. As noted, though, major differences exist between the two. Newer processors are all reaping the rewards of lower-power technologies and better security, but the method of implementation, scale of the support, and level of control differ significantly.

Server-class platforms benefit from additional CPU cores, and because their GPUs are separate, GPUs can be added and scaled independently of the CPU cluster. On the PC side, the CPU/GPU combination has its own advantages, with GPU improvements providing the biggest performance boost. And don’t forget that the move to 4K displays will also drive higher-performance GPUs.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis.

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Master's in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK, as well as some PHP programming for Drupal websites, and I have posted a few Drupal modules.

I still get hands-on with software and electronic hardware; some of this work can be found in our Kit Close-Up video series. You can also see me in many of our TechXchange Talk videos. I am interested in a range of projects, from robotics to artificial intelligence.