Ultra-Low-Power Micro Tackles Video AI Chores

Not every new microcontroller has artificial-intelligence/machine-learning (AI/ML) acceleration built in. It just seems that way. For ultra-low-power applications, the trick is to deliver the required performance within the target power envelope.

Eta Compute’s ECM3532 is an ultra-low-power solution that can run for a very long time on a single-cell battery while still handling advanced AI/ML applications such as voice and image recognition. Competing solutions exist in this space, but doing the job while using as little power as Eta Compute’s MCU is no easy task.

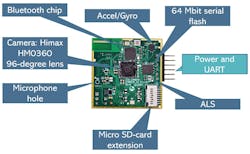

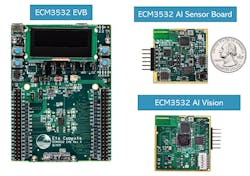

Eta Compute has made a tiny, 1.5- × 1.5-in. vision board available to developers (Fig. 1). The ECM3532 AI Vision Board can run off a CR2032 coin-cell battery. The module contains a Himax camera and runs machine-learning algorithms on the video in real time. Also included are a 3D accelerometer and gyro, a Texas Instruments OPT3001 ambient light sensor, a temperature sensor, and a microphone for audio input. Bluetooth communication is part of the package. There’s even a buck converter in the 5- × 5-mm BGA package.
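As one concrete example of the board’s sensor data, the OPT3001 reports light level as a 16-bit result register rather than directly in lux. TI’s datasheet defines the upper four bits as an exponent E and the lower 12 bits as a mantissa R, with lux = 0.01 × 2^E × R. The sketch below only shows that decoding step; the function name is illustrative, and how firmware on the ECM3532 board reads the register over I²C is not covered here.

```python
def opt3001_raw_to_lux(raw: int) -> float:
    """Convert a 16-bit OPT3001 result-register value to lux.

    Illustrative helper (not part of any Eta Compute SDK). Follows the
    datasheet format: bits 15:12 = exponent E, bits 11:0 = mantissa R.
    """
    if not 0 <= raw <= 0xFFFF:
        raise ValueError("result register is 16 bits")
    exponent = (raw >> 12) & 0xF        # E[3:0]: automatic range selection
    mantissa = raw & 0x0FFF             # R[11:0]: 12-bit result
    lsb_lux = 0.01 * (1 << exponent)    # LSB size doubles with each exponent step
    return lsb_lux * mantissa

# 0x1234 -> E = 1, R = 0x234 (564) -> 0.02 * 564, roughly 11.28 lux
print(opt3001_raw_to_lux(0x1234))
```

Splitting the value this way lets the sensor cover a wide dynamic range with a 12-bit mantissa; the largest exponent used for full scale (E = 11) gives about 83.9 klux.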

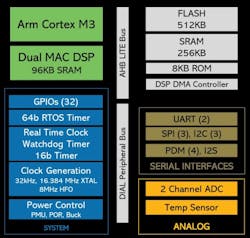

The ECM3532 system-on-chip (SoC) features two processor cores (Fig. 2). The 100-MHz Arm Cortex-M3 draws only 5 µA/MHz. The trick to consuming so little power is continuous voltage and frequency scaling (CVFS). CVFS is also applied to the CoolFlux DSP16, a 16-bit core with a dual 16- × 16-bit MAC. In addition, the DSP has its own 32 kB of program memory and 64 kB of RAM. The DSP does the heavy lifting for AI/ML models, although the Cortex-M3 core can help as well. The system also has 512 kB of flash, 256 kB of SRAM, and an 8-kB ROM boot loader.
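To put the 5-µA/MHz figure in perspective, here’s a back-of-the-envelope sketch of Cortex-M3 core current and ideal coin-cell runtime. The 225-mAh capacity (a typical CR2032 rating is roughly 220 to 240 mAh), the 1-µA sleep current, and the 1% duty cycle are assumptions for illustration, not Eta Compute data, and the camera, radio, DSP, and battery derating are all ignored.

```python
# Illustrative assumptions, NOT Eta Compute specifications:
UA_PER_MHZ = 5.0      # Cortex-M3 figure quoted in the article
CR2032_MAH = 225.0    # assumed nominal coin-cell capacity
SLEEP_UA = 1.0        # hypothetical sleep-mode current

# Note: 5 uA/MHz is equivalent to about 5 pC of charge per clock cycle.

def active_current_ua(freq_mhz: float) -> float:
    """Core current at a given clock, assuming a constant uA/MHz figure."""
    return UA_PER_MHZ * freq_mhz

def average_current_ua(freq_mhz: float, duty_cycle: float,
                       sleep_ua: float = SLEEP_UA) -> float:
    """Time-weighted average of active and sleep current."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return duty_cycle * active_current_ua(freq_mhz) + (1.0 - duty_cycle) * sleep_ua

def battery_life_days(avg_ua: float, capacity_mah: float = CR2032_MAH) -> float:
    """Ideal runtime: capacity (converted to uAh) divided by average draw."""
    return capacity_mah * 1000.0 / avg_ua / 24.0

print(active_current_ua(100.0))        # 500.0 uA at the full 100 MHz
avg = average_current_ua(100.0, 0.01)  # core awake 1% of the time
print(avg, battery_life_days(avg))
```

A constant µA/MHz means the charge per clock cycle stays the same regardless of clock rate. The extra savings that CVFS targets come from lowering the supply voltage along with the frequency, which this simple model doesn’t capture.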

The ECM3532’s two-channel, 12-bit, 200-ksample/s analog-to-digital converter (ADC) typically consumes 1 µW, and one channel is tied to the module’s microphone. The chip can use this input to capture audio streams for analyzing voice commands or ambient sounds, drawing on the same AI/ML acceleration applied to video streams (Fig. 3).
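Audio models typically expect signed, zero-centered samples, so raw converter codes usually need a small conversion step before inference. The sketch below assumes the ADC returns unsigned offset-binary codes from 0 to 4095 with silence near mid-scale (2048). That output format is an assumption, not something documented here, and the helper names are hypothetical.

```python
ADC_BITS = 12
MID_SCALE = 1 << (ADC_BITS - 1)   # 2048: assumed code for a silent input
SHIFT = 16 - ADC_BITS             # 4 bits of headroom to fill the int16 range

def adc12_to_pcm16(codes):
    """Center each assumed unsigned 12-bit code on zero and scale to int16."""
    out = []
    for c in codes:
        if not 0 <= c < (1 << ADC_BITS):
            raise ValueError(f"code {c} outside 12-bit range")
        out.append((c - MID_SCALE) << SHIFT)
    return out

def raw_bit_rate(sample_rate_hz: int, bits: int = ADC_BITS) -> int:
    """Uncompressed data rate for one channel, in bits per second."""
    return sample_rate_hz * bits

print(adc12_to_pcm16([0, 2048, 4095]))  # [-32768, 0, 32752]
print(raw_bit_rate(200_000))            # 2400000 bits/s at the full 200 ksamples/s
```

Shifting left by four bits keeps the full 12-bit resolution while matching the 16-bit sample width that many audio pipelines use.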

Eta Compute’s TENSAI Flow tools and runtime make that possible. Developers can create TensorFlow Lite models and download the trained models to the SoC, and TENSAI Flow can distribute a model across the two processors when applicable. The TENSAI framework also includes TENSAI Systems and Sensors and TENSAI Algorithms and Neural Networks.
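The article doesn’t describe TENSAI Flow’s internals. However, TensorFlow Lite models aimed at microcontrollers are commonly quantized to 8-bit integers, and TensorFlow Lite represents each quantized tensor with an affine mapping, real = scale × (q − zero_point). The sketch below illustrates only that number format. It is not Eta Compute’s code, and the function names and example ranges are made up.

```python
QMIN, QMAX = -128, 127   # int8 range

def choose_params(rmin: float, rmax: float):
    """Pick (scale, zero_point) so that [rmin, rmax] spans the int8 range."""
    rmin, rmax = min(rmin, 0.0), max(rmax, 0.0)  # real 0.0 must be representable
    if rmax == rmin:
        return 1.0, 0                              # degenerate all-zero tensor
    scale = (rmax - rmin) / (QMAX - QMIN)
    zero_point = int(round(QMIN - rmin / scale))
    return scale, max(QMIN, min(QMAX, zero_point))

def quantize(values, scale, zero_point):
    """real -> int8: q = round(r / scale) + zero_point, clamped to int8."""
    # Python's round() breaks ties to even; TFLite kernels may round ties differently.
    return [max(QMIN, min(QMAX, round(v / scale) + zero_point)) for v in values]

def dequantize(qvalues, scale, zero_point):
    """int8 -> real: r = scale * (q - zero_point)."""
    return [scale * (q - zero_point) for q in qvalues]

scale, zp = choose_params(-64.0, 63.5)             # scale 0.5, zero point 0
q = quantize([1.0, -64.0, 63.5, 100.0], scale, zp)
print(q)                                           # [2, -128, 127, 127]; 100.0 saturates
print(dequantize(q, scale, zp))                    # [1.0, -64.0, 63.5, 63.5]
```

Storing weights and activations this way shrinks a model to a quarter of its 32-bit floating-point size and turns most of the math into integer multiply-accumulates, the kind of work a fixed-point DSP MAC handles well.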

The 1.4- × 1.4-in. ECM3532 AI Sensor board and the ECM3532 EVB evaluation board round out the latest offerings (Fig. 4). The sensor board has a pair of microphones but forgoes the camera. The EVB provides access to all peripheral ports.

The ECM3532 is great for mobile applications because of its low power requirements. The ability to handle vision tasks like human-presence detection, object detection, and gesture detection, as well as voice interaction, in such a small, low-power package opens up many options for developers. Eta Compute has also partnered with Synaptics to co-develop AI/ML support for Synaptics’ Katana Edge AI SoC using Eta Compute’s TENSAI tools.

About the Author

William G. Wong

Senior Content Director - Electronic Design and Microwaves & RF

I am Editor of Electronic Design focusing on embedded, software, and systems. As Senior Content Director, I also manage Microwaves & RF and I work with a great team of editors to provide engineers, programmers, developers and technical managers with interesting and useful articles and videos on a regular basis. Check out our free newsletters to see the latest content.

You can send press releases for new products for possible coverage on the website. I am also interested in receiving contributed articles for publishing on our website. Use our template and send it to me along with a signed release form.

Check out my blog, AltEmbedded on Electronic Design, as well as my latest articles on this site.

I earned a Bachelor of Electrical Engineering at the Georgia Institute of Technology and a Master’s in Computer Science from Rutgers University. I still do a bit of programming using everything from C and C++ to Rust and Ada/SPARK. I also do some PHP programming for Drupal websites and have posted a few Drupal modules.

I still get hands-on with software and electronic hardware. Some of this can be found in our Kit Close-Up video series. You can also see me in many of our TechXchange Talk videos. I am interested in a range of projects, from robotics to artificial intelligence.