New Memories Making Meaningful Strides

What you’ll learn:

- What is trending in embedded memory technology?

- Why ReRAM is important.

Every year, enormous effort is devoted to the development of new memory technologies, great advances are made, and fascinating new products and prototypes are introduced. So, why haven’t these new memories become commonplace? Just to clarify, we’re talking about memory technologies like MRAM, ReRAM, FRAM, and PCM. What’s happening with all of these memories?

For those unfamiliar with these acronyms, let’s spell them out. As with today’s leading memory technologies (DRAM, NAND flash, NOR flash, SRAM, and EEPROM) and the technologies that preceded them, specifically mask ROM and EPROM, the names stem from how they store bits. For the new memories, it’s pretty straightforward:

- MRAM (magnetic RAM) uses magnetism to store the bit’s state just like a hard drive, but without any moving parts.

- ReRAM (resistive RAM) stores a bit as a resistance, either a high or a low one. The state typically depends on whether a metal filament runs through an insulator, or whether oxygen ions have been driven out of the insulator to form a conducting path.

- FRAM (ferroelectric RAM) is tricky, as its name might imply that it’s made out of iron (“ferro”) or has magnetic properties similar to iron, but neither is the case. The name comes from its polarization-versus-voltage curve, which forms a hysteresis loop shaped like the magnetization-versus-field curve of a magnetic material. These devices typically store a bit by displacing an atom within a crystal.

- Finally, PCM (phase-change memory) uses the phases of a material to store a bit. If the material solidifies in an amorphous (glassy) phase, its resistance is high. If it solidifies in a crystalline phase, its resistance is low.
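Because ReRAM and PCM both store a bit as a resistance, reading one comes down to comparing the cell against a reference, much as a sense amplifier does. The Python sketch below models that comparison. The read voltage, the resistance values, and the convention that low resistance means 1 are assumptions chosen for illustration, not figures from any particular device.

```python
# Minimal sketch of reading a resistance-based bit (ReRAM or PCM).
# All values below are hypothetical, chosen only to illustrate the idea.

READ_VOLTAGE_V = 0.2                # small read bias, low enough not to disturb the cell
LOW_RESISTANCE_OHMS = 10e3          # e.g., filament present (ReRAM) or crystalline (PCM)
HIGH_RESISTANCE_OHMS = 1e6          # e.g., no filament (ReRAM) or amorphous (PCM)
REFERENCE_RESISTANCE_OHMS = 100e3   # geometric mean of the two states


def cell_current_a(resistance_ohms: float) -> float:
    """Current drawn by a cell at the read voltage (Ohm's law)."""
    return READ_VOLTAGE_V / resistance_ohms


def read_bit(resistance_ohms: float) -> int:
    """Compare the cell current with a reference current.

    This sketch assumes low resistance (high current) encodes 1;
    the mapping is a design convention, not a physical law.
    """
    reference_current_a = cell_current_a(REFERENCE_RESISTANCE_OHMS)
    return 1 if cell_current_a(resistance_ohms) > reference_current_a else 0


def read_word(resistances_ohms: list[float]) -> list[int]:
    """Read several cells, as a memory would read a word in parallel."""
    return [read_bit(r) for r in resistances_ohms]


if __name__ == "__main__":
    cells = [LOW_RESISTANCE_OHMS, HIGH_RESISTANCE_OHMS,
             HIGH_RESISTANCE_OHMS, LOW_RESISTANCE_OHMS]
    print(read_word(cells))  # [1, 0, 0, 1]
```

Placing the reference between the two states leaves margin on both sides, so a cell whose resistance drifts somewhat still reads correctly.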

These aren’t really new technologies. FRAM has been around for over 70 years and PCM for over 50, but they’re still waiting at the door, hoping for a chance to get into the memory market in a big way.

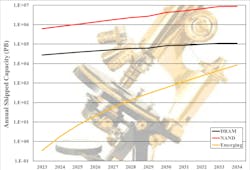

However, these non-volatile memories are starting to make a difference. In fact, their market is growing at a rate that should bring it to revenues of $72 billion by 2034, with bit shipments growing faster than those of established technologies and bringing it closer to the realm dominated today by NAND flash and DRAM (see figure).

Big Things are Happening in Embedded Memory

The biggest strides are being made in embedded memory, the memory in systems-on-chip (SoCs): microcontrollers, ASICs, and other chips that have used NOR flash since it was invented in the 1980s.

Why would this be? Well, with the advent of the FinFET at 14 nm, embedded flash memory was no longer an option. Of course, even if CMOS logic hadn’t moved to a FinFET structure, 14 nm was out of reach for planar flash anyway, for reasons we won’t go into here. So, what were designers to do when they wanted on-chip nonvolatile memory?

For the short term, they took the memory off-chip and started using external SPI (Serial Peripheral Interface) NOR chips to store programs, loading the code into on-chip SRAM caches at boot-up. This works well, but it adds cost and consumes space.

The longer-term solution is to use a memory that can scale to processes smaller than NOR’s 28-nm limit. Today, those are MRAM and ReRAM, but mostly MRAM. While that may change in the future, today MRAM is king, and it’s starting to be seen in a number of wearable applications for health monitoring and other similar functions. Larger foundries offer it as an option to their standard CMOS logic processes, and more forward-thinking designers are embracing it with good results.

One key benefit is that, because the memory is nonvolatile, the SoC’s on-chip firmware can power it down without losing its contents and wake it only when needed, saving a lot of energy while it’s turned off. All of these new technologies support this.
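To see why power-down matters, consider a duty-cycled wearable whose memory is busy for only a tiny fraction of each second. The Python sketch below compares an SRAM-style array, which must stay powered to keep its data, with a nonvolatile array that is switched off between accesses and pays a fixed wake-up cost. The function name and every power and energy figure are hypothetical, and the model ignores write energy and peripheral circuitry.

```python
# Minimal sketch: energy per duty cycle for a volatile array that must stay
# powered to keep its data versus a nonvolatile array that can be switched off.
# All numbers are hypothetical and ignore write energy, peripheral circuits,
# and process variation.


def energy_per_cycle_j(
    active_power_w: float,
    active_time_s: float,
    cycle_time_s: float,
    retention_power_w: float = 0.0,
    wake_energy_j: float = 0.0,
    power_gated: bool = False,
) -> float:
    """Energy used by a memory array over one wake/sleep cycle."""
    if active_time_s > cycle_time_s:
        raise ValueError("active time cannot exceed the cycle time")
    idle_time_s = cycle_time_s - active_time_s
    active_energy_j = active_power_w * active_time_s
    if power_gated:
        # Nonvolatile: contents survive power-off, so idle time costs nothing,
        # but each wake-up pays a fixed energy cost.
        return active_energy_j + wake_energy_j
    # Volatile: the array must stay powered for the whole idle period.
    return active_energy_j + retention_power_w * idle_time_s


if __name__ == "__main__":
    # A wearable-style workload: awake for 1 ms out of every second.
    sram = energy_per_cycle_j(active_power_w=10e-3, active_time_s=1e-3,
                              cycle_time_s=1.0, retention_power_w=50e-6)
    nvm = energy_per_cycle_j(active_power_w=12e-3, active_time_s=1e-3,
                             cycle_time_s=1.0, wake_energy_j=1e-6,
                             power_gated=True)
    print(f"SRAM kept alive: {sram * 1e6:.2f} uJ per cycle")
    print(f"NVM power-gated: {nvm * 1e6:.2f} uJ per cycle")
```

With these made-up numbers the power-gated array wins because the idle period dominates. If the memory were busy most of the time, the wake-up cost and the higher active power assumed here for the new memory would narrow or reverse the gap.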

But something bigger is soon to happen. Like NOR flash, SRAM is also running into scaling issues. SRAM isn’t shrinking as fast as the logic, and that prevents chips from shrinking in proportion to the process technology. This makes SRAM more and more expensive as time moves on.

While the new memory technologies don’t suffer from this problem, they’re slower than SRAM. As a result, system designers need to make some difficult decisions about how much nonvolatile memory (MRAM, ReRAM, FRAM, or PCM) to put onto the chip, and how much SRAM to use as a cache for it so that performance still meets its goals.

Cache design is always a challenge, because sometimes big and slow beats fast and small. This would be a concern even if SRAM scaled, since the new memories need only a single transistor per bit, whereas SRAM needs six. Add the fact that firmware can also be optimized for the memory configuration, and the sheer number of variables makes this quite a challenge.
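The “big and slow beats fast and small” trade-off can be made concrete with the textbook average memory access time (AMAT) formula, AMAT = hit time + miss rate × miss penalty, where the miss penalty here is the time to read the nonvolatile array. The Python sketch below is only an illustration: the configurations, hit times, miss rates, and penalties are hypothetical, and in practice the miss rates would come from simulating the actual firmware.

```python
# Minimal sketch of the "big and slow vs. fast and small" trade-off using the
# textbook average memory access time (AMAT) formula:
#     AMAT = hit_time + miss_rate * miss_penalty
# Hit times, miss rates, and penalties below are hypothetical; real values
# would come from simulating the target firmware on each cache configuration.

from typing import NamedTuple


class CacheConfig(NamedTuple):
    name: str
    hit_time_cycles: float  # latency of an SRAM cache hit
    miss_rate: float        # fraction of accesses that go to the NVM array


def amat_cycles(cfg: CacheConfig, miss_penalty_cycles: float) -> float:
    """Average cycles per access for an SRAM cache in front of an NVM array."""
    return cfg.hit_time_cycles + cfg.miss_rate * miss_penalty_cycles


def best_config(configs: list[CacheConfig], miss_penalty_cycles: float) -> CacheConfig:
    """Pick the configuration with the lowest average access time."""
    return min(configs, key=lambda c: amat_cycles(c, miss_penalty_cycles))


if __name__ == "__main__":
    small_fast = CacheConfig("small, fast SRAM cache", hit_time_cycles=1, miss_rate=0.10)
    big_slow = CacheConfig("big, slower SRAM cache", hit_time_cycles=2, miss_rate=0.02)
    # A slow NVM read favors the bigger cache; a quick one favors the smaller.
    for penalty in (20, 5):
        for cfg in (small_fast, big_slow):
            print(f"penalty={penalty:>2}  {cfg.name}: {amat_cycles(cfg, penalty):.2f} cycles")
        print("  winner:", best_config([small_fast, big_slow], penalty).name)
```

With a 20-cycle penalty the bigger, slower cache wins; with a 5-cycle penalty the small, fast one does. A fuller model would also charge each option for its SRAM area, which is where the new memories’ one-transistor cell comes in.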

In short, embedded SRAM is also threatened with replacement by a new memory. Such change is starting to move pretty fast. With it comes the promise of persistent memory in processor caches, and considerably lower energy consumption even in servers, bringing an altogether new look to computing of every kind.

Today MRAM is king, but ReRAM is a strong contender to take its place. It’s too early to know whether ReRAM or MRAM will win out as the dominant new non-volatile memory. However, if something interesting happens with FRAM or PCM, it could change everything.

Yes, But What of FRAM and PCM?

FRAM has been around for a very long time, since 1952, but it still hasn’t gained much prominence, even though it has, by far, out-shipped all other new memory technologies combined. That may come as a surprise, since FRAM is rarely discussed.

FRAM has very low write energy. This won it design slots in commuter train fare cards, where the value stored on the card is updated using only the power of the interrogating RFID signal. While FRAM leads in unit shipments, the tiny size of these chips has kept the technology from consuming many wafers. Consequently, its process isn’t anywhere near as well understood as those of the largest technologies: DRAM and NAND flash.

But FRAM is moving from exotic materials that threatened to contaminate wafer fabs to a material that everyone knows and understands well: hafnium oxide. Certain problems still need to be worked out, but this should happen in good time.

As for PCM, it’s a good technology, first publicly presented in a 1969 article by Gordon Moore and Ron Neale, and later produced and sold by Intel, Samsung, and STMicroelectronics. It went on to be the basis of the 3D XPoint memory behind Intel’s Optane products. But after Optane lost close to $10 billion, Intel decided that enough was enough.

With its Optane push, Intel produced more PCM wafers than all other emerging memory technologies combined. It would seem, then, that the process should by now be well understood.

It may well be, but we have seen no sign of any company licensing it from Intel, so we’re left to wonder whether it will be adopted in the future.

How about Discrete (Standalone) Memory Chips?

Today’s discrete MRAM, ReRAM, and FRAM chips are largely relegated to niches because they’re a couple of orders of magnitude costlier than mainstream technologies. Imagine going to your boss and saying: “I designed in a more expensive memory.” When the boss asks why, you might not have a good answer, unless your system is space-bound (new memories withstand a lot more radiation than standard memories) or some other attribute, like power, makes the more expensive part a necessity.

But once costs come into line with mainstream memories, bosses around the world will be asking why engineers did not use one of these technologies.

What will it take to bring costs into the mainstream level? It comes down to wafers processed.

Memories are enormously sensitive to economies of scale. If you make an enormous number of a particular chip, it can end up cheaper than a chip that should be cheaper because it has a smaller die. Optane proved that point.
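The article doesn’t spell out a cost model, so the Python sketch below swaps in a common one, the experience curve (Wright’s law), in which each doubling of cumulative wafers processed cuts the cost per wafer by a fixed fraction. It compares a hypothetical low-volume chip with half the die area against a high-volume chip twice its size. Every cost, volume, and learning rate is invented for illustration, and the die count ignores edge loss and yield.

```python
# Minimal sketch of why wafer volume can outweigh die size. It assumes a
# simple experience curve (Wright's law): each doubling of cumulative wafers
# processed cuts the cost per wafer by a fixed fraction. All numbers are
# hypothetical, and the die count ignores edge loss and yield.

import math

WAFER_DIAMETER_MM = 300.0


def wafer_cost(cumulative_wafers: float, reference_cost: float,
               reference_wafers: float, learning_rate: float) -> float:
    """Cost per wafer after `cumulative_wafers` have been processed.

    learning_rate=0.8 means cost falls to 80% each time volume doubles.
    """
    exponent = math.log2(learning_rate)
    return reference_cost * (cumulative_wafers / reference_wafers) ** exponent


def dies_per_wafer(die_area_mm2: float) -> float:
    """Rough gross die count: wafer area divided by die area."""
    wafer_area_mm2 = math.pi * (WAFER_DIAMETER_MM / 2) ** 2
    return wafer_area_mm2 / die_area_mm2


def cost_per_die(die_area_mm2: float, cumulative_wafers: float,
                 reference_cost: float = 10_000.0,
                 reference_wafers: float = 1_000.0,
                 learning_rate: float = 0.8) -> float:
    """Wafer cost at the given volume spread across the dies on that wafer."""
    return (wafer_cost(cumulative_wafers, reference_cost, reference_wafers, learning_rate)
            / dies_per_wafer(die_area_mm2))


if __name__ == "__main__":
    # A niche chip with half the die area vs. a high-volume chip twice as large.
    niche = cost_per_die(die_area_mm2=50.0, cumulative_wafers=1_000)
    mainstream = cost_per_die(die_area_mm2=100.0, cumulative_wafers=1_000_000)
    print(f"small die, low volume:  ${niche:.3f}")
    print(f"large die, high volume: ${mainstream:.3f}")
```

Under these assumptions the larger, high-volume die comes out at less than a quarter of the niche chip’s cost per die, which is the effect described above.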

What’s going to drive a high wafer volume? Embedded memories should do the trick. Remember, it’s not how many bits ship, or how many chips. It’s how many wafers get processed with the technology, and embedded memories seem to be the starting point at which all of this will happen.

Recent Happenings in the Memory Arena

Our new memory report covers a much broader scope than this, but many recent developments show that these new memories are well on their way to changing the computing landscape.

First, leading fitness monitor makers, hearing aid companies, and other lifestyle device makers are starting to try out new memories as the embedded memory in their SoCs. Meanwhile, there’s a lot of interest in these memories for automotive applications because of their wide operating temperature ranges and low energy consumption, as long as they’re inexpensive, and they’re getting there.

In addition, we know of two DRAM designs that have tapped into FRAM: one from Intel a few years ago, and one from Micron in December 2023. It’s been over 20 years since one of us published a report proposing that FRAM might move into DRAM’s market, and the pieces are finally falling into place.

Lots of ReRAM activity is also taking place. Weebit Nano has methodically moved forward to make ReRAM a manufacturable technology, and may prove that it’s good management, not just good technology, that wins markets.

However, it’s still too early to name a winner in this battle. MRAM is on top now, with ReRAM chasing close behind. FRAM has an advantage with the widespread use of hafnium oxide, and should anyone pick up all of the technology and expertise that Intel put into 3D XPoint, then PCM could be a surprise winner.

The best anyone can do today is closely watch the developments in this market and be ready to adopt the technology that appears to be pulling ahead of the others. We naturally recommend reading our report, "A Deep Look at New Memories," to understand all of this in depth.