1970s: Microprocessors—The Little Engines That Could

Radicalism was in the air in 1970. The Vietnam war wasn't gaining much support on college campuses. In the streets, the flower-power Hippies had been shoved aside by angry, politicized Yippies, who took to the protest barricades to hurl scorn—and a couple of rocks—at the Establishment. Nixon's White House wasn't having any of it, though, and battle lines were drawn here at home. The "enemies list" that resulted set the tone for a presidency that ended in defeat and disgrace in the Waterloo known as Watergate.

But radicalism wasn't confined to the streets. In the engineering labs of semiconductor manufacturers, radical ideas were taking shape. The design, and even the basic concept, of electronic systems had rushed headlong to a defining moment. In the pre-IC days, transistors were employed to build logical functions, taking the place of the vacuum tube and improving on many of its shortcomings. When transistors were combined on ICs, they represented a great step forward in miniaturization and flexibility.

The time was right for the next great step. What if circuitry could be made programmable? Why should we settle for circuits that could only do the single function they were designed to do? And what if we could put a whole lot more of the circuitry on a single silicon substrate?

Those concepts, realized in the microprocessor, are the fulcrum upon which almost all electronic advances since have rested. In 1969, a Japanese manufacturer named Busicom contracted Intel to produce the chips for its new programmable calculator. A team of Intel engineers convinced Busicom that its proposed design was too cumbersome to build with the usual custom-logic techniques.

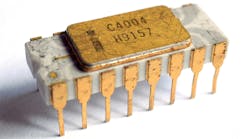

The team argued that the design was best realized as a four-chip set: a central processing unit (CPU), or "brain," plus ROM, RAM, and an I/O shift register. The result, introduced in 1971, was the 4004 microprocessor, a 4-bit device whose descendants have invaded every aspect of human existence in the 30-odd years since (Fig. 1).

Measuring one-eighth by one-sixteenth of an inch, the 4004 had all of 2300 transistors. At the close of the 1960s, the term "large-scale integration," or LSI, had begun to be applied to the increasingly complex ICs flooding out of the semiconductor foundries. The 4004 was the clearest evocation yet of what LSI would ultimately come to mean. This fingertip-sized slab of silicon was as powerful as the 30-ton ENIAC computer built in 1946. And although they're not exactly computers on a chip, microprocessors perform many of the functions of the CPUs in the computers that came before them.

It's almost poetic justice that a calculator design was the microprocessor's first home. Calculators, digital watches, and other consumer devices were at the vanguard of electronic applications in the early seventies. The changes wrought by the explosion of IC technology into society reached in every direction, even into the engineering labs themselves. The calculators were the death of the engineers' beloved slide rules. The creators had to face their creations and, sometimes, they resisted them.

In society at large, technology wasn't always immediately accepted either. The 1970s can be thought of as the first decade in which consumer electronics applications carried as much clout as, if not more than, military applications. A lot of adjustments came with the rapid dissemination of microprocessors into homes, businesses, and factories. Who hasn't struggled with programming a VCR? That minor inconvenience, which first appeared on the scene in mid-decade, was vexing enough. But technology was downright threatening to some. Could we really trust those computers to keep track of our bank accounts?

Would we lose our jobs to computerization? Scariest of all, what if a computer went haywire and started a nuclear war? These questions, and others, were raised by an anxious society that remembered all too well the rogue computer HAL in Stanley Kubrick's 1968 film, 2001: A Space Odyssey.

Despite the anxiety that microprocessors may have provoked, there was no putting them back in the box. For one thing, their size and programmability lent them to applications that were simply impossible using discrete IC approaches.

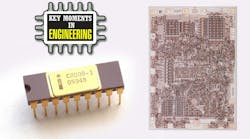

On the heels of the 4004, Intel quickly came up with the 8008, an 8-bit processor that further broadened the scope of applications for these machines (Fig. 2). More powerful and flexible than the 4004, the 8008, while still a limited device, was the only 8-bit processor available until the improved 8080 appeared in 1974. By mid-decade, a slew of microprocessors had been developed. Sixteen-bit microprocessors made their debut before the decade ended.

Over the course of the decade, microprocessors and their cousins, microcontrollers, appeared in scores of appliances, including microwave ovens and washing machines. Video games, which Pong had brought into the mainstream, were among the popular applications, sparking a huge industry that has been not only a voracious consumer of microprocessors, but also a cauldron of innovation for graphics and display technology to this day.

Microprocessors are powerful devices, but it was understood that they couldn't function without memory. By the late sixties, the concept of cache memory had been devised by IBM engineers and implemented using bipolar memories that were expensive and had small capacities. But in 1970, Intel rolled out a 1024-bit dynamic RAM that set the stage for later advances in memory devices.

In 1971, Intel produced the first UV EPROM, a 2-kbit device, launching a memory technology that would survive for 20 years (Fig. 3). With DRAMs and UV EPROMs on the scene along with the microprocessor, engineers had quite a bit to work with as they moved forward with computer development.

Developments in seemingly more mundane areas were key to the broad acceptance of microprocessors, memories, and other ICs in the seventies. Chief among these was packaging. Although it was not yet the critical element it would become in the 1990s, IC packaging had advanced steadily through the sixties to prepare the way for the larger die sizes in the LSI era.

The multichip module (MCM) made its first appearance on the scene in the seventies, helping to bridge the gap between the relatively primitive silicon process technology of the time and the integration that designers wished to achieve. MCMs were a means for engineers to pack more ICs and functionality onto a substrate and within the same leadframe. It wasn't as good as having all of the silicon on the same chip, but it was a meet-in-the-middle kind of approach that still resulted in improved performance compared with ICs packaged separately.

Advances in test equipment came rapidly, too. A lot of digital logic circuits were being designed and built, and a new class of instrumentation was needed to keep up with their mushrooming complexity. It arrived in 1973, when Hewlett-Packard introduced the logic analyzer. The instrument was significant because it allowed designers to verify more complex digital systems at a much faster pace than ever before.

There's little doubt that the microprocessor was the single greatest development in circuit integration in the seventies. But other developments were taking place that led to much greater integration of discrete digital logic. Circuits were becoming so large and complex that designing logic from scratch with discretes was too time-consuming for an industry that was shifting to the shorter design cycles driven by consumer demand.

The first level of integration manifested itself as small arrays of bipolar transistors called masterslices. By 1974, the first CMOS gate arrays were introduced, presenting a threat to the discrete logic business. User-programmable logic arrays emerged the following year.

All of this sophisticated silicon began to strain the design infrastructure of the day. Throughout the sixties and into the seventies, transistor-level and early IC design was done pretty much by hand, with designers cutting sheets of rubylith to create lithography masks.

By the early 1970s, the circuits' complexity had outstripped the ability, and the patience, of designers. The design-automation industry began its ascent. At the printed-circuit-board level, change came with the early Calma and Applicon workstation-based CAD systems, which for the first time allowed designers to enter board layouts into a computer in an editable form.

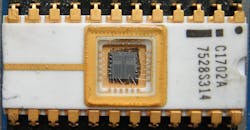

The progress in memories, microprocessors, and other supporting technologies set the stage for computing to move into the mainstream. In 1974, Ed Roberts, the owner of a struggling calculator company in Albuquerque, turned to the new technology in an effort to save his company. Roberts developed what would become known as the first personal computer, the Altair 8800, offering it in kit form to electronics hobbyists (Fig. 4).

There wasn't much to the Altair, though. There was no screen, no keyboard, and no software. Programming it was accomplished in machine code using a bank of toggle switches. To some, the machine's shortcomings represented an opportunity for innovation.

Two early Altair enthusiasts, a student named Bill Gates and his friend Paul Allen, wrote an interpreter that let the Altair be programmed in BASIC, and they licensed it to Roberts. Roberts later hired Allen as a software developer. Gates and Allen went on, of course, to start a little company that would eventually settle in the Seattle area.

Yet another young man who couldn't afford an Altair was inspired to design his own personal computer. Steve Wozniak, with the help of his friend Steve Jobs, worked in Jobs' family's garage until they got their project working. Like another pair of garage-shop entrepreneurs almost 40 years before, Bill Hewlett and Dave Packard, Wozniak and Jobs had a dream and wouldn't be denied. Their Apple Computer Company would evolve into one of the fastest-growing companies in what had become known as Silicon Valley.

Just as the microprocessor's development had kick-started the memory IC business, the arrival of personal computers spawned other new development paths. The machines needed software to run. Among the early popular applications were the VisiCalc spreadsheet program and the WordStar word processor. The growth of the software industry made the new machines much more attractive to businesses and home users.

With software coming up to speed, another crucial need for PCs was data and program storage. Early in the seventies, IBM had introduced the 8-in. floppy disk. For the burgeoning PC industry, a more interesting version came in 1976 when the 5.25-in. floppy arrived. An inexpensive, removable storage medium was just the thing for programs and data. In the days before local-area computer networking had become prevalent, removable and easily transportable media were essential for moving data from machine to machine.

Local-area networking was, however, already in the works. A researcher at Xerox's Palo Alto Research Center, Bob Metcalfe, had already completed work on a networking protocol that would form the basis for many, many miles of local-area networks: Ethernet. Metcalfe's brainchild is still in broad use today.

Just as the seventies were ending, the pieces were in place for personal computing to go mainstream. Meanwhile in Japan, the first cellular telephones were coming on line. In both Europe and the U.S., fiber optics trials had been completed. These trends, seemingly disparate, would eventually coalesce as the computer and telephone took their initial steps along the path to convergence.

Article published 10/20/2002. Updated 8/28/2024